OGProxy - a replacement for iFramely

-

Your solution is working fine. It should be as simple to implement as Julian’s plugin and it would be perfect ^^

-

@phenomlab said in Potential replacement for iFramely:

and now appears to work very well.

Are you sure?

After one hour!!

@DownPW yes, mine is doing the same now.

-

@DownPW it’s not going to be a plugin, as it requires its own reverse proxy on a subdomain (as I mentioned previously) and everything is client side. A plugin would be pointless as it has no settings - only custom JS code.

-

I have literally one bug (a simple one) to resolve, then clean up the code and restyle done of the elements (@DownPW is right in the sense that they are a bit big

) and it’s ready for testing.

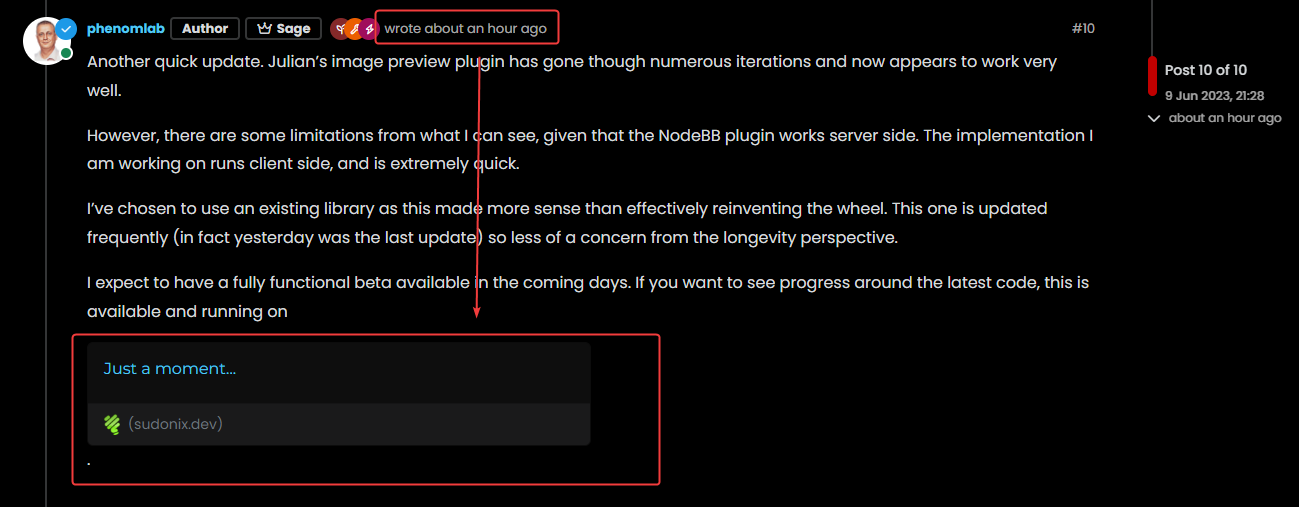

) and it’s ready for testing.In reality, I expect this to work almost flawlessly on other user forums as the code has been though several iterations to ensure it’s both lean and efficient. If you test it in sudonix.dev you’ll see what I mean. I found an ingenuous way to extract the

favicon- believe it or not, despite the year being 2023, web site owners don’t understand how to structuremetatags properly 🤬🤬.I learned today that Google in fact has a hidden API that you can use to get the

faviconfrom any site which not only works flawlessly, but it’s extremely efficient and so, I’m using it in my code - pointless reinventing the wheel when you don’t have to.The final “bug” isn’t really a “bug” in the traditional sense. In fact, its nothing to do with my code, but one specific variable I look for in the headers of each URL being crawled is

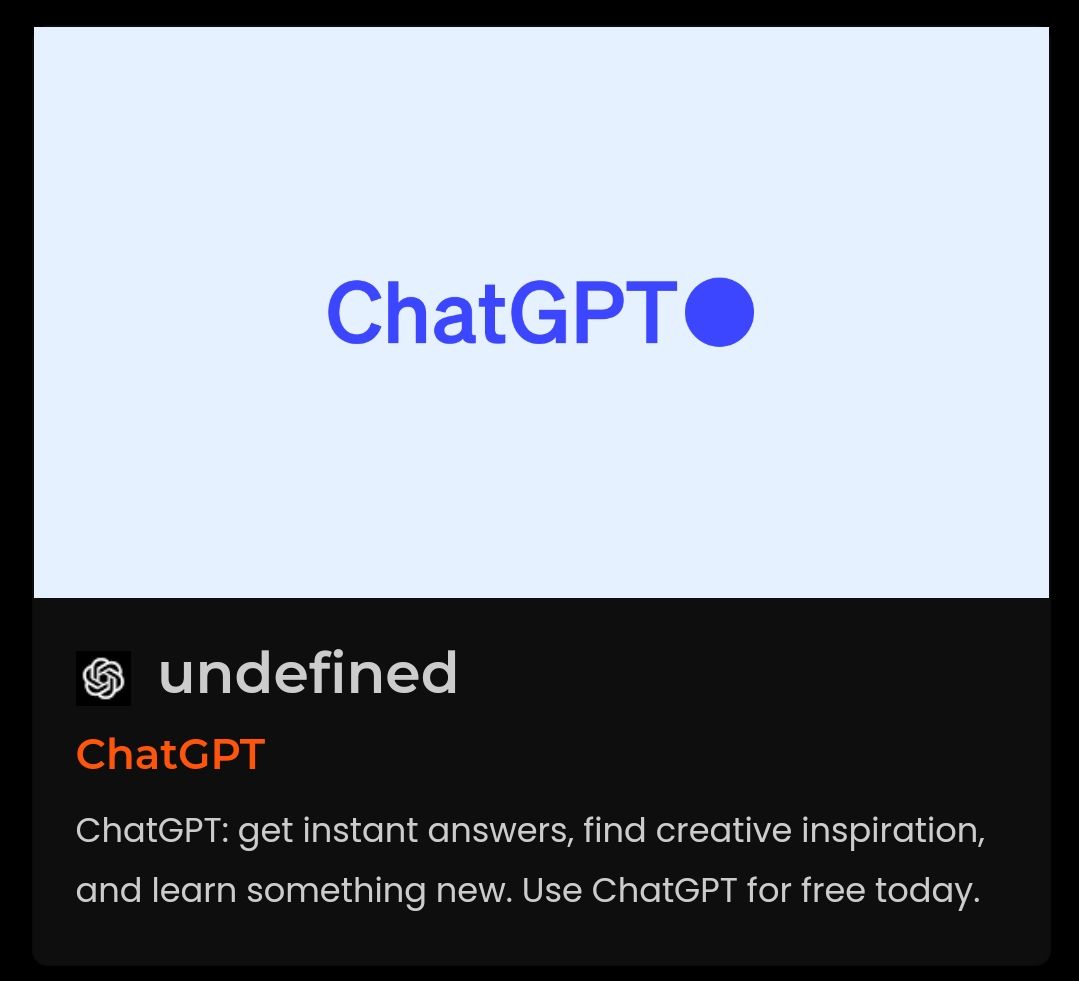

ogSiteNameThe “og” part is short for OpenGraph - an industry standard for years, yet often missing in headers. This has the undesired effect of returning

undefinedwhen it doesn’t exist, meaning reviews look like thisNotice the “undefined” text where the site title will be - that looks bad, to I’ll likely replace the with the domain name of the site of the title is missing.

Seriously, if you haven’t tested this out yet, if suggest to do so on sudonix.dev as soon as you can.

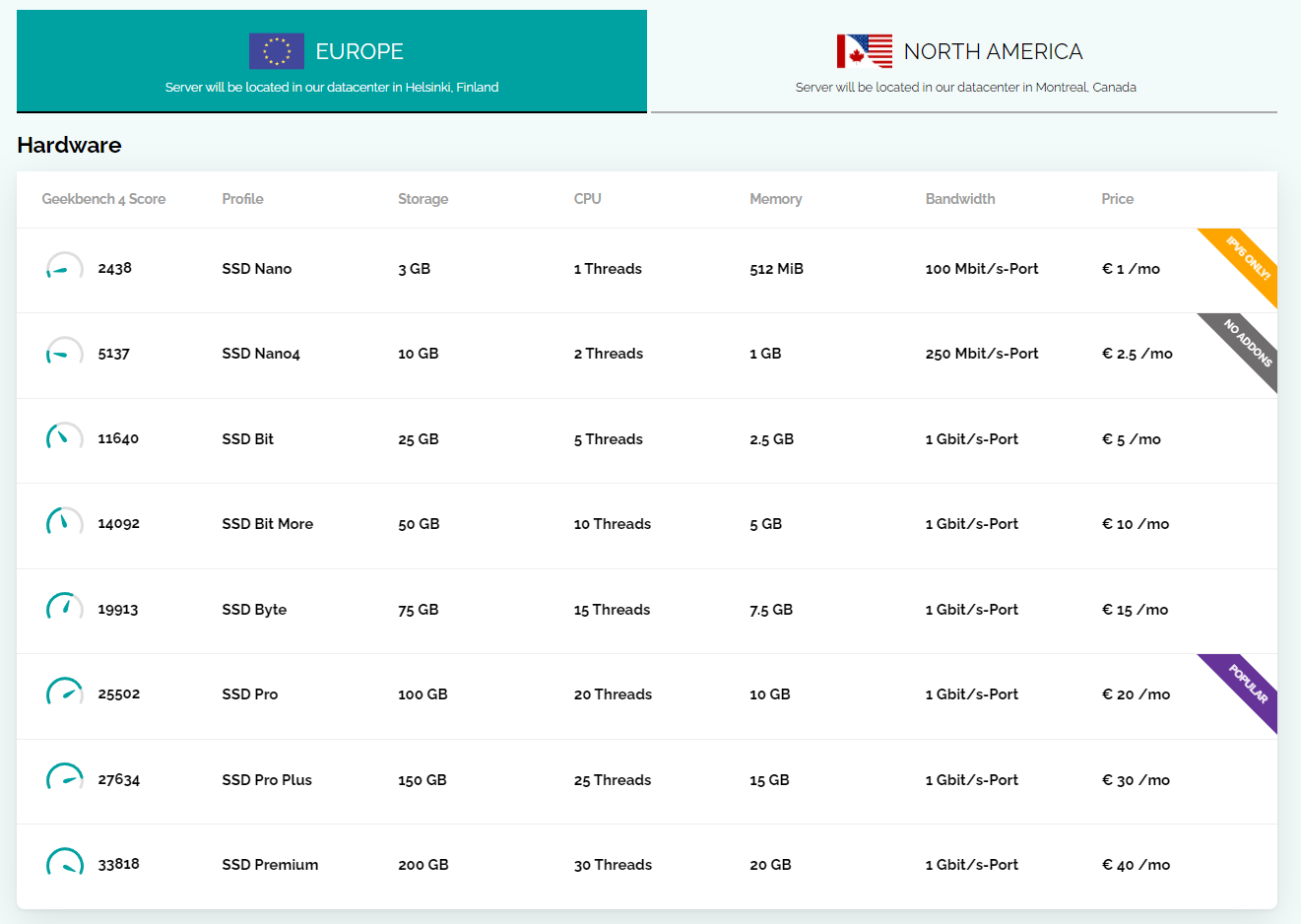

Currently, this site is running

nodebb-plugin-link-previewsbut will be switching to my client side version in the coming days.As soon as it’s running here, I’ll release the code and a guide.
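
For anyone wondering what the site name fallback and the favicon lookup could look like, here is a minimal sketch. It is not the OGProxy source: `siteNameFor` and `faviconUrlFor` are hypothetical helper names, the shape of the `meta` object is assumed, and the post doesn’t name the Google endpoint, so the `s2/favicons` URL below is an assumption about which service is meant.

```javascript
// Hypothetical helpers for illustration only, not taken from OGProxy.

// Return the Open Graph site name, or fall back to the bare domain when
// the property is missing (which would otherwise render as "undefined").
function siteNameFor(meta, pageUrl) {
  const name = typeof meta?.ogSiteName === "string" ? meta.ogSiteName.trim() : "";
  if (name) return name;
  return new URL(pageUrl).hostname.replace(/^www\./, "");
}

// Build a favicon URL from the page's hostname. The endpoint is an
// assumption: the post only says "a hidden Google API".
function faviconUrlFor(pageUrl, size = 32) {
  const host = new URL(pageUrl).hostname;
  return `https://www.google.com/s2/favicons?domain=${encodeURIComponent(host)}&sz=${size}`;
}

console.log(siteNameFor({}, "https://www.reddit.com/r/nodebb")); // reddit.com
console.log(faviconUrlFor("https://sudonix.dev/topic/1"));
// https://www.google.com/s2/favicons?domain=sudonix.dev&sz=32
```

Falling back to the hostname means the preview always has something sensible in the title slot, even when the remote site ships no `og:site_name` at all.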

-

@phenomlab said in Potential replacement for iFramely:

believe it or not, despite the year being 2023, web site owners don’t understand how to structure meta tags properly 🤬🤬.

Ha ha I believe you !!

@phenomlab said in Potential replacement for iFramely:

Notice the “undefined” text where the site title will be - that looks bad, so I’ll likely replace that with the domain name of the site if the title is missing.

Yep, it would clearly be better with the domain name when OpenGraph is absent from the headers

@phenomlab said in Potential replacement for iFramely:

Seriously, if you haven’t tested this out yet, I’d suggest you do so on sudonix.dev as soon as you can.

Already tested, very fast

@phenomlab said in Potential replacement for iFramely:

Currently, this site is running nodebb-plugin-link-previews but will be switching to my client side version in the coming days.

As soon as it’s running here, I’ll release the code and a guide.

Hell yeah

-

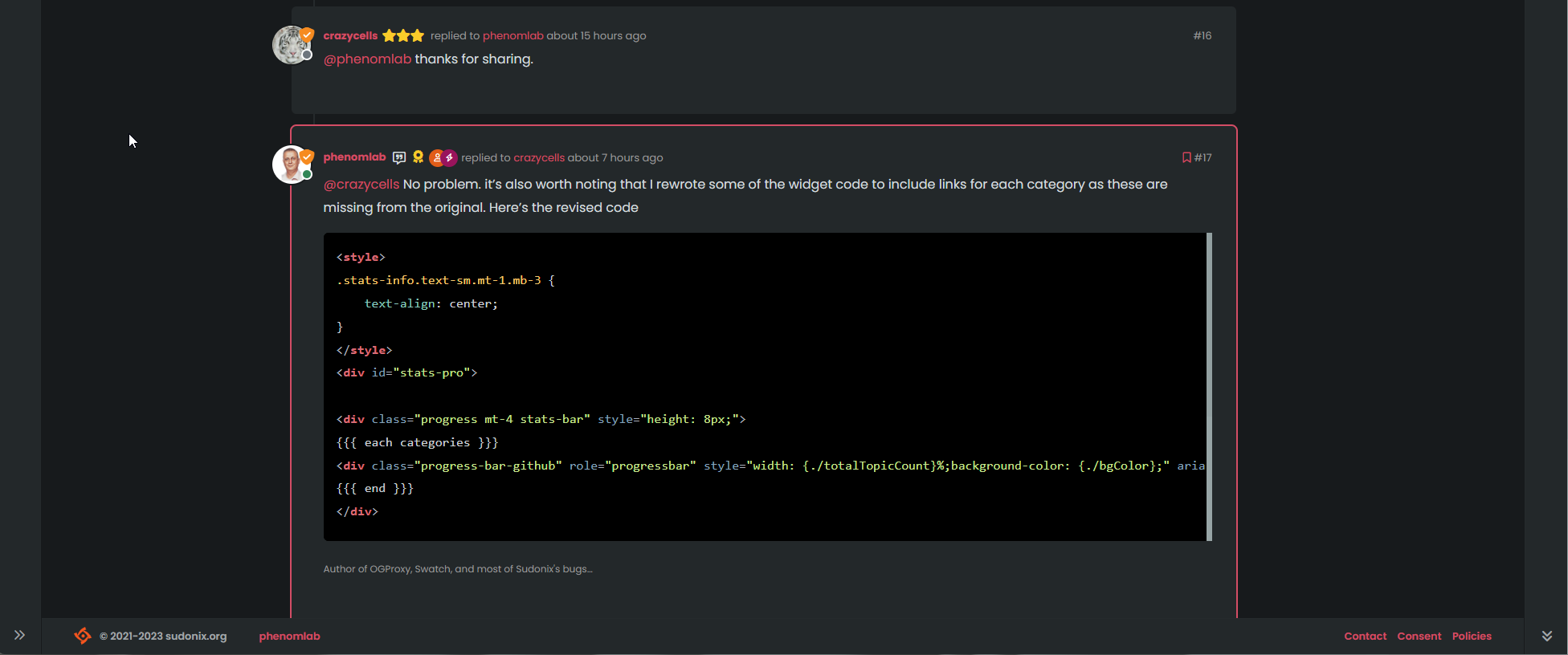

Ok, spent some time on this late last night, and the good news is that it’s finished, and ready for you to try out.

Please do remember that this isn’t a plugin, but a client side `js` function with a server side proxy. However, don’t be put off as the installation is simple, and you should be up and running in around 30 minutes maximum.

It does require some technical knowledge and ability, but if you’ve set up NodeBB, then you can do this easily - besides, there will be full documentation so you are taken through each step.

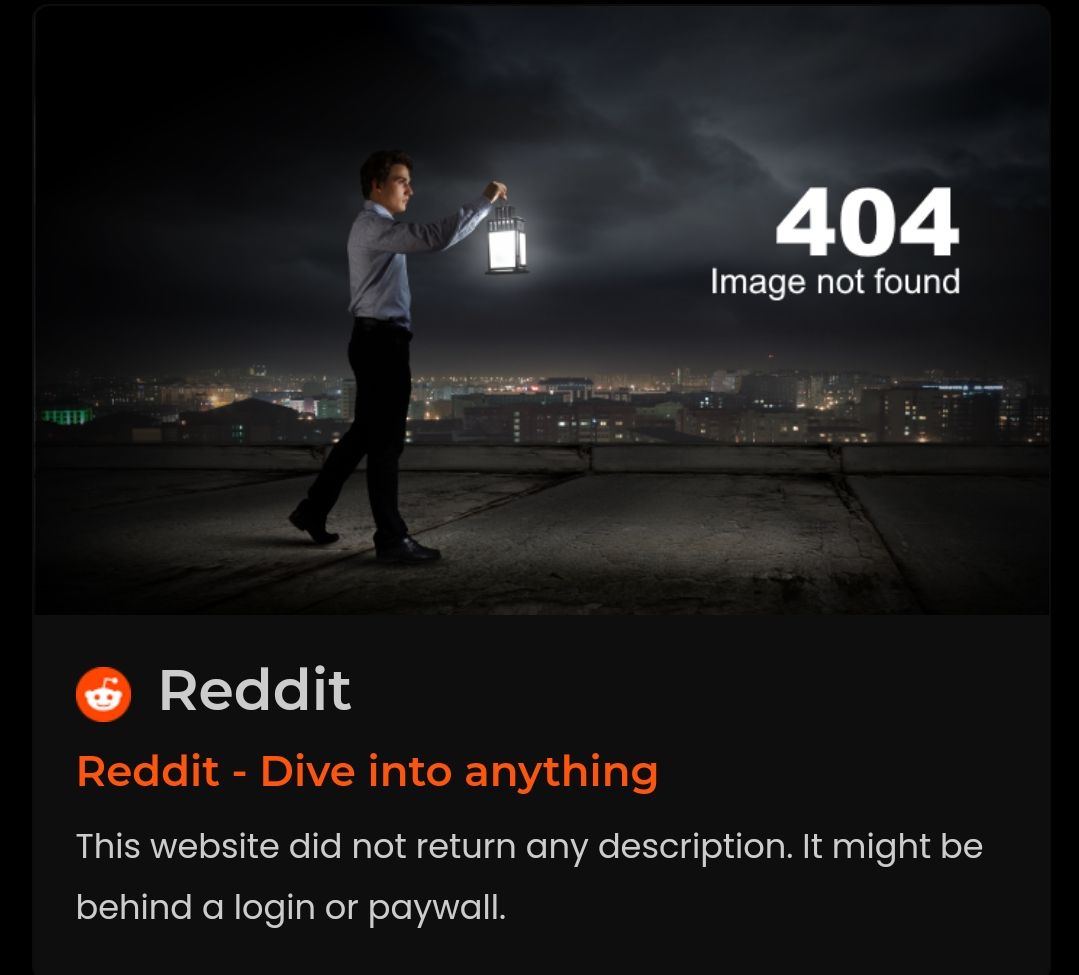

Some other points to note are that not every site returns valid Open Graph data - in fact, some don’t even return an image (yes, Reddit, I’m talking about you) when they are closed to the public, or behind a registration form / membership grant, or in some cases, a paywall.

When this scenario is met, the problem arises that no valid image is being returned. I did toy with the idea of using a free random image API, and even wrote the code for it - then realised nature scenery didn’t quite align with a tech site like Reddit.

Ok - the only thing to do here is to generate your own image then, and bundle that in with the proxy. For this purpose, I chose an image (I have a subscription to stockphotosecrets.com, which is an annual cost to me. I’ve cancelled the subscription as I don’t use it, but provided I downloaded the image before the term expires, I have the right to use any images after the fact) and then added some text parts to it so that it could then be used as a placeholder for when the image is absent.

Here’s that image

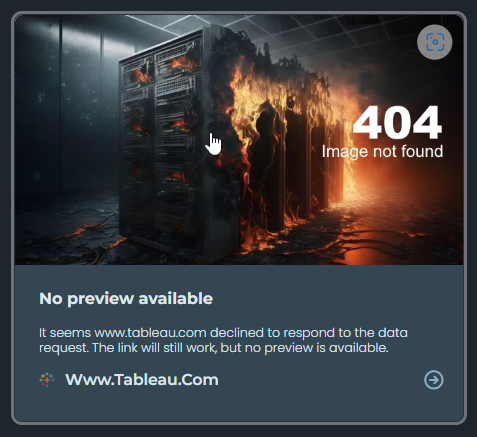

It’s sparse, but functional. And given my comment earlier around membership and paywalls, here’s what that specific scenario would look like when encountered

Before I go ahead and provide the documentation, code, and proxy server, I’d recommend you try out the latest code on sudonix.dev.

Enjoy.

-

Mark, could you please install it on my site too?

-

@cagatay Let me put the guide together first, and see if you can get it working by yourself. It’s not a difficult installation, and once you understand how the components intersect and work together, I think you’ll be fine. If the worst comes to it however, I’m always happy to help.

-

Already found 2 bugs, which have been committed to live code

- Relative path is provided in some instances, so a function now exists to return the full path instead so the image is rendered

- OGProxy does not target chat - this has been fixed
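
The relative path fix can be sketched along these lines. This is an assumption about the approach rather than the committed code, and `absoluteImageUrl` is a hypothetical name; it leans on the standard `URL` constructor, which resolves a relative path against the page it came from.

```javascript
// Hypothetical helper: turn a relative og:image path into a full URL.
function absoluteImageUrl(imagePath, pageUrl) {
  try {
    // The URL constructor resolves relative, root-relative and
    // protocol-relative paths against the base; absolute URLs pass through.
    return new URL(imagePath, pageUrl).href;
  } catch {
    // Invalid input: let the caller fall back to a placeholder image.
    return null;
  }
}

console.log(absoluteImageUrl("/img/cover.png", "https://example.com/blog/post"));
// https://example.com/img/cover.png
console.log(absoluteImageUrl("cover.png", "https://example.com/blog/post"));
// https://example.com/blog/cover.png
```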

-

phenomlab referenced this topic on 13 Jun 2023, 14:58

-

One thing I never really took into account when developing OGProxy was the potential for CLS (Cumulative Layout Shift). If you’re sitting there thinking “what is he talking about?” then this goes some way to explain it

Not only does this harm the user experience in the sense that the page jumps all over the place when you are trying to read content, but is also harmful when it comes to site performance and SEO, as this is a key measurable when checking page performance and speed.

Based on this, I’ve made several changes to how OGProxy works - some of which I will outline below

- Link Selection and Filtering

The function selects certain links in the document and filters out unnecessary ones, like internal links or specific classes.

It further filters links by checking for domains or file types that should be ignored.

- Placeholders for Preventing Cumulative Layout Shift (CLS)

Placeholders are inserted initially with a generic “Loading…” message and a temporary image. This prevents CLS, which happens when the page layout shifts due to asynchronously loaded content. By having a placeholder occupy the same space as the final preview, the layout stays stable while data is fetched.

- AJAX Request to Fetch Link Metadata

The function sends an AJAX request to an OpenGraph proxy service to retrieve metadata (title, description, and image) about each link. It uses the same proxy server and API key to fetch this information as its predecessor.

- Dynamic Update of Placeholder with Real Data

Once data is retrieved, the placeholder is replaced with the actual preview. Title, description, and image are updated based on the fetched metadata. If an image is missing or invalid, it defaults to a specified “404” image.

- Error Handling and Debugging

Debug logging and error handling ensure that if data can’t be fetched, the placeholders are either left unchanged or logged for troubleshooting.

This approach provides a smoother user experience by managing both loading time and visual stability, which are critical for preventing CLS in dynamically loaded content.
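
To make the steps above a bit more concrete, here is a rough, DOM-free sketch of the filtering, placeholder, and update logic. Everything in it is hypothetical (the names, the ignore lists, the card size, the `/assets/404.png` path); it only illustrates the idea that the placeholder and the final preview share the same dimensions, so nothing moves when the data arrives. The rendering code is assumed to apply `width` and `height` to both states.

```javascript
// Hypothetical names and values throughout; not the OGProxy source.
const IGNORED_DOMAINS = ["youtube.com"];
const IGNORED_EXTENSIONS = [".png", ".jpg", ".gif", ".pdf", ".zip"];
const FALLBACK_IMAGE = "/assets/404.png";
const CARD_SIZE = { width: 600, height: 120 }; // reserved up front to avoid CLS

// Link selection and filtering: skip internal links, ignored domains,
// ignored file types, and anything that is not http(s).
function shouldPreview(href, siteOrigin) {
  let url;
  try {
    url = new URL(href, siteOrigin);
  } catch {
    return false;
  }
  if (url.protocol !== "http:" && url.protocol !== "https:") return false;
  if (url.origin === siteOrigin) return false;
  const host = url.hostname.replace(/^www\./, "");
  if (IGNORED_DOMAINS.some((d) => host === d || host.endsWith("." + d))) return false;
  const path = url.pathname.toLowerCase();
  return !IGNORED_EXTENSIONS.some((ext) => path.endsWith(ext));
}

// Placeholder shown while the metadata request is in flight.
function placeholderCard() {
  return { ...CARD_SIZE, loading: true, title: "Loading…", description: "", image: FALLBACK_IMAGE };
}

// Swap in the real data. A failed fetch (meta is null) leaves the
// placeholder unchanged; a missing image falls back to the 404 image.
function resolveCard(card, meta) {
  if (!meta) return card;
  return {
    ...CARD_SIZE,
    loading: false,
    title: meta.title || card.title,
    description: meta.description || "",
    image: meta.image || FALLBACK_IMAGE,
  };
}
```

Because both card states carry the same `width` and `height`, the browser can lay the page out once and only repaint the card contents when the proxy responds.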

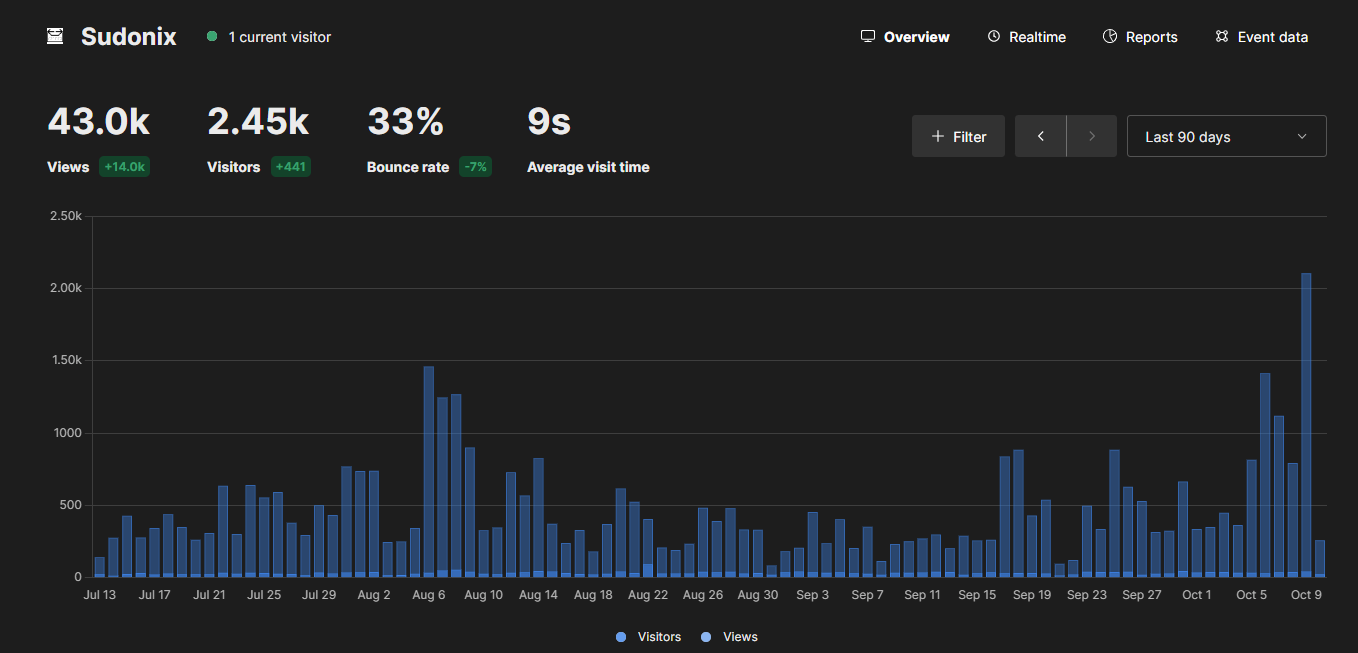

The new code is active on this site, and there’s not only a huge visual improvement, but also serious performance gains. I’ll give it a few weeks, then formally release the new code.

-

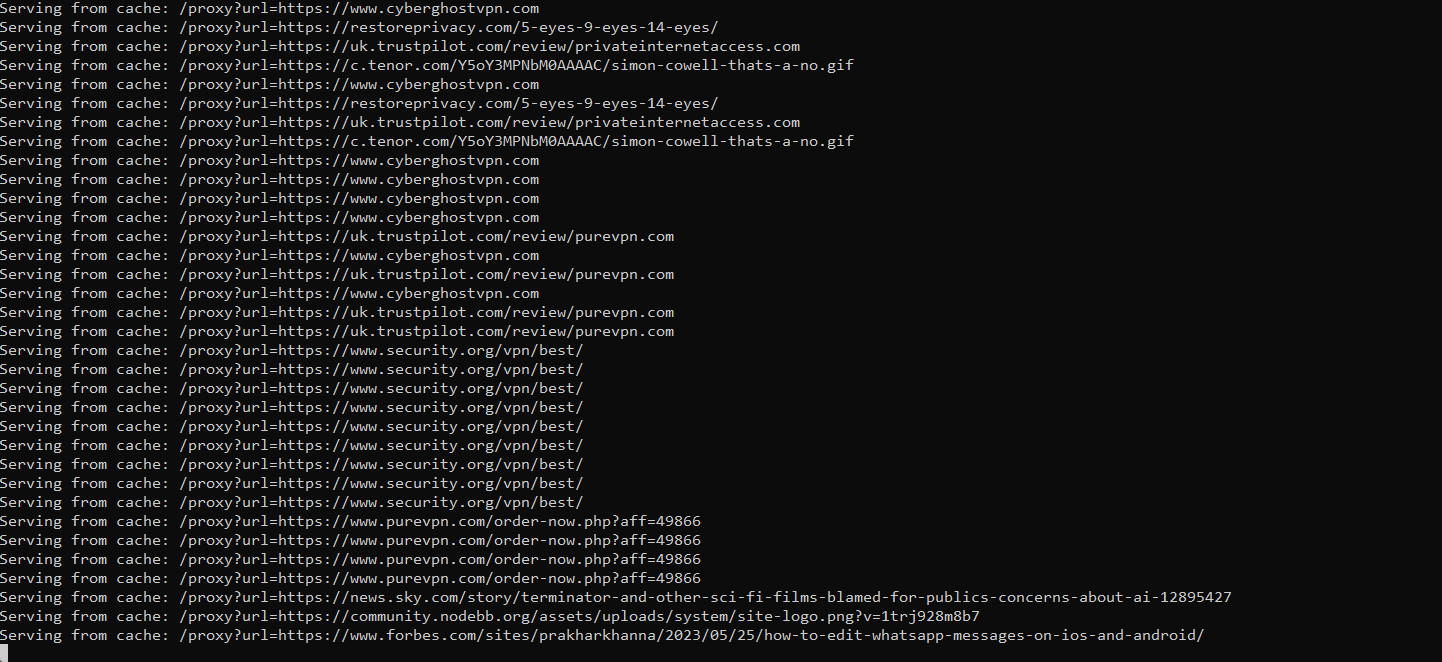

And now, changes made to the back-end Proxy Server to increase performance

Key Changes Made:

- Rate Limiting

Added express-rate-limit to limit requests from a single IP.

- Logging

Integrated morgan for logging HTTP requests.

- Health Check Endpoint

Added a simple endpoint to check the server’s status.

- Data Validation

Implemented input validation for the URL using Joi.

- Environment Variables

Used `dotenv` for managing sensitive data like API keys and port configuration.

- Error Handling

Enhanced error logging for debugging purposes.

- Asynchronous Error Handling

Utilize a centralized error-handling middleware to manage errors in one place.

- Environment Variable Management

Use environment variables for more configuration options, such as cache duration or allowed origins, making it easier to change configurations without altering the code.

- Static Response Handling

Use a middleware for handling static responses or messages instead of duplicating logic.

- Compression Middleware

Add compression middleware to reduce the size of the response bodies, which can improve performance, especially for larger responses.

- Timeout Handling on Requests

Handle timeouts for the requests made to the target URLs and provide appropriate error responses.

- Security Improvements

Implement security best practices, such as Helmet for setting HTTP headers, which can help protect against well-known vulnerabilities.

- Logging Configuration

Improve logging with different levels (e.g., info, error) using a logging library like winston, which provides more control over logging output.

- Graceful Shutdown

Implement graceful shutdown logic to handle server termination more smoothly, especially during deployment.

- Monitoring and Metrics

Integrate monitoring tools like Prometheus or an APM tool for better insights into the application’s performance and resource usage.

- Response Schema Validation

Use libraries like `Joi` or `Ajv` to validate responses sent back to the client, ensuring they conform to expected formats.

Again, this new code is running here in test for a few weeks.
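
Three of the items in the list (environment variables, URL validation, and rate limiting) are easy to illustrate without any dependencies. The sketch below deliberately swaps `dotenv`, `Joi`, and `express-rate-limit` for plain JavaScript so it can be run as is; it is not the proxy’s actual code, and the function names, environment variable names, and default values are all assumptions.

```javascript
// Dependency-free illustration. The real proxy uses dotenv, Joi and
// express-rate-limit for these jobs; every name below is hypothetical.

// Environment variables with defaults (dotenv would populate process.env).
function loadConfig(env = process.env) {
  return {
    port: Number(env.PORT) || 2000,
    apiKey: env.API_KEY || "",
    cacheSeconds: Number(env.CACHE_SECONDS) || 3600,
    allowedOrigins: (env.ALLOWED_ORIGINS || "")
      .split(",")
      .map((s) => s.trim())
      .filter(Boolean),
  };
}

// Input validation for the target URL (the role Joi plays in the list).
function validateTargetUrl(input) {
  if (typeof input !== "string" || input.length === 0 || input.length > 2048) {
    return { ok: false, error: "url must be a string of 1 to 2048 characters" };
  }
  let url;
  try {
    url = new URL(input);
  } catch {
    return { ok: false, error: "url is not valid" };
  }
  if (url.protocol !== "http:" && url.protocol !== "https:") {
    return { ok: false, error: "only http and https are allowed" };
  }
  return { ok: true, url: url.href };
}

// Fixed-window limiter per IP (the role of express-rate-limit).
function createRateLimiter({ windowMs, max }) {
  const hits = new Map(); // ip -> { count, windowStart }
  return function allow(ip, now = Date.now()) {
    const entry = hits.get(ip);
    if (!entry || now - entry.windowStart >= windowMs) {
      hits.set(ip, { count: 1, windowStart: now });
      return true;
    }
    entry.count += 1;
    return entry.count <= max;
  };
}
```

In a request handler, `allow(ip)` would be checked first (answering with HTTP 429 when it returns `false`), then `validateTargetUrl` (answering with HTTP 400 on failure), before any request is made to the remote site.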

-

wowww

Very good work my friend!!

-

@phenomlab the best of the best, great work Mark

-

Seeing as not every site on the planet has relevant CORS headers that permit data scraping, I thought I’d make this a bit more obvious on the response. The link is still rendered, but using the image below if the remote site refuses to respond to the request

Not entirely sold on the image yet - likely will change it, but you get the idea

It’s more along the lines of graceful failure rather than a URL that simply does nothing.
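
As a rough illustration of that graceful failure, the card data could be derived like this. The `result` shape (`ok`, `meta`), the `cardFor` name, the message text, and the `/assets/blocked.png` path are all assumptions, not what OGProxy actually uses.

```javascript
// Hypothetical image path and response shape, for illustration only.
const BLOCKED_IMAGE = "/assets/blocked.png";

// Always return something renderable: real metadata when the proxy got
// an answer, otherwise a card that still links to the original URL.
function cardFor(href, result) {
  const host = new URL(href).hostname;
  if (!result || !result.ok || !result.meta) {
    return {
      href,
      title: host,
      description: "No preview available for this site.",
      image: BLOCKED_IMAGE,
      failed: true,
    };
  }
  return {
    href,
    title: result.meta.title || host,
    description: result.meta.description || "",
    image: result.meta.image ?? null,
    failed: false,
  };
}
```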

-

@phenomlab I love that image and think it is perfect! LOL

-

Is the code updated on GitHub or not?

-

@DownPW Not yet. One or two bugs left to resolve, then it’ll be posted.

-

Ok I’ll install the old version in the meantime… Or maybe just wait if it’s not too long

-

Can’t wait
