NODEBB: Nginx error performance & High CPU

-

@phenomlab said in NODEBB: Nginx error performance & High CPU:

@DownPW try this

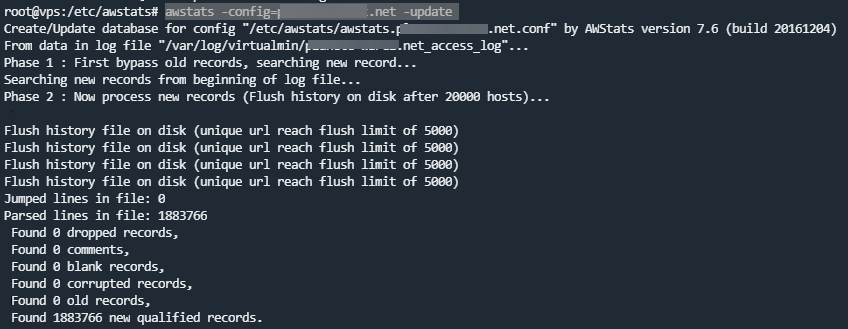

https://manpages.org/awstats

It worked with the following command (very long) in the CLI, but I’m afraid it won’t work with the Virtualmin module:

    awstats -config=XXX-XXX.XXX -update

@DownPW yes, that was a command line to get you the immediate information you needed. Did you try adding the necessary config to nginx so that it bypasses the reverse proxy?

-

Hello

Since yesterday, we have been experiencing a massive influx of new users.

Today we have more than 1000 simultaneous connections (account creation, participation in topics, etc.)

I had a lot of issues with Nginx that I think I got fixed.

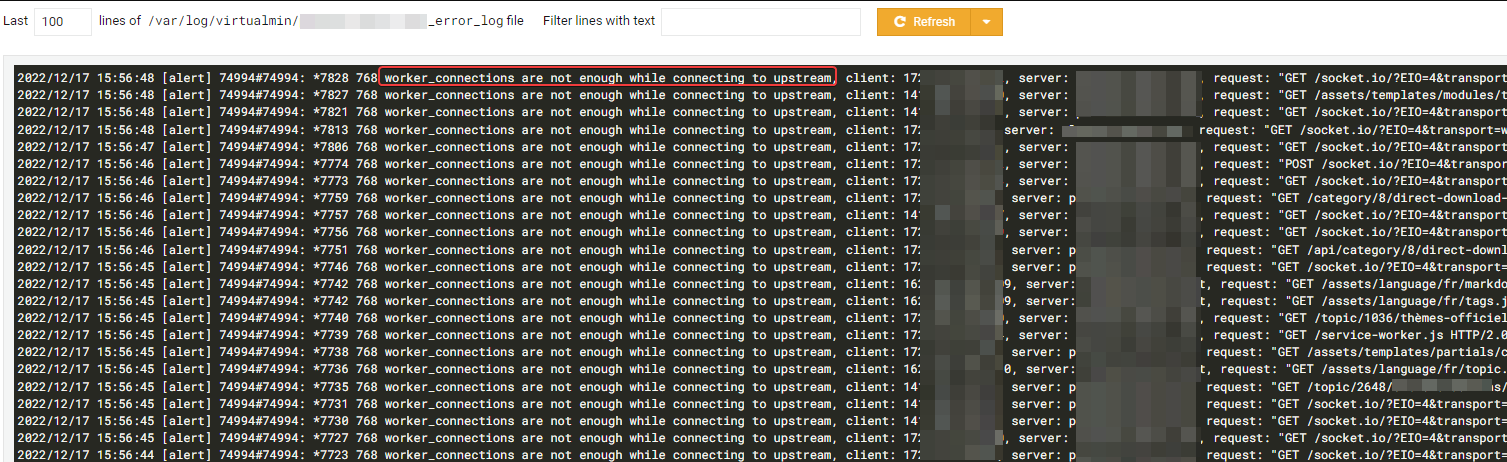

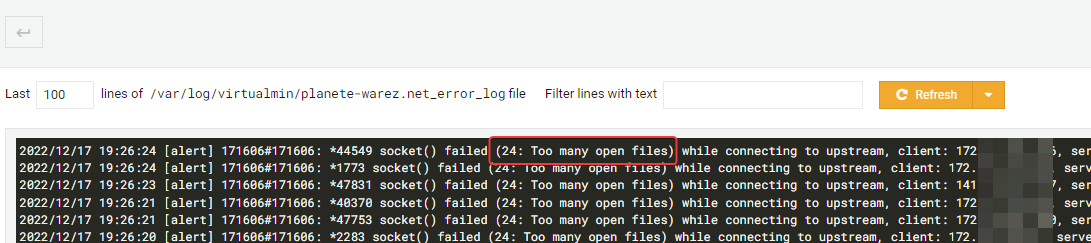

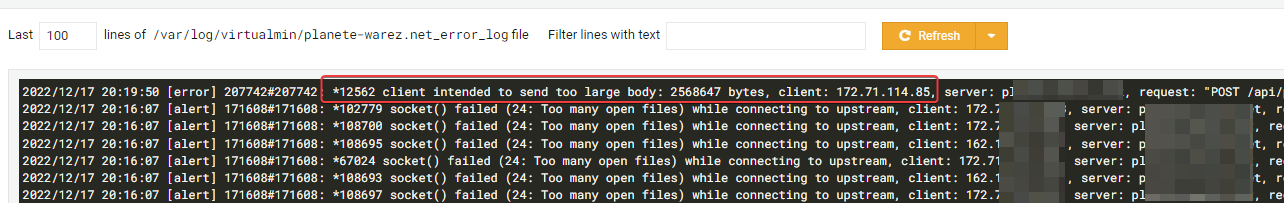

I have several “worker_connections are not enough” and “too many open files” errors in the nginx log:

I think I solved these 2 problems in the following way:

    systemctl edit --full nginx.service

    [Service]
    LimitNOFILE=70000

    nano /etc/nginx/nginx.conf

    worker_rlimit_nofile 70000;

    events {
        # worker_connections 768;
        worker_connections 65535;
        # multi_accept on;
        multi_accept on;
    }

I have “client intended to send too large body” errors too:

I think I solved this problem in the following way:

    nano /etc/nginx/nginx.conf

    http {
        ##
        # Basic Settings
        ##
        client_max_body_size 10M;

--> I hope this will be enough for Nginx.
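A quick way to sanity-check the two limits against each other is sketched below. It assumes each directive sits uncommented on its own line, as in a stock nginx.conf, and the function names are made up for illustration. According to the nginx documentation, worker_connections counts every connection a worker holds, including the ones to the proxied backend, so a visitor going through the reverse proxy uses roughly two of them.

```shell
# Sketch only: compare worker_connections with worker_rlimit_nofile.
# Assumption: directives are uncommented and written one per line.

# Print the value of the first uncommented occurrence of a directive.
nginx_directive() {
    local name="$1" file="$2"
    awk -v d="$name" '$1 == d { gsub(";", "", $2); print $2; exit }' "$file"
}

check_nginx_limits() {
    local file="$1" conns nofile
    conns="$(nginx_directive worker_connections "$file")"
    nofile="$(nginx_directive worker_rlimit_nofile "$file")"
    if [ -z "$conns" ] || [ -z "$nofile" ]; then
        echo "missing directive"
        return 2
    fi
    # Behind a reverse proxy each visitor holds two connections
    # (client side and upstream side).
    echo "proxied clients per worker: $((conns / 2))"
    if [ "$conns" -gt "$nofile" ]; then
        echo "WARN: worker_connections exceeds worker_rlimit_nofile"
        return 1
    fi
    echo "OK"
}

# Usage: check_nginx_limits /etc/nginx/nginx.conf
```

To see what the running service actually got, `systemctl show nginx -p LimitNOFILE` prints the limit systemd applied.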

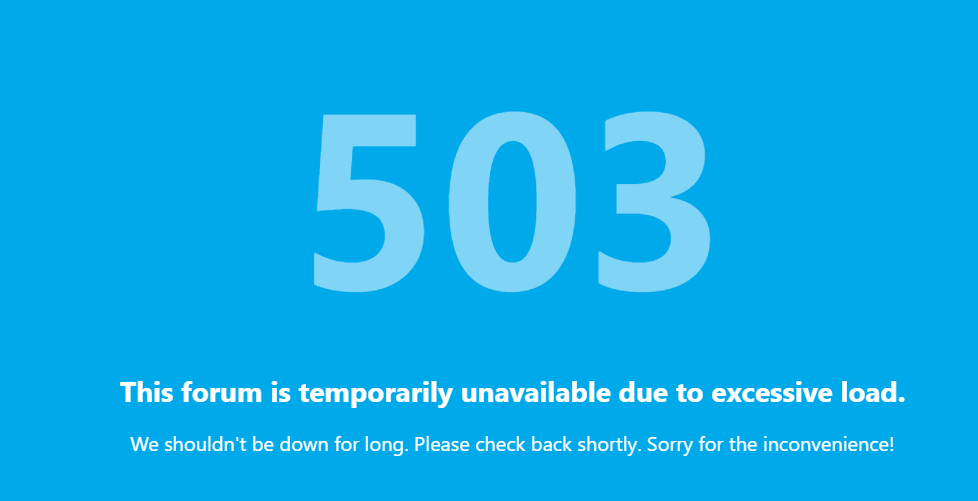

But now I have an intermittent CPU performance problem, which causes NodeBB 503 errors (not nginx) or latency, because Node.js and MongoDB take a lot of CPU.

- Are there tweaks for Node.js, NodeBB, or MongoDB to prevent the influx of users from draining the CPU and RAM?

- If you have better values for the nginx errors (see above) or a better fix, tell me.

Thanks in advance @phenomlab

@DownPW one day a year, we have the same problem in our forum, where several thousand users come online within the same minute and we get similar problems… but we are getting better each year at handling this…

I do not have full technical details about our solution, but I know for sure that having NodeBB listen on three ports helps… 4567, 4568, and 4569… Is your NodeBB set up this way?

-

@crazycells said in NODEBB: Nginx error performance & High CPU:

4567, 4568, and 4569… Is your NodeBB set up this way?

It’s not (I set their server up). Sudonix is not configured this way either, but from memory, this also requires redis to handle the session data. I may configure this site to do exactly that.

-

@phenomlab said in NODEBB: Nginx error performance & High CPU:

It’s not (I set their server up). Sudonix is not configured this way either, but from memory, this also requires redis to handle the session data. I may configure this site to do exactly that.

Yep, it’s not, but it interests me a lot.

I have seen the documentation, but I would have to adapt it to our configuration. Is it really worth doing?

Where do I put the io_nodes directives: in nginx.conf or in the vhost your_website.conf? I think in nginx.conf.

And where do I put the proxy_pass directive: in nginx.conf or in the vhost your_website.conf?

It’s still pretty blurry, but I have only just taken a look at it.

-

@DownPW It’s more straightforward than it sounds, although it can be confusing if you look at it for the first time. I’ve just implemented it here. Can you provide your nginx config and your config.json (remove the password, obviously)? Thanks

-

@phenomlab said in NODEBB: Nginx error performance & High CPU:

@DownPW yes, that was a command line to get you the immediate information you needed. Did you try adding the necessary config to nginx so that it bypasses the reverse proxy?

Nope, because I don’t know what it would be used for.

I access the report just fine without it.

I would just like to use Webmin to generate it automatically every day.

I would just like to settle this permissions thing.

-

@phenomlab said in NODEBB: Nginx error performance & High CPU:

@DownPW It’s more straightforward than it sounds, although it can be confusing if you look at it for the first time. I’ve just implemented it here. Can you provide your nginx config and your config.json (remove the password, obviously)? Thanks

Here is my nginx.conf:

    user www-data;
    worker_processes auto;
    pid /run/nginx.pid;
    worker_rlimit_nofile 70000;
    include /etc/nginx/modules-enabled/*.conf;

    events {
        # worker_connections 768;
        worker_connections 4000;
        #multi_accept on;
        #multi_accept on;
    }

    http {

        ##
        # Basic Settings
        ##

        #client_max_body_size 10M;

        # Maximum requests per IP
        limit_req_zone $binary_remote_addr zone=flood:10m rate=100r/s;

        # Maximum connections per IP
        limit_conn_zone $binary_remote_addr zone=ddos:1m;

        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;
        # server_tokens off;

        # server_names_hash_bucket_size 64;
        # server_name_in_redirect off;

        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        ##
        # SSL Settings
        ##

        ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3; # Dropping SSLv3, ref: POODLE
        ssl_prefer_server_ciphers on;

        ##
        # Logging Settings
        ##

        access_log /var/log/nginx/access.log;
        error_log /var/log/nginx/error.log;

        ##
        # Gzip Settings
        ##

        gzip on;
        gzip_min_length 1000; #test Violence
        gzip_proxied off; #test Violence
        gzip_types text/plain application/xml text/javascript application/javascript application/x-javascript text/css application/json;

        # gzip_vary on;
        # gzip_proxied any;
        # gzip_comp_level 6;
        # gzip_buffers 16 8k;
        # gzip_http_version 1.1;
        # gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

        ##
        # Virtual Host Configs
        ##

        include /etc/nginx/conf.d/*.conf;
        include /etc/nginx/sites-enabled/*;
        server_names_hash_bucket_size 128;
    }

    #mail {
    #    # See sample authentication script at:
    #    # http://wiki.nginx.org/ImapAuthenticateWithApachePhpScript
    #
    #    # auth_http localhost/auth.php;
    #    # pop3_capabilities "TOP" "USER";
    #    # imap_capabilities "IMAP4rev1" "UIDPLUS";
    #
    #    server {
    #        listen localhost:110;
    #        protocol pop3;
    #        proxy on;
    #    }
    #
    #    server {
    #        listen localhost:143;
    #        protocol imap;
    #        proxy on;
    #    }
    #}

And my NodeBB website.conf:

    server {
        server_name XX-XX.net www.XX-XX.net;
        listen XX.XX.XX.X;
        listen [XX:XX:XX:XX::];
        root /home/XX-XX/nodebb;
        index index.php index.htm index.html;
        access_log /var/log/virtualmin/XX-XX.net_access_log;
        error_log /var/log/virtualmin/XX-XX.net_error_log;

        location / {
            limit_req zone=flood burst=100 nodelay;
            limit_conn ddos 10;
            proxy_read_timeout 180;

            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $http_host;
            proxy_set_header X-NginX-Proxy true;

            proxy_pass http://127.0.0.1:4567/;
            proxy_redirect off;

            # Socket.IO Support
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }

        listen XX.XX.XX.XX:XX ssl http2;
        listen [XX:XX:XX:XX::]:443 ssl http2;
        ssl_certificate /etc/ssl/virtualmin/166195366750642/ssl.cert;
        ssl_certificate_key /etc/ssl/virtualmin/166195366750642/ssl.key;
        if ($scheme = http) {
            rewrite ^/(?!.well-known)(.*) "https://XX-XX.net/$1" break;
        }
    }

And my NodeBB config.json:

    {
        "url": "https://XX-XX.net",
        "secret": "XXXXXXXXXXXXXXXX",
        "database": "mongo",
        "mongo": {
            "host": "127.0.0.1",
            "port": "27017",
            "username": "XXXXXXXXXXX",
            "password": "XXXXXXXXXXX",
            "database": "nodebb",
            "uri": ""
        }
    }

-

@DownPW Can you check to ensure that redis-server is installed on your server before we proceed?

-

@phenomlab said in NODEBB: Nginx error performance & High CPU:

@DownPW Can you check to ensure that redis-server is installed on your server before we proceed?

Just this command?

    sudo apt install redis

And what about the performance impact?

-

@DownPW You should use

    sudo apt install redis-server

In terms of performance, your server should have enough resources for this. At any rate, only the session information is stored in redis and nothing else, so it’s essentially only valid for the length of the session and has no impact on the overall site in terms of speed.

-

@DownPW Change your config.json so that it looks like the below. The port array will start three processes (JSON does not allow comments, so keep that note out of the file itself):

    {
        "url": "https://XX-XX.net",
        "secret": "XXXXXXXXXXXXXXXX",
        "database": "mongo",
        "mongo": {
            "host": "127.0.0.1",
            "port": "27017",
            "username": "XXXXXXXXXXX",
            "password": "XXXXXXXXXXX",
            "database": "nodebb",
            "uri": ""
        },
        "redis": {
            "host": "127.0.0.1",
            "port": "6379",
            "database": 5
        },
        "port": ["4567", "4568", "4569"]
    }

Your nginx.conf also needs modification (see commented steps for changes etc):

    # add the below block for NodeBB clustering
    upstream io_nodes {
        ip_hash;
        server 127.0.0.1:4567;
        server 127.0.0.1:4568;
        server 127.0.0.1:4569;
    }

    server {
        server_name XX-XX.net www.XX-XX.net;
        listen XX.XX.XX.X;
        listen [XX:XX:XX:XX::];
        root /home/XX-XX/nodebb;
        index index.php index.htm index.html;
        access_log /var/log/virtualmin/XX-XX.net_access_log;
        error_log /var/log/virtualmin/XX-XX.net_error_log;

        # add the below block which will force all traffic into the cluster when referenced with @nodebb
        location @nodebb {
            proxy_pass http://io_nodes;
        }

        location / {
            limit_req zone=flood burst=100 nodelay;
            limit_conn ddos 10;
            proxy_read_timeout 180;

            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $http_host;
            proxy_set_header X-NginX-Proxy true;

            # Point proxy_pass at the io_nodes upstream so that the clustering works
            # (proxy_pass needs a URL; nginx rejects a named location such as @nodebb here)
            proxy_pass http://io_nodes;
            proxy_redirect off;

            # Socket.IO Support
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }

        listen XX.XX.XX.XX:XX ssl http2;
        listen [XX:XX:XX:XX::]:443 ssl http2;
        ssl_certificate /etc/ssl/virtualmin/166195366750642/ssl.cert;
        ssl_certificate_key /etc/ssl/virtualmin/166195366750642/ssl.key;
        if ($scheme = http) {
            rewrite ^/(?!.well-known)(.*) "https://XX-XX.net/$1" break;
        }
    }

-
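The ports listed in the upstream block have to match the port array in config.json one for one. As a rough sketch (the helper name `render_upstream` is hypothetical, and the output is meant to be pasted by hand), the block can be printed from a list of ports so the two stay in step if a fourth process is added later:

```shell
# Sketch only: print an nginx upstream block for a list of local NodeBB ports.
# The first argument is the upstream name, the rest are ports.
render_upstream() {
    local name="$1"
    shift
    printf 'upstream %s {\n' "$name"
    # ip_hash keeps a given client on the same NodeBB process.
    printf '    ip_hash;\n'
    local port
    for port in "$@"; do
        printf '    server 127.0.0.1:%s;\n' "$port"
    done
    printf '}\n'
}

# Usage: render_upstream io_nodes 4567 4568 4569
```

-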

OK, I have to talk to the staff members first before I do anything.

So there is nothing to put in the vhost XX-XX.net? Everything is in nginx.conf and config.json, if I understand correctly.

In terms of disk space, memory, and CPU, what does it cost?

After all of that, we need to restart NodeBB, I imagine?

Must these new ports (“4567”, “4568”, “4569”) also be opened in the Hetzner interface?

I would like all the details if possible.

-

@DownPW said in NODEBB: Nginx error performance & High CPU:

everything is in the nginx.conf and the config.json if I understand correctly

Yes, that’s correct

@DownPW said in NODEBB: Nginx error performance & High CPU:

In terms of disk space, memory, cpu what does it give ?

There should be little change in terms of the usage. What clustering does is essentially provide multiple processes to carry out the same tasks, but obviously much faster than one process only ever could.

@DownPW said in NODEBB: Nginx error performance & High CPU:

After all of that, we need to restart nodebb I imagine ?

Correct

@DownPW said in NODEBB: Nginx error performance & High CPU:

These new ports (“4567”, “4568”, “4569”) must also be open in the Hetzner interface?

Will not be necessary as they are not available publicly, but only to the reverse proxy on 127.0.0.1

-
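It may be worth confirming on the server which address the three ports are actually bound to. As far as I know, NodeBB listens on all interfaces unless a bind address is set in config.json, in which case the ports are shielded only by the firewall rather than by being local-only. The sketch below (function name made up for illustration) reads `ss -ltn` output and prints any listener on the given ports that is not bound to loopback:

```shell
# Sketch only: list listeners on the given ports that are not loopback-only.
# Reads `ss -ltn` output on stdin; arguments are the ports to look for.
non_loopback_listeners() {
    local ports=" $* "
    awk -v ports="$ports" '
        $1 == "LISTEN" {
            addr = $4                       # Local Address:Port column
            n = split(addr, parts, ":")
            port = parts[n]
            host = substr(addr, 1, length(addr) - length(port) - 1)
            if (index(ports, " " port " ") && host != "127.0.0.1" && host != "[::1]")
                print addr
        }'
}

# Usage: ss -ltn | non_loopback_listeners 4567 4568 4569
```

No output means the ports are reachable from the local machine only.

-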

OK.

To summarize in detail:

1- Stop nodebb

2- Stop iframely

3- Stop nginx

4- Install redis server: sudo apt install redis-server

5- Change the nodebb config.json file (can you verify this syntax please? The NodeBB documentation says “database”: 0 and not “database”: 5, but maybe it’s just a name and I can use the same one as mongo, like “database”: nodebb. I moved the port directive):

    {
        "url": "https://XX-XX.net",
        "secret": "XXXXXXXXXXXXXXXX",
        "database": "mongo",
        "port": [4567, 4568, 4569],
        "mongo": {
            "host": "127.0.0.1",
            "port": "27017",
            "username": "XXXXXXXXXXX",
            "password": "XXXXXXXXXXX",
            "database": "nodebb",
            "uri": ""
        },
        "redis": {
            "host": "127.0.0.1",
            "port": "6379",
            "database": 5
        }
    }

6- Change nginx.conf:

    # add the below block for NodeBB clustering
    upstream io_nodes {
        ip_hash;
        server 127.0.0.1:4567;
        server 127.0.0.1:4568;
        server 127.0.0.1:4569;
    }

    server {
        server_name XX-XX.net www.XX-XX.net;
        listen XX.XX.XX.X;
        listen [XX:XX:XX:XX::];
        root /home/XX-XX/nodebb;
        index index.php index.htm index.html;
        access_log /var/log/virtualmin/XX-XX.net_access_log;
        error_log /var/log/virtualmin/XX-XX.net_error_log;

        # add the below block which will force all traffic into the cluster when referenced with @nodebb
        location @nodebb {
            proxy_pass http://io_nodes;
        }

        location / {
            limit_req zone=flood burst=100 nodelay;
            limit_conn ddos 10;
            proxy_read_timeout 180;

            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $http_host;
            proxy_set_header X-NginX-Proxy true;

            # Point proxy_pass at the io_nodes upstream so that the clustering works
            # (proxy_pass needs a URL; nginx rejects a named location such as @nodebb here)
            proxy_pass http://io_nodes;
            proxy_redirect off;

            # Socket.IO Support
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }

        listen XX.XX.XX.XX:XX ssl http2;
        listen [XX:XX:XX:XX::]:443 ssl http2;
        ssl_certificate /etc/ssl/virtualmin/166195366750642/ssl.cert;
        ssl_certificate_key /etc/ssl/virtualmin/166195366750642/ssl.key;
        if ($scheme = http) {
            rewrite ^/(?!.well-known)(.*) "https://XX-XX.net/$1" break;
        }
    }

7- Restart redis server: systemctl restart redis-server.service

8- Restart nginx

9- Restart iframely

10- Restart nodebb

11- Test configuration

-
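The service steps in that list could be scripted roughly as follows. This is only a sketch: it assumes NodeBB and iframely run as systemd units named `nodebb` and `iframely` (hypothetical names, adjust to however they are started on your server), and the config edits in steps 5 and 6 are still done by hand between the two functions. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
# Sketch only. Assumed unit names: "nodebb" and "iframely" (hypothetical).

run() {
    # With DRY_RUN=1, print the command instead of executing it.
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

cluster_prepare() {
    # Steps 1 to 4: stop the stack, then install redis.
    run sudo systemctl stop nodebb
    run sudo systemctl stop iframely
    run sudo systemctl stop nginx
    run sudo apt install redis-server
}

cluster_bring_up() {
    # Check the edited nginx config before starting anything.
    run sudo nginx -t || return 1
    # Steps 7 to 10.
    run sudo systemctl restart redis-server.service
    run sudo systemctl start nginx
    run sudo systemctl start iframely
    run sudo systemctl start nodebb
}

# Usage: DRY_RUN=1 cluster_prepare
```

-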

@DownPW said in NODEBB: Nginx error performance & High CPU:

5- Change the nodebb config.json file (can you verify this syntax please? The NodeBB documentation says “database”: 0 and not “database”: 5,

All fine from my perspective. There’s no real need to stop iFramely, but OK. The database number doesn’t matter as long as it’s not being used. You can use 0 if you wish. It’s in use on my side, hence 5.

-

@DownPW I’d add another set of steps here. When you move the sessions away from mongo to redis, you are going to encounter issues logging in, as the session tables will no longer match, meaning none of your users will be able to log in.

To resolve this issue:

Review https://sudonix.com/topic/249/invalid-csrf-on-dev-install and implement all steps. Note that you will also need the below string when connecting to mongodb:

    mongo -u admin -p <password> --authenticationDatabase=admin

Obviously, substitute <password> with the actual value. So in summary:

- Open the mongodb console
- Select your database - in my case use sudonix;
- Issue this command: db.objects.update({_key: "config"}, {$set: {cookieDomain: ""}});
- Press enter and then type quit() into the mongodb shell
- Restart NodeBB
- Clear cache on browser
- Try connection again

-

Hmm OK, when should I perform these steps?

-

@DownPW After you’ve set up the cluster and restarted NodeBB.

-

@phenomlab said in NODEBB: Nginx error performance & High CPU:

It’s not (I set their server up). Sudonix is not configured this way either, but from memory, this also requires redis to handle the session data. I may configure this site to do exactly that.

Yes, you might be right about the necessity. We have redis installed.

-

@DownPW yes, this is the place to start:
