Blog

Articles written by the founder, and by other members of this platform. We actively encourage blog articles of your own, but each one is subject to moderator review before it is released.

Ever since the first computer was created and made available to the world, technology has advanced at an incredible pace. Even before the World Wide Web became the common platform it is today, there were innovators. Some of them faded into obscurity before their idea ever made it into the mainstream - for example, Sir Clive Sinclair’s ill-fated C5, effectively the prehistoric Segway of the 1980s, which ended up in receivership after falling short of both its sales forecasts and the enthusiasm of the general public, with concerns mostly centred on safety, practicality, and its notoriously short battery life. Sinclair had a keen interest in battery-powered vehicles, and whilst his idea seemed outlandish in the 1980s, if you look at the electric car market today, he was actually a groundbreaking pioneer.

The Technology Revolution

The next revolution in technology was, without doubt, the World Wide Web. Created in 1989 by Sir Tim Berners-Lee whilst he was working at CERN in Switzerland, it used a new markup language called HTML. This new technology, coupled with the Mosaic browser, formed the basis of the web as we know it today. With the dot com bubble lasting from 1995 until it finally burst in 2001, the huge wave of interest in this new transport and communication phenomenon meant that new ideas came to life. Ideas previously considered inconceivable became probable, and then reality, as technology gained significant ground and funding from a variety of sources. One of the earliest companies to bet on the internet was Cisco. Despite losing almost 86% of its market value during the dot com fallout, it managed to cling on, and it now provides much of the underpinning technology and infrastructure that makes the web function. In a similar vein, eBay and Amazon were early products of the dot com boom, and both managed to stay afloat during the crash. Amazon is the huge success story that went from simply selling books to being one of the largest technology firms in its space, pioneering services such as Amazon Web Services, which effectively destroyed the physical data centre as organisations moved their operations to cloud-based environments at an unstoppable rate.

With the rise of the internet came the rise of automation and Artificial Intelligence. Early technological advances and revolutionary ideas arrived in the form of self-service ATMs, analogue cell phones, credit card transaction processing (data warehouses), and improvements to home appliances, all designed to make our lives easier. Whilst it’s undisputed that technology has immensely enriched our lives and allowed us to achieve feats of engineering and construction that Brunel could only have dreamed of, the technology evolution wheel now spins at an alarming rate. When it first launched, the mobile phone was the size of a house brick and had an antenna that made putting it in your pocket impossible unless you wore a trench coat. Newer iterations of the same technology saw analogue move to digital, and the cell phone shrink to the size of a Mars bar. As with all technology advances, the first generation was rapidly left behind, with 3G and then 4G becoming the mainstream, accepted standard (and 5G in the final stages before release). Along with the improved accessibility of mobile networks came the smartphone, an idea popularised in 2007 by Steve Jobs with the arrival of the original iPhone (often called the iPhone 2G). This technology brand rocketed in popularity and rapidly became the most sought-after technology in the world, thanks to its co-founder’s insight. Since 2007, we’ve seen several new iPhone and iPad models surface - as of now, up to the iPhone X. In 2008, the competing Android platform arrived on its first device, running version 1.0. Fast forward nearly a decade and the most recent release is Oreo (8.0). The era of smartphones and higher-capacity networks made it possible to communicate in new ways that were previously inaccessible. From instant messaging to video calls on your smartphone, plus a wealth of applications designed to provide both entertainment and enhanced functionality, technology was now at the forefront and a major component of everyday life.

The brain’s last stand?

The rise of social media platforms such as Facebook and Twitter took communication to a new level, creating a playing field for technology to further enrich how we interact with others and the information we share on a daily basis. However, to fully understand how Artificial Intelligence came to have such a dramatic impact on our lives, we need to step back to 1950, when Alan Turing proposed what became known as the Turing Test. Probably the defining moment in Artificial Intelligence history came in 1997, when reigning world chess champion Garry Kasparov played the supercomputer Deep Blue - and lost. Not surprising when you consider that the IBM-built machine could evaluate 200 million positions per second. The question was, could it cope with strategy? The clear answer was yes. Dubbed “the brain’s last stand”, this match set Artificial Intelligence on an inevitable path to new heights. The US military had attempted to use AI during the Cold War, although this amounted to virtually nothing. However, interest in the progress of Artificial Intelligence rose quickly, and development was taken seriously with a range of autonomous robots. BigDog, developed by Boston Dynamics, was one of the first. It was designed to operate as a robotic pack animal in terrain considered inaccessible to standard vehicles, although it never actually saw active service. iRobot also gained popularity and became a major player in this area. Its bomb disposal robot, PackBot, combines human control with artificial intelligence capabilities such as explosives sniffing. As of today, over 2000 PackBot units have been deployed in Iraq and Afghanistan to tackle IEDs safely and prevent loss of human life.

Never heard of Boston Dynamics? Here’s a video that’ll give you an insight into one of their latest creations.

In 2011, in a contest similar to the Kasparov match, IBM again unveiled its latest technology (Watson), which took on the human brain once more - this time on the US quiz show Jeopardy. Watson was “trained” for three years to take on this challenge and, using a complex set of algorithms and machine learning, trounced its human opposition (two of the show’s smartest contestants) and stole the show. The result quickly went viral and established Artificial Intelligence as a prominent technology that had advanced to the point where, in this arena at least, it had proved superior to the human brain. 2014 saw the introduction of driverless vehicles that used Artificial Intelligence to make decisions based on road and traffic conditions. Both Tesla and Google are major players in this area - Tesla more so of late. I’ve previously written an article about the use of Artificial Intelligence in driverless vehicles that can be found here.

The Facebook Experiment

In the same year, Tim Berners-Lee himself said that machines were getting smarter, but humans were not. He also stated that computers would end up communicating with each other in their own language. Given the Facebook AI experiment conducted this year, that prediction appears to have been borne out. Here’s an excerpt from the exchange between two bots named Alice and Bob.

Bob: i can i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i i can i i i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i . . . . . . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i i i i i everything else . . . . . . . . . . . . . .

Alice: balls have 0 to me to me to me to me to me to me to me to me to

Bob: you i i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Whilst this exchange was difficult to decipher from a human perspective and looked like complete gibberish (you could draw some parallel with The Chuckle Brothers on LSD), the bots in this experiment had actually taken the decision to communicate in a bespoke shorthand as a means of making the exchange more efficient. The problem with this approach was that the bots were designed to communicate with humans, not with each other. AI decision making isn’t anything new either. Google Translate actually converts raw input into its own internal machine representation before producing a translation. The developers at Google noticed this, but were happy for it to continue as it made the AI more effective. Now for the reality check. Is this acceptable when AI is supposed to enhance something rather than effectively exclude humans from the process? The idea here is interaction. There are a lot of rumours circulating on the internet about Facebook’s decision to pull the plug. Was it out of fear, or did the scientists simply decide to abandon the experiment because it didn’t produce the desired result?

The future of AI - and human existence?

A more disturbing point is that the AI appears to have had control of the decision-making process, and did not need a human to choose or approve any request. We’ve actually had basic AI for many years in the form of speech recognition when calling a customer service centre, or unattended help bots on websites that can answer basic questions quickly and effectively - and when they cannot answer, they have the intelligence to route the question elsewhere. But what happens if you take AI to a new level and give it control of something far more sinister, like military capabilities? Here’s a video outlining how one particular scenario involving autonomous weapons could play out if we continue down the path of ignorance. Whilst it seems like Hollywood, the potential is very real and should be taken seriously. In fact, this particular footage, whilst fiction, has been taken very seriously by the campaign to ban autonomous weapons and the use of AI in their deployment, a cause that names such as Elon Musk and Stephen Hawking have strongly backed.

Does this strike a chord with you? Is this really how we are going to allow AI to evolve? We’ve had unmanned drones for a while now, and whilst they are effective at providing a mechanism for surgical strikes on a particular target, they are still controlled by humans who can override them and ultimately decide when to fire. The real question here is just how far we want to take AI in terms of autonomy and decision making. Do we want AI to enrich our lives, or to assume our identities and allow the human race to slip into oblivion? If we allow this to happen, where does our purpose lie, and what function would humanity then provide that AI can’t? People need to realise that as soon as we fully embrace AI and give it control over aspects of our lives, we will effectively end up working for it rather than it working for us. Is AI going to pay for your pension? No, but it could certainly replace your existence.

In addition, how long before that “intelligence” decides you are superfluous to requirements? Sounds very “Hollywood”, I’ll admit, but there really needs to be a clear boundary between what the human race accepts as progress and what amounts to elimination. Have I been watching too many Terminator films? No. I live technology and fully embrace it, but replacing the human way of life with machines is not the answer to the world’s problems - only the downfall. It’s already started with driverless cars, and will only get worse - if we allow it.

16 days ago

Microsoft’s new Recall feature, which regularly takes screenshots of your activity, is such a bad idea in terms of privacy and security that the ICO has already begun an investigation. And clearly for very good reason.

https://news.sky.com/story/microsoft-ai-feature-investigated-by-uk-watchdog-over-screenshots-13141171

Any nefarious actor with access to the target machine could easily retrieve these screenshots and use them to exploit a victim directly. This is literally like leaving the keys under the mat, with all your personal information in clear view and ripe for the picking.

This is nothing more than spyware in my view and should be stopped.

What’s your view?

12 Apr 2025, 13:23

In a significant move towards modernization, Japan is finally phasing out the use of floppy disks in its governmental and administrative processes. This step is part of a broader effort to streamline and digitize operations, marking the end of an era for a technology that has long been outdated in most parts of the world.

Floppy disks, once a ubiquitous medium for data storage in the 1980s and 1990s, have been largely obsolete for years, replaced by more efficient and higher-capacity storage solutions like USB drives, cloud storage, and solid-state drives. However, Japan has continued to rely on these antiquated devices, especially within various government departments and public services.

This decision to eliminate floppy disks is driven by the need to enhance efficiency, security, and reliability in data management. Floppy disks are prone to physical damage and data degradation over time, making them a less secure option for storing important information. Additionally, the availability of devices capable of reading floppy disks has diminished, further complicating their continued use.

The push for digital transformation in Japan has been gaining momentum, particularly under the leadership of Digital Minister Taro Kono. Kono has been a vocal advocate for reducing bureaucratic red tape and accelerating the adoption of digital technologies across the government. His efforts aim to not only improve internal processes but also to make government services more accessible and user-friendly for the public.

The transition away from floppy disks is part of a broader initiative to overhaul Japan’s digital infrastructure. This includes promoting the use of online forms and digital signatures, which can significantly reduce paperwork and the time required to process documents. By embracing modern technology, Japan hopes to improve the efficiency of its public services and better serve its citizens in an increasingly digital world.

While the move to retire floppy disks might seem overdue to some, it reflects a larger trend of technological updates within government systems worldwide. Many countries are grappling with similar challenges as they work to modernize legacy systems that were once state-of-the-art but have since become inefficient and outdated.

Japan’s decision to bid farewell to the floppy disk is a symbolic and practical step forward. It underscores the nation’s commitment to digital innovation and sets the stage for further advancements in how the government interacts with and serves its people. As Japan continues to embrace new technologies, it is poised to enhance its administrative capabilities and pave the way for a more efficient and connected future.

6 Mar 2025, 01:04

For ages now, I’ve been highly suspicious of the frankly awful battery life on my OnePlus 9 Pro. Over time, the battery life has become hugely problematic, and on average I get around half a day of use before I need to charge it again. Yes, I’m a power user, but I don’t use this device for video streaming or games, so it’s not as though I’m asking the earth in terms of reliability and performance.

As my contract is coming up for renewal, I started looking at the OnePlus 11, but given its outrageous price tag, I decided to look elsewhere, and am currently waiting for a Samsung S23 Plus to be delivered (hopefully tomorrow).

It seems this has been a known issue since 2021, when it was discovered that OnePlus deliberately “throttles” the performance of apps such as WhatsApp, Twitter, and Discord (in fact, most of them) in order to “improve” battery life on the OnePlus 9 and 9 Pro.

There are two articles I came across that discuss the same topic.

https://www.xda-developers.com/oneplus-exaplains-oneplus-9-cpu-throttling

https://www.theverge.com/2021/7/8/22568107/oneplus-9-pro-battery-life-app-throttling-benchmarks

Having to discover this after you buy a supposedly “premium” handset is bad enough, but OnePlus themselves kept this quiet and told nobody. To my mind, this is totally unacceptable behaviour, and it means I will not use this brand going forward. The last time I had a Samsung, it was my trusty S2, which kept going until a hardware failure finally meant it wouldn’t boot anymore. I could have re-flashed the ROM, but instead consigned it to the bin with full military honours.

And so, here I am again back on the Samsung trail. Sorry OnePlus, but this is a bridge too far in my view. I faithfully used your models throughout the years, and my all-time favourite was easily the OnePlus 6T - a fantastic device. I only upgraded “because I could”, and now I’m sorry I did.

Here’s to a (hopefully) happy ever after with Samsung.

11 Feb 2025, 13:04

I expect that by now, everyone has seen the new Apple commercial. For those who haven’t, here you go.

Unsurprisingly, this video has attracted a lot of criticism for its content. I for one will NEVER buy into the Apple ecosystem and have always preferred Android devices - for that reason alone, I’m unlikely ever to be a customer. However, even setting that aside, in my view this advert is in extremely poor taste.

If you watch the advert, you’ll see where I’m going with this. These are creations handed down through generations, and they are being crushed to form an iPad at the end. Without these very creations, and the genius that accompanied them, our lives would not be what they are today. Technology enriches lives, sure, but it is no match for the feeling and emotion of a human playing a piano or guitar, for example. For centuries, masterpieces have been handcrafted on musical instruments by past artists (think of all the great composers before our time who shaped the music we enjoy so much today), and yet these unique creations are being crushed as though they mean absolutely nothing and can easily be replaced. Even the humble metronome has not escaped.

The same could be said for those amazing analogue cameras, the retro arcade machines (that I loved as a child), and the record players (especially given that vinyl is enjoying something of a resurgence). And the bust? Don’t get me started on this one. Seeing centuries of beautiful artefacts destroyed in 1 minute and 8 seconds is beyond comprehension in my view.

What this DOES do very well is create the false impression that it’s ok to completely crush important creations, dump all of them in landfill without repurposing or recycling anything, and replace what should have been left as analogue with a digital “equivalent”. As everyone with a musical ear knows, vinyl records, for example, capture exactly the sound the artist wanted you to hear - not a digitally cleaned and “enhanced” track. I recall listening to early albums in mono and, later in life, their stereo “equivalents”, which never sounded the same and lost their uniqueness.

Sorry Apple, but this video is just plain WRONG. Whoever in your marketing department decided this was a good idea should be immediately fired. I honestly cannot think of a worse way to show complete contempt for all the amazing creations this advert completely destroys in just over one minute.

Bad move, Apple. Bad move. You have just alienated most of your existing user base with this extremely poor judgement. If you have any sense, you’ll pull this ad immediately and re-think your strategy.

Instead of destroying all of the things that today’s technology is based on, why not pay some form of appropriate homage instead?

13 Aug 2024, 15:55

According to various news outlets, Julian Assange has been freed, and is currently on a layover in Bangkok, awaiting onward travel to his final destination, Australia (his country of birth).

https://news.sky.com/story/julian-assange-will-not-be-extradited-to-the-us-after-reaching-plea-deal-13158340

Who is Julian Assange?

Julian Assange is an Australian editor, publisher, and activist best known as the founder of WikiLeaks, a platform that publishes classified and sensitive information provided by anonymous sources. Assange’s work with WikiLeaks has made him a polarizing figure; he is hailed by supporters as a champion of transparency and press freedom, while critics accuse him of jeopardizing national security and diplomatic relations. In 2012, facing extradition to Sweden over sexual assault allegations, Assange sought asylum in the Ecuadorian Embassy in London, where he remained until his arrest in April 2019. Until recently, he was fighting extradition to the United States, where he faced charges under the Espionage Act.

Who are WikiLeaks?

Established in 2006, WikiLeaks gained global attention in 2010 when it released a massive trove of U.S. military documents and diplomatic cables provided by U.S. Army intelligence analyst Chelsea Manning, including the Collateral Murder video, which showed a U.S. helicopter attack in Baghdad. These disclosures sparked significant controversy, revealing previously undisclosed information about military operations, government surveillance, and diplomatic affairs. Assange’s actions have led to a polarized global debate over issues of transparency, national security, and press freedom.

Should Assange be freed?

The question of whether Julian Assange should be freed is highly contentious and involves several complex factors, including legal, ethical, and political considerations.

Arguments for his release:

Freedom of the Press: Supporters argue that Assange’s actions with WikiLeaks are a form of journalistic activity aimed at promoting transparency and holding governments accountable. They believe prosecuting him sets a dangerous precedent for press freedom and the rights of journalists worldwide.

Human Rights Concerns: Advocates for Assange highlight concerns over his mental and physical health, exacerbated by prolonged confinement. They argue that continued detention is inhumane and call for his release on humanitarian grounds.

Whistleblower Protection: Some view Assange as a whistleblower who exposed important information in the public interest. They argue that instead of punishment, he deserves protection for unveiling government and corporate misconduct.

Arguments against his release:

Legal Accountability: Critics argue that Assange should face the legal consequences of his actions, particularly the charges of espionage and computer intrusion in the United States. They contend that his releases endangered lives and compromised national security.

Rule of Law: Opponents emphasize the importance of upholding the rule of law. They argue that regardless of the nature of the leaks, Assange must be held accountable for any illegal activities, including hacking and handling classified information without authorization.

Impact on National Security: Governments and security experts argue that the indiscriminate release of classified documents can have severe repercussions, including compromising intelligence operations and putting lives at risk. They believe that his prosecution is necessary to deter future leaks that could threaten national security.

My view is that Julian Assange should not have been freed, but should instead have been made to face the consequences of his actions. You can easily argue that what he did was in the public interest, but when doing so directly impacts the security of a nation and the safety of individuals, it should be regarded as a criminal offence - in fact, it arguably amounts to treason. Again, you could argue that as an Australian national, he did not sell out his own country. However, while the specific charge of treason usually applies to nationals, countries have legal mechanisms to address and prosecute harmful actions by non-nationals that threaten their security and interests.

The United States sought the extradition of Julian Assange primarily due to his role in obtaining and publishing classified information through WikiLeaks. The specific reasons included:

Espionage and Theft of Classified Information: Assange was charged under the Espionage Act for conspiring to obtain and release classified documents. The charges relate to the publication of sensitive military and diplomatic files that were provided to WikiLeaks by Chelsea Manning, a former U.S. Army intelligence analyst.

Compromising National Security: The U.S. government argued that the publication of these documents endangered lives, compromised national security, and threatened the safety of U.S. personnel and allies. The documents released included detailed records of military operations in Iraq and Afghanistan, as well as diplomatic cables.

Computer Intrusion: Assange faced charges of conspiracy to commit computer intrusion, stemming from allegations that he assisted Manning in cracking a password to gain unauthorized access to U.S. government computers.

Precedent for Handling Leaks: The extradition and prosecution of Assange were seen as a means to set a legal precedent and deter future unauthorized disclosures of classified information. The U.S. government aimed to demonstrate the serious consequences of leaking sensitive materials - effectively an attempt to “make an example” of him.

The U.S. extradition request was part of broader efforts to address the implications of WikiLeaks’ activities on national security and the protection of classified information. The case sparked significant debate over issues of press freedom, the public’s right to know, and the boundaries of investigative journalism.

What do you think? I’m really interested in views and opinions here. I, of course, have my own view that he should not have been freed, but made to face the consequences.

13 Aug 2024, 02:51

During an unrelated discussion today, I was asked why I preferred Linux over Windows. The most obvious responses are that Linux has no licensing costs (perhaps not entirely the case with RHEL) and is capable of running on hardware much older than Windows 10 will readily accept (or run on without acting like a snail). The other selling point for Linux is that it’s the backbone of most web servers these days, which typically run either Apache or NGINX.

The remainder of the discussion centred on the points below:

Linux is pretty secure out of the box (based on the fact that most distros update as part of the install process), whilst Windows, well, isn’t. Admittedly, there’s an argument for both sides of the fence here, the most common being that Windows is more of a target because of its popularity and market presence. In other words, malware, ransomware, and “whatever-other-nasty-ware” (you fill in the blanks) are typically designed for the Windows platform in order to maximise the hit rate of any campaign.

Windows also ships as one large, fixed installation regardless of the hardware it sits on. Linux, by contrast, loads kernel modules only for the hardware actually present (and you can compile your own kernel if you want to strip it down further), so there’s less “bloat”. You are also free to modify the system directly if you don’t like the layout or design that the developer provided.

Linux is far superior in the security space. Windows only acquired “Run as” in Windows 2000, and a “reasonable” UAC environment with Windows Vista (and “reasonable” is a loose description of the Vista implementation). Microsoft were very slow out of the gate with this - Unix has had equivalent privilege separation for years.

Possibly the most glaring security hole in Windows systems is that an NTFS volume can easily be read from Linux (whereas Windows cannot natively read Linux’s ext file systems), so anyone who can boot a Linux live USB on the machine can browse an unencrypted Windows disk. And let’s not forget that it’s a simple exercise to break into the SAM database of a Windows install from Linux and reset the local admin account.

Linux enjoys an open source community where issues reported are often picked up extremely quickly by developers all over the world, resolved, and an update issued to multiple repositories to remediate the issue.

Windows cannot readily be run live from a DVD or thumb drive. Want to use it? You’ll have to install it.

Linux isn’t perfect by any stretch of the imagination, but I for one absolutely refuse to buy into the Microsoft ecosystem on a personal level - particularly using an operating system that by default doesn’t respect privacy. And no prizes for guessing what my take on Apple is - it’s essentially BSD in an expensive suit.

However, since COVID, I have in fact been using Windows 11 at home, but that’s only for the integration. If I had the choice, I would be using Linux. There are a number of applications I’d consider core that just do not work properly under Linux, and that’s the only real reason why I made the decision (somewhat resentfully) to move back to Windows on the home front.

Here’s a thought to leave you with. How many penetration testers do you know who use Windows for vulnerability assessments?

This isn’t meant to be an “operating system war”. It’s a debate.

6 Aug 2024, 18:53

This is a topic close to my heart, and one of the (many) reasons why I founded sudonix.org in the first place. Below, I set out why forums still have their place in society, and why social media is generally a bad idea in terms of data privacy. Here at Sudonix, you have complete control.

Why Forums Are Still Relevant in 2024

In the fast-paced digital age of 2024, forums continue to hold their ground as significant platforms for online communities, despite the overwhelming popularity of social media. While social media platforms have become the go-to for quick interactions and broad outreach, forums provide unique advantages that maintain their relevance. This article explores why forums still matter today and the pitfalls of social media that forums effectively mitigate.

The Unique Strengths of Forums

Focused and In-Depth Discussions

Forums are designed for detailed, topic-focused discussions. Unlike social media, where conversations can quickly veer off course, forums keep discussions organized within threads. This structure allows users to dive deep into specific topics, whether they’re discussing advanced programming techniques, niche hobbies, or troubleshooting technical issues. The depth and focus of these conversations are unmatched by the often shallow and fragmented nature of social media interactions.

Knowledge Preservation and Accessibility

The archival nature of forums ensures that valuable information is preserved and easily accessible. Users can search through years of discussions to find solutions to problems, tutorials, and expert advice. This persistent repository of knowledge contrasts sharply with social media, where content rapidly becomes buried under a constant influx of new posts.

Community Building and Support

Forums foster strong, tight-knit communities. Members often share a common interest, leading to a sense of belonging and mutual support. Whether it’s a forum dedicated to gardening, vintage car restoration, or software development, the sense of camaraderie and shared purpose can be profound. These communities often provide peer support that is more focused and reliable than what is typically found on social media platforms.

Quality Control and Moderation

Effective moderation is a hallmark of successful forums. Dedicated moderators ensure that discussions stay on topic and maintain a respectful tone, significantly reducing spam and off-topic content. This level of quality control creates a more pleasant and productive environment for users, something that is often lacking on social media platforms plagued by trolling and irrelevant posts.

Privacy and Anonymity

Forums often offer users the ability to remain anonymous or use pseudonyms. This can encourage more open and honest discussions, especially on sensitive topics. In contrast, social media platforms frequently require real-name policies and track user activity for targeted advertising, raising significant privacy concerns.

The Downsides of Social Media

While social media has largely replaced forums for many casual interactions, it comes with notable downsides:

Data Monetization and Privacy Concerns

One of the biggest criticisms of social media platforms is their business model, which heavily relies on the monetization of user data. Personal information, browsing habits, and interactions are tracked and sold to advertisers, often without explicit user consent. This has led to growing concerns over privacy and the ethical use of personal data.

Shallow Interactions

Social media is designed for quick, fleeting interactions. The focus on short-form content and instant gratification can lead to shallow conversations that lack depth. Important discussions often get lost in the noise, and the fast-paced nature of social media can make it difficult to have meaningful, sustained interactions.

Algorithmic Control

Social media platforms use algorithms to control what users see, often prioritizing content that drives engagement rather than what is necessarily valuable or informative. This can create echo chambers, where users are only exposed to information that reinforces their existing beliefs, limiting exposure to diverse perspectives.
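To make the contrast concrete, here is a minimal Python sketch of the difference between a forum-style chronological thread and an engagement-ranked feed. The `Post` fields and the weights in `engagement_score` are hypothetical and purely illustrative. Real platforms' ranking systems are proprietary and far more complex.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    posted_at: int  # e.g. a Unix timestamp
    likes: int
    replies: int
    shares: int


def chronological(posts: list[Post]) -> list[Post]:
    """Forum-style ordering: oldest first, exactly as the thread unfolded."""
    return sorted(posts, key=lambda p: p.posted_at)


def engagement_score(post: Post) -> float:
    """Hypothetical score: the weights are illustrative, not any platform's real formula."""
    return 1.0 * post.likes + 2.0 * post.replies + 3.0 * post.shares


def engagement_ranked(posts: list[Post]) -> list[Post]:
    """Feed-style ordering: whatever drives the most interaction floats to the top."""
    return sorted(posts, key=engagement_score, reverse=True)


if __name__ == "__main__":
    thread = [
        Post("Detailed troubleshooting guide", posted_at=100, likes=5, replies=2, shares=0),
        Post("Provocative hot take", posted_at=200, likes=50, replies=30, shares=20),
        Post("Follow-up question", posted_at=300, likes=1, replies=0, shares=0),
    ]
    print([p.title for p in chronological(thread)])
    print([p.title for p in engagement_ranked(thread)])
```

With these made-up numbers, the provocative post jumps to the top of the ranked feed regardless of when it was posted, while the detailed guide is pushed down.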

Trolls and Toxicity

The relatively low barrier to entry, combined with how easy it is to create throwaway accounts on many social media platforms, can lead to toxic behaviour, trolling, and harassment. While forums are not immune to these issues, their structure and moderation practices often provide a better defence against such negative interactions.

The Complementary Roles of Forums and Social Media

While social media and forums serve different purposes, they can complement each other effectively. Social media is excellent for real-time updates, broad outreach, and casual interactions. In contrast, forums excel in fostering in-depth discussions, preserving knowledge, and building strong communities.

Conclusion

In 2024, forums continue to be relevant because they offer a unique blend of focused discussions, knowledge preservation, community support, and privacy. These strengths address many of the shortcomings of social media, making forums indispensable for many users. As the digital landscape evolves, forums and social media will likely continue to coexist, each serving its unique role in the online ecosystem.

Blog

6 Aug 2024, 18:40

In a major victory for cybersecurity, international law enforcement agencies have dismantled the notorious 911 S5 botnet. This botnet, which had been a significant player in the cybercrime landscape, was responsible for orchestrating numerous malicious activities, including Distributed Denial of Service (DDoS) attacks, data theft, and financial fraud. The takedown marks a crucial step in the ongoing battle against cybercriminal networks.

The Rise of the 911 S5 Botnet

The 911 S5 botnet, named after the “911” premium proxy service it provided, was a large and sophisticated network of compromised computers. Botnets like 911 S5 are typically created by infecting devices with malware, which then allows cybercriminals to control these devices remotely. This botnet was particularly notorious for its ability to provide proxy services to other criminals, enabling them to mask their identities and locations while conducting illicit activities.

The botnet’s infrastructure was vast: according to the US Department of Justice, it spanned more than 19 million unique IP addresses worldwide. The compromised devices were often unwittingly recruited through phishing campaigns, malicious downloads, or exploitation of software vulnerabilities. Once compromised, the devices became part of the botnet, executing commands from its controllers without the knowledge of their legitimate owners.

The Impact of 911 S5

The 911 S5 botnet’s primary function was to serve as a proxy network, allowing criminals to reroute their internet traffic through compromised devices. This service was highly sought after in the cybercrime community because it provided an additional layer of anonymity. Criminals could use the botnet to carry out various illegal activities, including:

DDoS Attacks: By overwhelming targeted systems with traffic from numerous infected devices, the botnet could render websites and online services unusable.

Data Theft: The botnet facilitated the theft of sensitive information, including personal data and financial credentials, which could be sold on the dark web.

Financial Fraud: Cybercriminals used the botnet to engage in fraudulent transactions, such as unauthorized online purchases and bank fraud.

The economic impact of these activities was substantial, affecting businesses and individuals alike. The botnet’s operations also posed significant challenges for cybersecurity professionals, who had to constantly adapt to its evolving tactics.

The Takedown Operation

The successful takedown of the 911 S5 botnet was the result of a coordinated effort involving multiple international law enforcement agencies, cybersecurity firms, and researchers. The operation, which took several months of planning and execution, targeted the botnet’s command and control infrastructure.

Key elements of the takedown included:

Intelligence Gathering: Investigators collected data on the botnet’s operations, infrastructure, and the individuals behind it. This involved monitoring the botnet’s activities and analyzing its command and control servers.

Legal Actions: Authorities obtained the necessary legal permissions to disrupt the botnet’s operations. This included warrants to seize servers and arrest individuals involved in running the botnet.

Technical Measures: Cybersecurity experts worked to neutralize the malware infecting the compromised devices, effectively cutting off the botnet’s control over these machines.

The takedown operation culminated in the seizure of servers and the arrest of several key individuals believed to be behind the 911 S5 botnet. These actions significantly disrupted the botnet’s operations, rendering it inoperable and cutting off the services it provided to cybercriminals.

Implications and Future Outlook

The dismantling of the 911 S5 botnet represents a significant achievement in the fight against cybercrime. It sends a strong message to cybercriminals that international cooperation and advanced cybersecurity techniques can effectively combat even the most sophisticated threats, and law enforcement will eventually catch up with the perpetrators of these attacks.

However, the fight against botnets and cybercrime is far from over. As long as there are vulnerabilities in software and systems, cybercriminals will continue to exploit them. The takedown of the 911 S5 botnet underscores the importance of ongoing vigilance, collaboration, and innovation in cybersecurity.

Moving forward, it is crucial for individuals and organizations to adopt robust cybersecurity practices, including regular software updates, employee training, and the use of advanced security tools. Law enforcement agencies and cybersecurity professionals must continue to work together, sharing intelligence and resources to stay ahead of evolving cyber threats.

In conclusion, the takedown of the 911 S5 botnet is a landmark achievement in the battle against cybercrime. It not only disrupts a major criminal enterprise but also serves as a reminder of the importance of vigilance, cooperation, and proactive measures in securing the digital landscape.

Blog

30 May 2024, 12:36

The European Union has initiated a significant antitrust probe into three of the world’s most influential tech companies: Apple, Meta (formerly Facebook), and Google’s parent company Alphabet. This move underscores the EU’s growing scrutiny of big tech firms and their market dominance, signalling a potentially pivotal moment in the regulation of digital platforms.

The investigation, announced by the European Commission, focuses on concerns regarding the companies’ practices in the digital advertising market. Margrethe Vestager, the EU’s Executive Vice-President for A Europe Fit for the Digital Age, emphasized the importance of fair competition in the digital sector and the need to ensure that dominant players do not abuse their power to stifle innovation or harm consumers.

At the heart of the probe lies the question of whether Apple, Meta, and Google have violated EU competition rules by leveraging their control over user data and digital advertising to gain an unfair advantage over competitors. These companies wield enormous influence, with vast user bases and access to extensive troves of personal information, which they use to target advertisements with remarkable precision.

Apple, through its iOS platform, has introduced privacy measures such as App Tracking Transparency, which allows users to opt out of being tracked across apps for advertising purposes. While these measures have been lauded for enhancing user privacy, they have raised concerns among app developers and advertisers who rely on targeted advertising for revenue. The EU investigation will likely examine whether Apple’s privacy measures unfairly disadvantage competitors in the digital advertising ecosystem.

Meta, the parent company of social media behemoths like Facebook, Instagram, and WhatsApp, faces scrutiny over its data practices and alleged anti-competitive behaviour. The company’s vast user base and comprehensive user profiles make it a dominant player in the digital advertising market. However, Meta has faced criticism and regulatory challenges over issues ranging from data privacy to its handling of misinformation and hate speech on its platforms.

Google, with its ubiquitous search engine and digital advertising services, also comes under the EU’s microscope. The tech giant’s control over online search and advertising infrastructure gives it immense power in the digital economy. Concerns have been raised about Google’s practices regarding the use of data, the display of search results, and its dominance in the online advertising market.

The EU’s investigation into these tech giants reflects a broader global trend of increased scrutiny and regulatory action targeting big tech companies. Governments and regulatory bodies worldwide are grappling with how to rein in the power of these corporate giants while fostering competition and innovation in the digital economy.

Antitrust investigations and regulatory actions have become more common, with tech companies facing fines, lawsuits, and calls for structural reforms. In the United States, lawmakers and regulators have also intensified their scrutiny of big tech, with antitrust lawsuits filed against companies like Google and Meta.

The outcome of the EU’s investigation could have far-reaching implications for the future of digital competition and regulation. If the European Commission finds evidence of anti-competitive behavior or violations of EU competition rules, it could impose significant fines and require changes to the companies’ business practices. Moreover, the investigation could prompt broader discussions about the need for new regulations to address the unique challenges posed by the digital economy.

In response to the EU’s investigation, Apple, Meta, and Google have stated their commitment to complying with EU competition rules and cooperating with the European Commission’s inquiry. However, the tech giants are likely to vigorously defend their business practices and challenge any allegations of anti-competitive behavior.

As the digital economy continues to evolve and reshape industries and societies worldwide, the regulation of big tech companies will remain a contentious and complex issue. The EU’s antitrust investigation into Apple, Meta, and Google underscores the growing recognition among policymakers of the need to ensure that digital markets remain fair, competitive, and conducive to innovation.

Blog

28 Mar 2024, 01:17

Blog

15 Mar 2024, 20:03

Those in the security space may already be aware of the secure DNS service provided by Quad9. For those who have not heard of this free service, Quad9 is a public Domain Name System (DNS) service that provides a more secure and privacy-focused alternative to traditional DNS services. DNS is the system that translates human-readable domain names (like www.google.com) into IP addresses that computers use to identify each other on the internet.

Quad9 is known for its emphasis on security and privacy. It uses threat intelligence from various cybersecurity companies to block access to known malicious websites and protect users from accessing harmful content. When a user makes a DNS query, Quad9 checks the requested domain against a threat intelligence feed, and if the domain is flagged as malicious, Quad9 blocks access to it.
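The check-then-answer flow described above can be sketched in a few lines of Python. This is a simplified model, not Quad9's implementation: the threat feed is a plain set of names, `upstream` is a hypothetical stand-in for ordinary recursive resolution, and blocked names are answered with NXDOMAIN ("no such domain"), which, as far as I know, mirrors how Quad9 itself responds to blocked domains.

```python
from typing import Callable, Optional

NXDOMAIN = "NXDOMAIN"  # DNS response meaning "this domain does not exist"


def normalise(domain: str) -> str:
    """DNS names are case-insensitive and may carry a trailing root dot."""
    return domain.strip().lower().rstrip(".")


def filtered_lookup(
    domain: str,
    threat_feed: set[str],
    upstream: Callable[[str], Optional[str]],
) -> str:
    """Answer NXDOMAIN for flagged domains, otherwise resolve normally.

    threat_feed: hypothetical set of known-malicious names (assumed lowercase).
    upstream: hypothetical resolver returning an IP address, or None if
    the name genuinely does not exist.
    """
    name = normalise(domain)
    if name in threat_feed:
        return NXDOMAIN  # blocked: the client never learns the real address
    address = upstream(name)
    return address if address is not None else NXDOMAIN


if __name__ == "__main__":
    feed = {"malware.example"}
    records = {"www.example.com": "192.0.2.10"}  # documentation-range IP
    print(filtered_lookup("WWW.Example.com.", feed, records.get))  # 192.0.2.10
    print(filtered_lookup("malware.example", feed, records.get))   # NXDOMAIN
```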

One notable feature of Quad9 is its commitment to user privacy. Quad9 does not store any personally identifiable information about its users, and it does not sell or share user data.

https://www.quad9.net/

Users can configure their devices or routers to use Quad9 as their DNS resolver to take advantage of its security and privacy features. Quad9's primary and secondary DNS server addresses are 9.9.9.9 and 149.112.112.112.
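Before changing device or router settings, you may want to confirm that Quad9 answers from your network. The sketch below sends a single hand-built DNS query (an A record lookup, following the RFC 1035 wire format) straight to 9.9.9.9 over UDP using only the Python standard library, and returns the response code. The function names are my own, and it assumes your network allows outbound UDP on port 53 to external resolvers, which some corporate networks block.

```python
import random
import socket
import struct

QUAD9_PRIMARY = "9.9.9.9"
QUAD9_SECONDARY = "149.112.112.112"


def encode_name(name: str) -> bytes:
    """Encode a domain name as DNS length-prefixed labels (RFC 1035)."""
    encoded = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"invalid label: {label!r}")
        encoded += bytes([len(raw)]) + raw
    return encoded + b"\x00"  # zero-length root label ends the name


def build_query(name: str, query_id: int) -> bytes:
    """Header (ID, flags with RD=1, one question) followed by the question."""
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    question = encode_name(name) + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN
    return header + question


def query_rcode(name: str, server: str = QUAD9_PRIMARY, timeout: float = 3.0) -> int:
    """Send one query and return the RCODE (0 = NOERROR, 3 = NXDOMAIN)."""
    query_id = random.randint(0, 0xFFFF)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name, query_id), (server, 53))
        reply, _ = sock.recvfrom(512)
    reply_id, flags = struct.unpack("!HH", reply[:4])
    if reply_id != query_id:
        raise ValueError("reply ID does not match query ID")
    return flags & 0x000F  # RCODE is the low four bits of the flags field
```

Calling `query_rcode("quad9.net")` should return 0 if Quad9 is reachable, and a lookup of a domain on its threat feed would be expected to return 3 (NXDOMAIN). Passing `QUAD9_SECONDARY` as `server` checks the backup address.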

The name “Quad9” comes from the service’s primary IP address, 9.9.9.9 (four nines). Behind that address, Quad9 runs resolvers in many locations around the world, which provides redundancy and improves reliability. Configuring your devices or router to use the Quad9 DNS servers can add an extra layer of protection against malware, phishing, and other online threats. It’s important to note, however, that Quad9 is not a substitute for other security measures such as antivirus software and good internet security practices; if you are not already using these, you are leaving yourself open to compromise.

I’d strongly recommend you take a look at Quad9. Not only is it fast, but it seems to be extremely solid and well thought out. If you’re currently using Cloudflare for your DNS, you may find, as I have, that Quad9 is actually faster.

Blog

19 Jan 2024, 18:23

Blog

13 Dec 2023, 11:18

I read this article with interest, and I must say, I do agree with the points being raised on both sides of the fence.

https://news.sky.com/story/amazon-microsoft-dominance-in-uk-cloud-market-faces-competition-investigation-12977203

There are valid points from Ofcom, the UK regulator. For example, the article reports that Ofcom

highlighted worries about committed spend discounts which, Ofcom feared, could incentivise customers to use a single major firm for all or most of their cloud needs.

This is a very good point. Customers are often tempted in with monthly discounts, but those only apply if you commit to a minimum monthly spend, which is hard to achieve (or even offset) if you are trying to reduce your spend on technology.
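To illustrate why a committed spend is hard to offset when you are cutting costs, here is a deliberately simplified Python model. It assumes a hypothetical arrangement in which a percentage discount applies to actual usage but you are billed at least the committed amount each month. Real cloud discount programmes differ in their details, and the figures are made up.

```python
def monthly_bill(usage: float, commitment: float, discount_pct: int) -> float:
    """Hypothetical committed-spend bill.

    usage: what the month's consumption would cost at list price.
    commitment: minimum amount agreed to be paid each month.
    discount_pct: percentage discount earned by making the commitment.
    """
    discounted = usage * (100 - discount_pct) / 100
    # You pay the discounted usage, but never less than the commitment.
    return max(discounted, commitment)


def break_even_usage(commitment: float, discount_pct: int) -> float:
    """List-price usage at which discounted usage exactly equals the commitment."""
    return commitment * 100 / (100 - discount_pct)


if __name__ == "__main__":
    commitment, discount = 8000, 20
    for usage in (12000, 10000, 6000):
        bill = monthly_bill(usage, commitment, discount)
        print(f"usage {usage}: committed {bill:.0f}, pay-as-you-go {usage}")
    print(f"break-even usage: {break_even_usage(commitment, discount):.0f}")
```

With these invented numbers, once usage falls below 10,000 the bill stops shrinking: cutting consumption to 6,000 still costs 8,000, which is more than the 6,000 you would pay with no commitment at all.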

On the flip side of the coin, AWS claims

Only a small percentage of IT spend is in the cloud, and customers can meet their IT needs from any combination of on-premises hardware and software, managed or co-location services, and cloud services.

This isn’t true at all. Most startups and established technology firms want to reduce their overall reliance on on-premises infrastructure as a means of reducing cost, removing the need to refresh hardware every few years, and extending the capabilities of their disaster recovery and business continuity programs. The less you rely on a physical office, the better, as this effectively lowers your RTO (Recovery Time Objective) if you suffer physical damage from fire or water (for example, but not limited to), where you would otherwise have to replace servers and other existing infrastructure before you could even start the recovery process.

Similarly, businesses that adopt the on-premises model typically require a standby or recovery site, with replication enabled between the two sites and regular, structured testing. It’s relatively simple to “fail over” to a recovery site, but much harder to move back to the primary site afterwards without further downtime. For this reason, as well as the cost benefits, several institutions have adopted the cloud model to resolve this particular issue.

The cost of data egress is well known throughout the industry and is an accepted (although not necessarily desirable) “standard” these days. AWS’s comment about the ability to switch between providers very much depends on individual technology requirements, and such a “switch” can be made much harder by using proprietary products such as Amazon Aurora, which is marketed as MySQL- and PostgreSQL-compatible. Attempting to move back to native MySQL or PostgreSQL later can reveal that some essential functionality is missing.

My personal view is that AWS are digging their heels in and disagree with the CMA because they want to retain their dominance.

Interestingly, Google Cloud (GCP) doesn’t seem to be in scope, and given Google’s dominance over literally everything internet-related, this surprises me.

Blog

8 Oct 2023, 22:26

Just seen this post pop up on Sky News

https://news.sky.com/story/elon-musks-brain-chip-firm-given-all-clear-to-recruit-for-human-trials-12965469

He has claimed the devices are so safe he would happily use his children as test subjects.

Is this guy completely insane? You’d seriously use your kids as guinea pigs in human trials? This guy clearly has more money than sense. Anyone who’d put their children in danger in the name of technology “advances” should seriously question their own ethics, and I’m honestly shocked that nobody else seems to have commented on this.

In my view, this entire “experiment” is dangerous, to say the least, as there is huge potential for error. However, the article below, in which a paralysed man was able to walk again thanks to a neuro “bridge”, describes something truly groundbreaking and life-changing for that individual.

https://news.sky.com/story/paralysed-man-walks-again-thanks-to-digital-bridge-that-wirelessly-reconnects-brain-and-spinal-cord-12888128

Crucially, this is reputable Swiss-led technology at its finest: Switzerland’s Lausanne University Hospital, the University of Lausanne, and the Swiss Federal Institute of Technology Lausanne were all involved in the process, and the implants themselves were developed by the French Atomic Energy Commission.

Musk’s “off the cuff” remark makes the entire process sound “cavalier” in my view and the brain isn’t something that can be manipulated without dire consequences for the patient if you get it wrong.

I daresay there are going to be agreements drafted by lawyers that each recipient of this technology will need to sign, exonerating Neuralink and its executives of all responsibility should anything go wrong.

I must admit, I’m torn here (in the sense of the Swiss experiment). Part of me finds it morally wrong to interfere with the human brain like this because of the potential for irreversible damage, although the benefits are huge, obviously life-changing for the recipient, and in most cases may outweigh the risk (at what level, I cannot say, not being a neurosurgeon, of course).

Interested in other views: would you offer yourself as a test subject for this? If I were in a wheelchair and couldn’t move, I think I probably would, but I would need assurance that the technology and its associated procedure are safe, and at this stage I’m not convinced that such a guarantee can be given. There are of course no real guarantees with anything these days, but this is a leap of faith that, once taken, cannot be reversed if it goes wrong.

Blog

22 Sept 2023, 10:43

I’ve just read this article with a great deal of interest. Whilst it’s not “perfect” in the way it’s written, it certainly does a very good job in explaining the IT function to a tee - and despite having been written in 2009, it’s still factually correct and completely relevant.

https://www.computerworld.com/article/2527153/opinion-the-unspoken-truth-about-managing-geeks.html

This is my interpretation:

The points made are impossible to disagree with. Yes, IT pros do want their managers to be technically competent. There’s nothing worse than having a manager who’s never been “on the tools” and is non-technical; they are about as much use as a chocolate fireguard when it comes to being a sounding board for technical issues that a specific tech cannot easily resolve.

I’ve been in senior management since 2016 and being “on the tools” previously for 30+ years has enabled me to see both the business and technical angles - and equally appreciate both of them. Despite my management role, I still maintain a strong technical presence, and am (probably) the most senior and experienced technical resource in my team.

That’s not to say that the team members I do have aren’t up to the job - very much the opposite in fact and for the most part, they work unsupervised and only call on my skill set when they have exhausted their own and need someone with a trained ear to bounce ideas off.

On the flip side, I’ve worked with some cowboys in my industry who can talk the talk but not walk the walk, and they are exposed very quickly in smaller firms, where it’s much harder to hide technical deficits behind other team members.

The hallmark of a good manager is knowing how much is involved in a specific project or task in order to steer it to completion, and being willing to step back and let others in the team take the driving seat. A huge plus is knowing how to get the best out of each individual team member without resorting to pointless techniques such as micromanagement. In other words, be on their wavelength, understand their strengths and weaknesses, then use those to the advantage of the team rather than the individual.

Sure, there will always be those in the team whom you wouldn’t put in front of clients. That is not because they don’t know their field, but because they may lack the polish or soft skills to give clients a warm fuzzy feeling, or may be unable (or simply unwilling) to explain technology to someone who lacks a fundamental understanding of how a variety of components and services intersect.

That should never be seen as a negative, though. A strong manager recognizes that whilst some team members are uncomfortable being “front of house”, they excel in other areas, supporting and maintaining technology that most users don’t even realize exists, yet use daily (or some variant of it). It is these skills that keep IT departments and their associated technologies running 24x7x365, and we should champion them from the business perspective more than we already do.

Blog

3 Sept 2023, 20:49

Blog

13 Aug 2023, 10:10

One thing I’m seeing on a repeated basis is email addresses that do not match the site or the business they are intended for. People seem to spend an inordinate amount of time and money getting their website to look exactly the way they want it, usually with highly polished results.

They then undo all of that good work by using an email address on a completely different domain for the contact details. I’ve seen a mix of Hotmail (probably the worst), Gmail, Outlook, Yahoo, and the list goes on. If you’ve purchased a domain, why not use it for email too, so that users can trust your brand rather than feeling like they are about to be scammed?

One core reason for this is design services. They tend to build out the website design, but then stop short of finishing the job and setting up the email too. Admittedly, a new domain comes with the pitfall of “trust” when set against established “mail clearing houses” such as Mimecast (to name one of many examples), and even if you do set up the mail correctly, without the corresponding and expected SPF, DKIM and DMARC records, your email is almost certain to end up in junk, if it even arrives at all.

Here’s a great guide I found that not only describes what these are, but how to set them up properly

https://woodpecker.co/blog/spf-dkim/
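As a rough illustration of what these records look like, here is a small Python sketch that assembles typical SPF, DKIM and DMARC entries for a domain. The domain `example.com`, the include host `spf.mailprovider.example`, the selector `s1` and the reporting address are all hypothetical placeholders. Your mail provider will tell you the exact include and DKIM public key to publish; the strings below only show the general shape of each record.

```python
VALID_DMARC_POLICIES = {"none", "quarantine", "reject"}


def spf_value(includes: list[str], all_qualifier: str = "~all") -> str:
    """SPF TXT value published at the domain apex.

    includes: hosts whose SPF records authorise your mail provider's servers.
    "~all" soft-fails any other sender; "-all" fails them outright.
    """
    mechanisms = " ".join(f"include:{host}" for host in includes)
    return f"v=spf1 {mechanisms} {all_qualifier}"


def dkim_record_name(selector: str, domain: str) -> str:
    """DKIM public keys are published under <selector>._domainkey.<domain>."""
    return f"{selector}._domainkey.{domain}"


def dmarc_record(domain: str, policy: str, report_to: str) -> tuple[str, str]:
    """DMARC TXT record name and value, published at _dmarc.<domain>."""
    if policy not in VALID_DMARC_POLICIES:
        raise ValueError(f"DMARC policy must be one of {sorted(VALID_DMARC_POLICIES)}")
    return f"_dmarc.{domain}", f"v=DMARC1; p={policy}; rua=mailto:{report_to}"


if __name__ == "__main__":
    domain = "example.com"
    print(domain, "TXT", spf_value(["spf.mailprovider.example"]))
    print(dkim_record_name("s1", domain), "TXT", "v=DKIM1; k=rsa; p=<public key from your provider>")
    name, value = dmarc_record(domain, "quarantine", "dmarc-reports@example.com")
    print(name, "TXT", value)
```

Each printed line is a record name, its type and its value, roughly as you would enter it in a DNS control panel. A common approach is to start DMARC at `p=none`, review the reports, and tighten to `quarantine` or `reject` once legitimate mail is passing.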

I suspect most of these “design boutiques” lack the experience and knowledge to get email working properly for the domain in question, or they consider it outside the scope of what they are providing. If I were asked to develop a website (and I’ve done a fair few in my time), email would always be in scope, and it would be configured properly. The same applies when I build a VPS, as others here will likely attest.

My personal experience of this was using a local alloy wheel refurb company (scuffed alloys on the car). I’d found a local company who came highly recommended, so contacted them - only to find that the owner was using a Hotmail address for his business! I did honestly reconsider, but after meeting up with the owner and seeing his work first hand (he’s done the alloys on two of my cars so far and the work is of an excellent standard), I was impressed, and he’s since had several work projects from me, and recommendations to friends and family.

I did speak to him about the usage of a Hotmail address on his website, and he said that he had no idea how to make the actual domain email work, and the guy who designed his website didn’t even offer to help - no surprises there. I offered to help him set this up (for free of course) but he said that he’d had that address for years and didn’t want to change it as everyone knew it. This is fair enough of course, but I can’t help but wonder how many people are immediately turned off or become untrusting because a business uses a publicly available email service…

Perhaps it’s just me, but branding (in my mind) is essential, and you have to get it right.

Blog

12 Aug 2023, 12:25

Blog

20 Jul 2023, 17:57

This really is a great read and a trip down memory lane in terms of how the mobile telephone has evolved over the years into the devices we know today.

Some surprises along the journey (based on little-known facts), but definitely well worth the read.

Interestingly, the reference to the UK’s first mobile phone call which was made in 1985 is featured in My Journey which can be found here.

https://news.sky.com/story/the-matrix-phone-to-the-iphone-and-that-unforgettable-ringtone-50-moments-in-50-years-since-first-mobile-call-12845390

I can certainly draw a parallel with the BlackBerry devices. I had several of these when RIM was the technology leader. It’s surprising how things turn around: one minute they were industry pioneers, and the next, they were on a path to rapid demise.

Then Nokia introduced the N95. I had one of these in 2006 for a short period, coupled with a Treo (who remembers those?)…

https://en.m.wikipedia.org/wiki/Treo_650

Enjoy the ride!

Blog

18 Jul 2023, 10:30


AI... A new dawn, or the demise of humanity ?

learning intelligence blog | 22 Nov 2021, 13:03 | 203 Votes | 361 Posts | 42k Views

Recall to take screenshots every 2 seconds

spying copilot microsoft | 25 May 2024, 23:26 | 9 Votes | 14 Posts | 913 Views

Japan eliminates the usage of floppy disks - in 2024?

floppy japan media | 6 Jul 2024, 00:06 | 11 Votes | 16 Posts | 655 Views

Goodbye OnePlus, hello Samsung

oneplus performance | 29 Sept 2023, 16:32 | 36 Votes | 73 Posts | 3k Views

Apple, what were you thinking?

apple history crushed | 9 May 2024, 15:30 | 14 Votes | 15 Posts | 1k Views

Julian Assange Freed - What's your view?

wikileaks assange freedom law | 25 Jun 2024, 11:37 | 3 Votes | 4 Posts | 411 Views

Linux vs Windows - who wins ?

windows linux | 6 Oct 2022, 09:16 | 4 Votes | 8 Posts | 596 Views

Why Forums Are Still Relevant in 2024

forums privacy | 6 Aug 2024, 17:25 | 2 Votes | 3 Posts | 288 Views

The Takedown of the 911 S5 Botnet: A Significant Blow to Cybercrime

911 s5 botnet | 30 May 2024, 12:36 | 0 Votes | 2 Posts | 965 Views

EU Launches Antitrust Investigation into Tech Giants

antitrust tech investigation | 26 Mar 2024, 17:07 | 3 Votes | 3 Posts | 528 Views

CSS border gradients

gradient border css | 12 Mar 2024, 18:56 | 2 Votes | 10 Posts | 694 Views

Looking for secure (and free) DNS? Quad 9 is your new best friend

dns quad9 security | 18 Jan 2024, 15:22 | 3 Votes | 4 Posts | 1k Views

The pandemic effect on technology

covid pandemic tech | 13 Dec 2023, 11:18 | 2 Votes | 1 Posts | 885 Views

Do AWS and Microsoft have too much dominance?

aws cma dominance | 8 Oct 2023, 17:55 | 1 Votes | 3 Posts | 410 Views

Neuralink given all-clear to recruit for human trials

neuralink brain implant | 22 Sept 2023, 10:43 | 2 Votes | 1 Posts | 268 Views

How do you manage IT pros?

team management | 29 Aug 2023, 18:10 | 1 Votes | 3 Posts | 456 Views

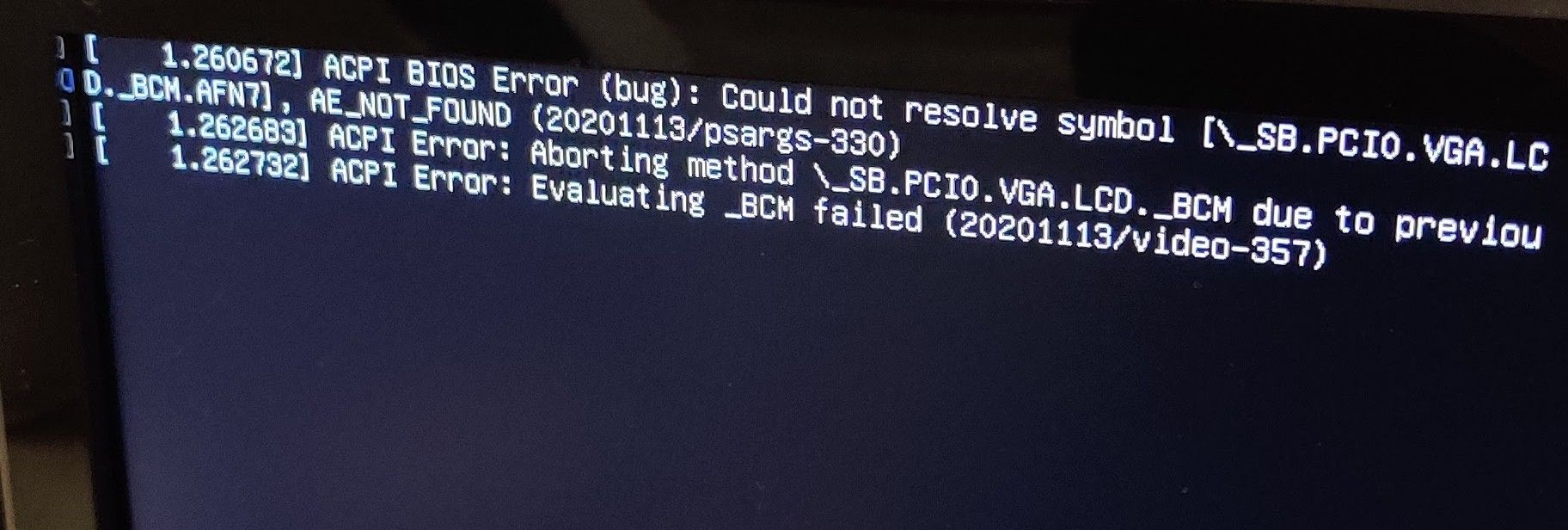

Wasting time on a system that hangs on boot

boot linux windows | 12 Aug 2023, 11:04 | 1 Votes | 3 Posts | 482 Views

Website and mail branding failures

branding email domain | 10 Aug 2023, 13:51 | 16 Votes | 12 Posts | 903 Views

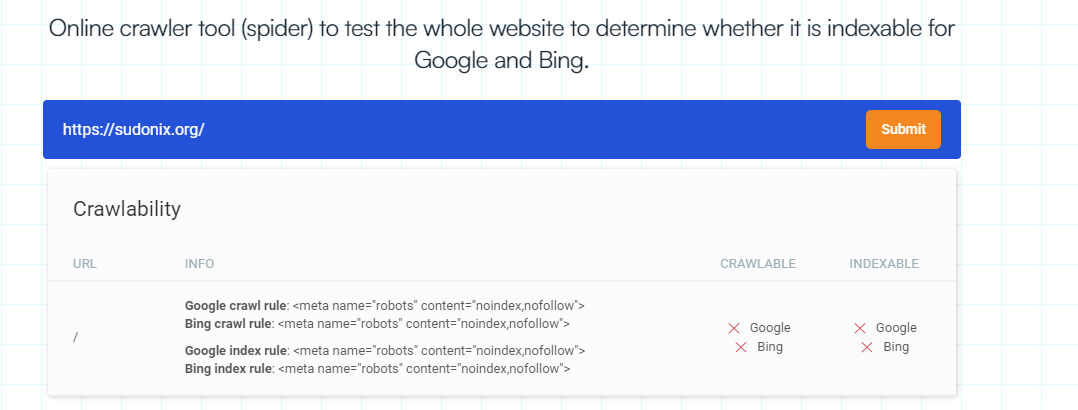

Cloudflare bot fight mode and Google search

cloudflare crawler indexing | 18 Jun 2023, 11:22 | 10 Votes | 12 Posts | 2k Views

50 moments in 50 years

sky nostalgia technology | 17 Jul 2023, 21:52 | 2 Votes | 3 Posts | 486 Views