AI... A new dawn, or the demise of humanity?

-

Ever since the first computer was created and made available to the world, technology has advanced at an incredible pace. Even before the World Wide Web became the common platform it is today, there were innovators. Some of them faded into obscurity before their idea ever made it into the mainstream. Take Sir Clive Sinclair’s ill-fated C5, effectively the prehistoric Segway of the 1980s, whose maker ended up in receivership after the C5 fell short of both sales forecasts and public enthusiasm. The reasons most often cited were safety, practicality, and its notoriously short battery life. Sinclair had a keen interest in battery-powered vehicles, and whilst his idea seemed outlandish in the 1980s, a look at today’s electric car market suggests he was actually a groundbreaking pioneer.

The Technology Revolution

The next revolution in technology was, without doubt, the World Wide Web. It was pioneered in 1989 by Sir Tim Berners-Lee whilst he was working at CERN in Switzerland, and it was built on a new markup language, HTML. This new technology, coupled with the Mosaic browser, brought the web to a mass audience and formed the basis of online communication as we know it today. The dot com bubble lasted from around 1995 until it finally burst in 2001. It brought a huge wave of interest in this new communication phenomenon, and with it new ideas. Ideas that were previously considered inconceivable became probable, and then reality, as technology gained significant ground and funding from a variety of sources. Cisco was one of the earliest companies to bet on the internet. Despite losing almost 86% of its market value in the dot com fallout, it managed to cling on, and it is now a major supplier of the networking technology and infrastructure that underpins the internet. In a similar vein, eBay and Amazon were early products of the dot com boom and also managed to stay afloat during the crash. Amazon is the huge success story. It went from simply selling books to being one of the largest technology firms in its space, pioneering Amazon Web Services (AWS) along the way. The rapid adoption of AWS saw organisations move their operations out of their own physical data centres and into cloud-based environments.

With the rise of the internet came the rise of automation and Artificial Intelligence. Early technological advances and revolutionary ideas arrived in the form of self-service ATMs, analogue cell phones, credit card transaction processing (data warehouses), and improvements to home appliances, all designed to make our lives easier. It’s undisputed that technology has immensely enriched our lives and allowed us to achieve feats of engineering and construction that Brunel could only have dreamed of. Even so, the wheel of technological evolution now spins at an alarming rate. When first launched, the mobile phone was the size of a house brick and had an antenna that made putting it in your pocket impossible unless you wore a trench coat. Later iterations saw analogue give way to digital, and the cell phone shrink to the size of a Mars bar. As with all technology advances, the first generation was rapidly left behind, with 3G and then 4G becoming the accepted mainstream standard (and 5G in the final stages before release). Alongside this improved access to mobile networks came the smartphone, an idea popularised in 2007 by Steve Jobs with the arrival of the original iPhone (often called the iPhone 2G). The iPhone rocketed in popularity and rapidly became the most sought-after technology in the world, thanks largely to Jobs’s insight. Since 2007, we’ve seen several new iPhone and iPad models surface, currently up to the iPhone X. 2008 saw the release of the first device running Google’s competing Android platform, version 1.0. Fast forward to 2017 and the most recent release is Oreo (8.0). The era of smartphones and higher-capacity networks made it possible to communicate in ways that were previously inaccessible. From instant messaging to video calls on your smartphone, plus a wealth of applications providing both entertainment and enhanced functionality, technology was now at the forefront of everyday life and a major component of it.

The brain’s last stand?

The rise of social media platforms such as Facebook and Twitter took communication to a new level, further enriching how we interact with others and the information we share on a daily basis. However, to fully understand how Artificial Intelligence came to have such a dramatic impact on our lives, we need to step back to 1950, when Alan Turing proposed what became known as the Turing Test. Probably the most defining moment in Artificial Intelligence history came in 1997, when reigning world chess champion Garry Kasparov played the IBM supercomputer Deep Blue, and lost. Not surprising when you consider that Deep Blue could evaluate around 200 million positions per second. The question was, could it cope with strategy? The clear answer was yes. Dubbed “the brain’s last stand”, this match set Artificial Intelligence on an inevitable path to new heights. The US military had attempted to use AI during the Cold War, although this amounted to virtually nothing. However, interest in Artificial Intelligence rose quickly, and the technology began to be taken seriously through a range of autonomous robots. BigDog, developed by Boston Dynamics, was one of the first. It was designed to operate as a robotic pack animal in terrain considered inaccessible to standard vehicles, although it never actually saw active service. iRobot also gained popularity and became a major player in this area. Its bomb disposal robot, PackBot, combines user control with artificial intelligence capabilities such as explosives sniffing. To date, over 2000 PackBots have been deployed in Iraq and Afghanistan to tackle IEDs safely and prevent loss of human life.

Never heard of Boston Dynamics? Here’s a video that’ll give you an insight into one of their latest creations.

In 2011, in an event reminiscent of the Kasparov match, IBM unveiled its latest technology, Watson, which took on the human brain again, this time on the US quiz show Jeopardy. Watson was “trained” for three years for this challenge. Using a complex set of algorithms and machine learning, it trounced its human opponents (two of the show’s most successful contestants) and stole the show. The event quickly went viral and established Artificial Intelligence as a prominent technology. It appeared to have advanced to the point where it could outperform the human brain. 2014 saw the introduction of driverless vehicles that leveraged Artificial Intelligence to make decisions based on road and traffic conditions. Both Tesla and Google are major players in this area, with Tesla the more prominent of late. I’ve previously written an article concerning the use of Artificial Intelligence in driverless vehicles that can be found here.

The Facebook Experiment

In the same year, Tim Berners-Lee himself said that machines were getting smarter, but humans were not. He also stated that computers would end up communicating with each other in their own language. Given the Facebook AI experiment conducted this year, this prediction appears to have been correct. Here’s an excerpt from the exchange between two bots named Alice and Bob.

Bob: i can i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i i can i i i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i . . . . . . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i i i i i everything else . . . . . . . . . . . . . .

Alice: balls have 0 to me to me to me to me to me to me to me to me to

Bob: you i i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Whilst this exchange is difficult for a human to decipher and looks like complete gibberish (you could draw some parallel with The Chuckle Brothers on LSD), the AI in this experiment had actually developed its own bespoke shorthand as a means of making the exchange more effective. The problem with this approach was that the bots were designed to communicate with humans, not with each other. Nor is this kind of AI decision-making new. Google Translate reportedly converts its input into its own internal machine representation before producing a translation. The developers at Google noticed this, but were happy for it to continue as it made the AI more effective. Which brings us to reality. Is this acceptable when AI is supposed to enhance something rather than effectively exclude humans from the process? The whole idea is interaction. There are plenty of rumours circulating on the internet as to why Facebook pulled the plug. Was it out of fear, or did the scientists simply decide to abandon the experiment because it didn’t produce the desired result?

The future of AI - and human existence?

A more disturbing point is that the AI appears to have had control of the decision-making process, and did not need a human to choose or approve any request. We’ve actually had basic AI for many years in the form of speech recognition when calling a customer service centre. We also have unattended website bots that can answer basic questions quickly and effectively and, when they cannot answer, have the intelligence to route the question elsewhere. But what happens if you take AI to a new level and give it control of something far more sinister, such as military capabilities? Here’s a video outlining how one scenario involving autonomous weapons could play out if we continue down the path of ignorance. Whilst it seems like Hollywood, the potential is very real and should be taken seriously. In fact, this particular footage, whilst fiction, has been taken very seriously, with names such as Elon Musk and Stephen Hawking lending strong support to calls for a ban on autonomous weapons and the use of AI in their deployment.

Does this strike a chord with you? Is this really how we are going to allow AI to evolve? We’ve had unmanned drones for a while now. Whilst they are effective at delivering surgical strikes on a particular target, they are still controlled by humans who can override them and ultimately decide when to strike. The real question here is just how far we want to take AI in terms of autonomy and decision-making. Do we want AI to enrich our lives, or to assume our identities, allowing the human race to slip into oblivion? If we allow this to happen, where does our purpose lie, and what function would humanity then provide that AI can’t? People need to realise that as soon as we fully embrace AI and give it control over aspects of our lives, we will effectively end up working for it rather than it working for us. Is AI going to pay for your pension? No, but it could certainly replace your existence.

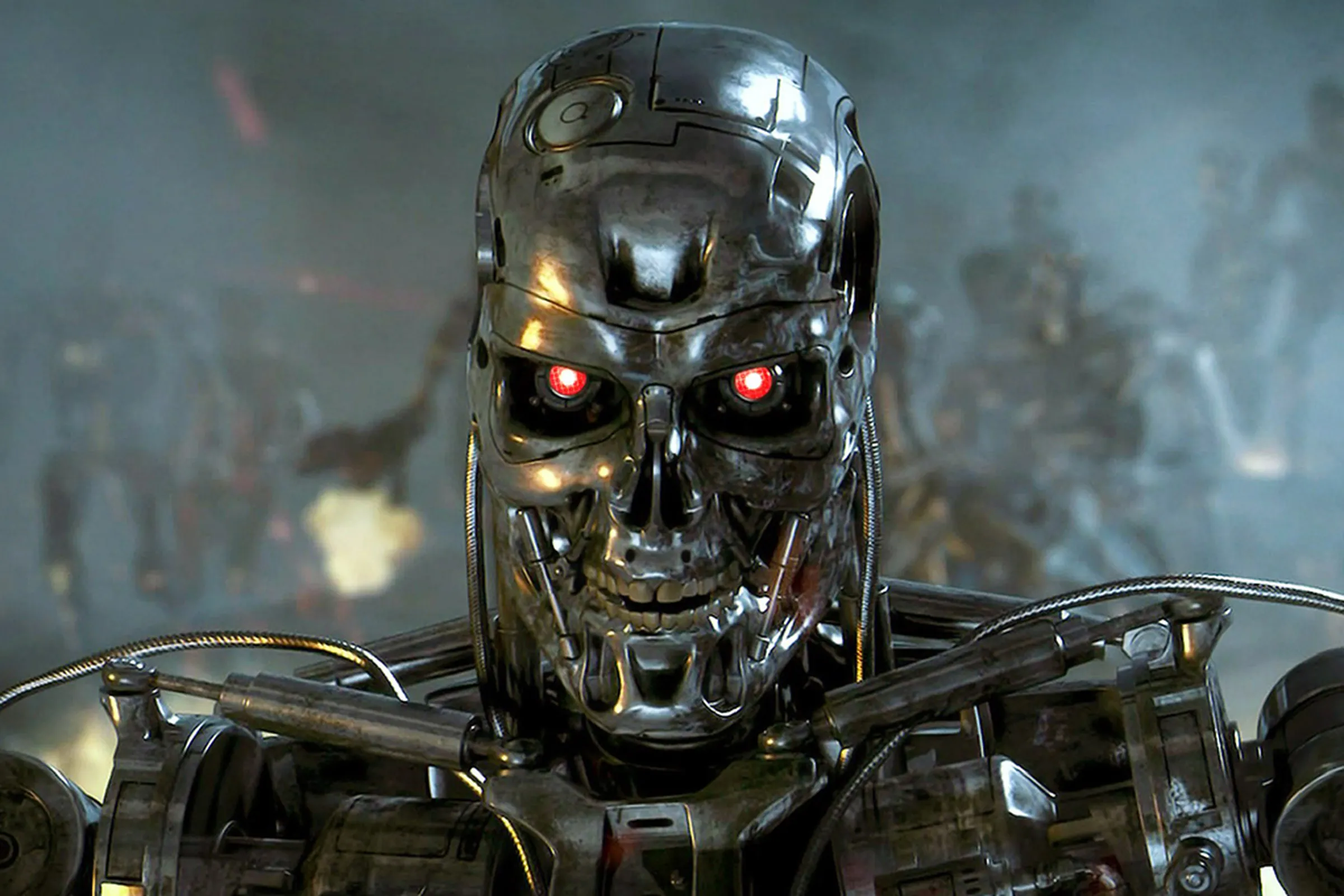

In addition, how long before that “intelligence” decides you are superfluous to requirements? Sounds very “Hollywood”, I’ll admit, but there really needs to be a clear boundary between what the human race accepts as progress and what amounts to elimination. Have I been watching too many Terminator films? No. I live and breathe technology and fully embrace it, but replacing the human way of life with machines is not the answer to the world’s problems; it is the road to our downfall. It’s already started with driverless cars, and will only get worse, if we allow it.

-

phenomlab referenced this topic on 11 Jan 2023, 18:19

-

Here’s another article that might make those not concerned by AI think again.

https://globalnews.ca/news/9432503/chatgpt-exams-passing-mba-medical-licence-bar/

@phenomlab this is really interesting. I saw a similar article where a professor of religion gave ChatGPT the Bible and some other texts and asked it to write a six-page paper on a specific topic, making it look as though the professor had written it. Three seconds later it was done, and the paper was top notch and genuinely looked like the professor’s own work.

Looking at it from that aspect, it is scary to think a computer can do that. My other thought is: what if you gave the AI all the medical information there is, including evidence-based research, test results, and patient outcomes from around the world? What conclusions, or maybe even new information, might it come up with to help with disease, cancer and all that kind of stuff, in a way that could help everyone? It would probably take it a matter of seconds to figure it all out.

I wonder if it could make diagnosing instantaneous and more accurate, and maybe even find better ways to fix an ailment.

I also think that in the wrong hands it could be very dangerous.

It is very interesting.

-

@Madchatthew said in AI... A new dawn, or the demise of humanity ?:

I wonder if it could make diagnosing instantaneous and more accurate, and maybe even find better ways to fix an ailment.

This is an interesting take, given your profession.

I totally get it though - I can certainly see a hugely beneficial use case for this.

@Madchatthew said in AI... A new dawn, or the demise of humanity ?:

I also think that in the wrong hands it could be very dangerous.

They say a picture paints a thousand words…

-

@phenomlab said in AI... A new dawn, or the demise of humanity ?:

They say a picture paints a thousand words…

LOL - yes, in the making LOL

-

phenomlab referenced this topic on 29 Mar 2023, 20:17

-

And this is certainly interesting. I came across it on Sky News this morning:

It seems even someone considered the “Godfather of AI” has quit, and is now raising concerns around privacy and jobs (and we’re not talking about Steve here either).

-

A rare occasion where I actually agree with Elon Musk.

Some interesting quotes from that article:

“So just having more advanced weapons on the battlefield that can react faster than any human could is really what AI is capable of.”

“Any future wars between advanced countries or at least countries with drone capability will be very much the drone wars.”

When asked if AI advances the end of an empire, he replied: “I think it does. I don’t think (AI) is necessary for anything that we’re doing.”

This is also worth watching.

This further bolsters my view that AI needs to be regulated.

-

-

And here - Boss of AI firm’s ‘worst fears’ are more worrying than creepy Senate party trick

US politicians fear artificial intelligence (AI) technology is like a “bomb in a china shop”. And there was worrying evidence at a Senate committee on Tuesday from the industry itself that the tech could “cause significant harm”.

-

phenomlab referenced this topic on 6 Jun 2023, 16:29

-

An interesting argument, but one with little foundation in my view.

“But many of our ingrained fears and worries also come from movies, media and books, like the AI characterisations in Ex Machina, The Terminator, and even going back to Isaac Asimov’s ideas which inspired the film I, Robot.”

@phenomlab yeap, but no need to fear

this might even be better for humanity, since they will have a “common enemy” to fight against, so, maybe instead of fighting with each other, they will unite.

In general, I do not embrace anthropocentric views well… and since human greed and money will determine how this will end, we all can guess what will happen…

so sorry to say this, mates, but if there is a robot uprising, I will sell out the human race hard

-

@crazycells I understand your point - although selling out the human race would include you.

There’s a great video on YouTube that goes into more depth (along with the “Slaughterbots” video in the first post) that I think is well worth watching. Unfortunately, it’s over an hour long, but does go into specific detail around the concerns. My personal concern is not one of having my job replaced by a machine - more about my existence.

-

And whilst it looks very much like I’m trying to hammer home a point here, see below. Clearly, I’m not the only one concerned about the rate of AI’s development, and the consequences if it is not managed properly.

-

@phenomlab thanks for sharing. I will watch this.

no worries

I do not have a high opinion of the human race; human greed wins every time, so I always feel it would be futile to resist. We are just one of millions of species around us. Thanks to evolution, our genes make us selfish creatures, but even if there is a catastrophe, I am pretty sure there will be at least a handful of survivors to carry on.

-

@phenomlab maybe I did not understand it well, but I do not share the opinions of this article. Are we trying to prevent deadly weapons from being built, or are we trying to prevent AI from being part of them?

Regulations might (and probably will) be bent by individual countries secretly. So, what will happen then?
