Never underestimate the importance of security

Over the years, I’ve been exposed to a variety of industries - one of these is aerospace engineering and manufacturing. During my time in this industry, I picked up a wealth of experience in processing, manufacturing, treatments, inspection, and more besides. Sheet metal work within the aircraft industry is fine-limit. We’re not talking about millimeters here - we’re talking about thousandths of an inch, or “thou” to be more precise. Sounds Imperial, right? Correct. This has been the standard for years, and hasn’t really changed. The same applies to sheet metal thickness, typically measured using SWG (Standard Wire Gauge). For example, 16 SWG is actually 1.6mm thick or thereabouts, and the only way you’d get a true reading is with either a Vernier or a micrometer. For those now totally baffled, one mm is around 40 thou, and a single thou is 25.4 micrometers (μm). Can you imagine having to work to such a minute limit, where the work you’ve submitted is 2 thou out of tolerance and, as a result, fails first-off inspection? Welcome to precision engineering. It’s not all tech and fine-limit though. In every industry, you have to start somewhere. And typically, in engineering, you’d start as an apprentice in the store room learning the trade and associated materials.

Anyone familiar with engineering will know exactly what I mean when I use terms such as Gasparini, Amada, CNC, Bridgeport, guillotine, and Donkey Saw. Whilst the Donkey Saw sounds like animal cruelty, it’s actually an automated mechanical saw whose job it is to cut tough material (such as S99 bar, which is hardened stainless steel), simulating the back and forth action manually performed with a hacksaw. Typically, a barrel of coolant was connected to the saw and periodically deposited liquid onto the blade to prevent it from overheating and snapping. Where am I going with all this?

Well, through my tenure in engineering, I started at the bottom as “the boy” - the one you’d send to the stores to get a plastic hammer, a long weight (wait), a bubble for a spirit level, sky hooks, and just about any other imaginary or pointless tool you could think of. It was part of the initiation ceremony - and the learning process.

One other extremely dull task was to cut “blanks” in the store room from 8’ X 4’ sheets of CRS (cold rolled steel) or L166 (1.6mm aerospace grade aluminium, poly coated on both sides). These would then be used to make parts according to the drawing and spec you had, or could be for tooling purposes. My particular “job” (if you could call it that) in this case was to press the footswitch to activate the guillotine blade after the sheet was placed into the guide. The problem is that after about 50 or so blanks, you stop paying attention and only listen for the trigger word requiring you to “react”. In this case, that particular word was “right”. This meant that the old guy I was working with had placed the sheet, and was ready for me to kick the switch to activate the guillotine. All very high tech and vitally important - not.

And so, here it is. Jim walks into the store room where we’re cutting blanks, and asks George if he’d like coffee. After 10 minutes, Jim returns with a tray of drinks and shouts “George, coffee!”. George, fiddling with the guillotine guide, responds with “right”… See if you can guess the rest…

George went as white as a sheet and almost fainted as the guillotine blade narrowly missed his fingers. It took more than one coffee laden with sugar to put the colour back into his cheeks and restore his ailing blood sugar level.

The good news is that George finally retired with all his fingers intact, and I eventually progressed through the shop floor and learned a trade.

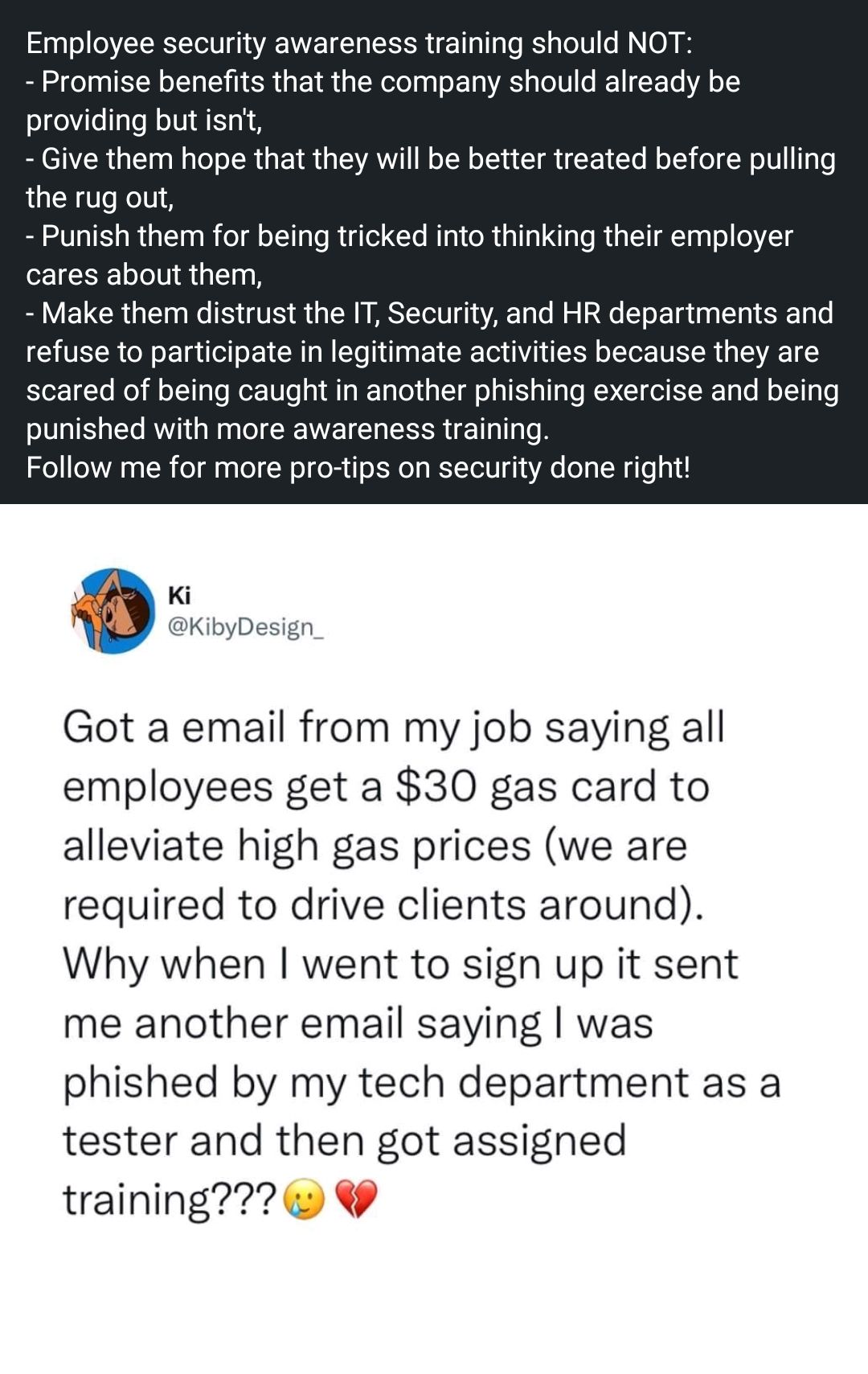

The purpose of this post? In an ever-changing and evolving security environment, have your wits about you at all times. It’s not only your organisation’s information at stake, but also that of the clients who have entrusted you as a custodian to keep it safe and prevent unauthorised access. Information Security is a 101 rule to be adhered to at all times - regardless of how experienced you think you are. Complacency is at the heart of most mistakes. By staying alert and taking a more pragmatic approach, that risk is greatly reduced.
