Optimum config for NodeBB under NGINX

-

I noticed that my v3 instance of NodeBB in test was much slower than live, even though it was using the same database and so on. On closer inspection, the NGINX configuration needed a tweak, so I'm posting my settings here so others can benefit from them. Note that various values have been redacted for obvious privacy and security reasons, so you will need to replace them with the values from your own environment.

```nginx
server {
    # Ensure you put your server name here, such as example.com www.example.com etc.
    server_name servername;
    listen x.x.x.x:443 ssl http2;

    access_log /path/to/access.log;
    error_log /path/to/error.log;

    ssl_certificate /path/to/ssl.combined;
    ssl_certificate_key /path/to/ssl.key;

    # You may not need the below values, so feel free to remove these if not required
    rewrite ^\Q/mail/config-v1.1.xml\E(.*) $scheme://$host/cgi-bin/autoconfig.cgi$1 break;
    rewrite ^\Q/.well-known/autoconfig/mail/config-v1.1.xml\E(.*) $scheme://$host/cgi-bin/autoconfig.cgi$1 break;
    rewrite ^\Q/AutoDiscover/AutoDiscover.xml\E(.*) $scheme://$host/cgi-bin/autoconfig.cgi$1 break;
    rewrite ^\Q/Autodiscover/Autodiscover.xml\E(.*) $scheme://$host/cgi-bin/autoconfig.cgi$1 break;
    rewrite ^\Q/autodiscover/autodiscover.xml\E(.*) $scheme://$host/cgi-bin/autoconfig.cgi$1 break;
    # You may not need the above values, so feel free to remove these if not required

    client_body_buffer_size 10K;
    client_header_buffer_size 1k;
    client_max_body_size 8m;
    large_client_header_buffers 4 4k;
    client_body_timeout 12;
    client_header_timeout 12;
    keepalive_timeout 15;
    send_timeout 10;

    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
    proxy_set_header Host $http_host;
    proxy_set_header X-NginX-Proxy true;
    proxy_redirect off;

    # Socket.io support
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "upgrade";

    gzip on;
    gzip_disable "msie6";
    gzip_vary on;
    gzip_proxied any;
    gzip_min_length 1024;
    gzip_comp_level 6;
    gzip_buffers 16 8k;
    gzip_http_version 1.1;
    gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

    # Note: a location that sets its own add_header (such as /assets and /plugins below)
    # does not inherit the add_header directives defined here
    add_header X-XSS-Protection "1; mode=block";
    add_header X-Download-Options "noopen" always;
    add_header Content-Security-Policy "upgrade-insecure-requests" always;
    add_header Referrer-Policy 'no-referrer' always;
    add_header Permissions-Policy "accelerometer=(), camera=(), geolocation=(), gyroscope=(), magnetometer=(), microphone=(), payment=(), usb=()" always;
    # This is the string that will show in the headers if requested, so you can put what you want in here. Keep it clean :)
    add_header X-Powered-By "<whatever you want here>" always;
    add_header X-Permitted-Cross-Domain-Policies "none" always;

    location / {
        # Don't forget to change the port to the one you use. I have a non-standard one :)
        proxy_pass http://127.0.0.1:5000;
    }

    location @nodebb {
        # Don't forget to change the port to the one you use. I have a non-standard one :)
        proxy_pass http://127.0.0.1:5000;
    }

    location ~ ^/assets/(.*) {
        root /path/to/nodebb/;
        try_files /build/public/$1 /public/$1 @nodebb;
        add_header Cache-Control "max-age=31536000";
    }

    location /plugins/ {
        root /path/to/nodebb/build/public/;
        try_files $uri @nodebb;
        add_header Cache-Control "max-age=31536000";
    }

    # Only takes effect if this server block also listens on plain HTTP (port 80)
    if ($scheme = http) {
        # Ensure you set your actual domain here
        rewrite ^/(?!.well-known)(.*) https://yourdomain/$1 break;
    }
}
```

I've added comments at the obvious places where you need to make changes. Depending on how your server is configured and its capabilities, this should improve performance considerably.
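Because the server block above only listens on 443, the `if ($scheme = http)` redirect will never fire on its own. One common alternative is a separate port-80 server that sends everything to HTTPS while still letting certificate renewal through. This is a minimal sketch, assuming `example.com` as a hypothetical domain and `/path/to/webroot` as a hypothetical ACME challenge directory:

```nginx
server {
    listen 80;
    # Hypothetical domain: replace with your own
    server_name example.com www.example.com;

    # Serve ACME HTTP-01 challenges (e.g. Let's Encrypt renewals) over plain HTTP
    location /.well-known/acme-challenge/ {
        root /path/to/webroot;
    }

    # Permanently redirect every other request to the HTTPS server
    location / {
        return 301 https://$host$request_uri;
    }
}
```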

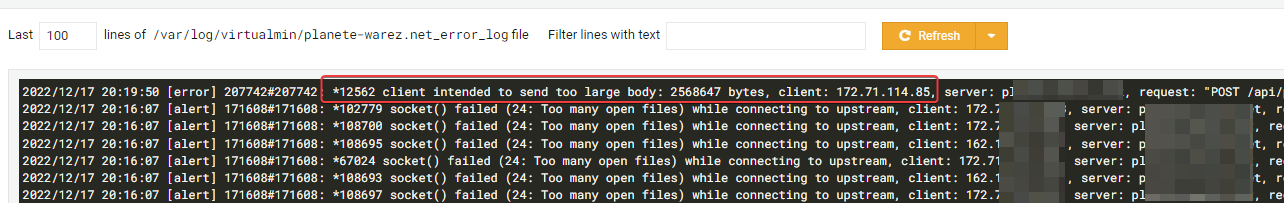

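There is a caveat, though, and it's an important one: don't use excessively large values in the section below.

```nginx
# Buffer for reading the request body; larger bodies are written to a temporary file
client_body_buffer_size 10K;
# Buffer for reading the request header
client_header_buffer_size 1k;
# Maximum allowed request body size (e.g. uploads); larger requests get a 413 error
client_max_body_size 8m;
# Number and size of buffers used for large request headers
large_client_header_buffers 4 4k;
# Timeouts, in seconds
client_body_timeout 12;
client_header_timeout 12;
keepalive_timeout 15;
send_timeout 10;
```

Keep to these values, and if anything, adjust them DOWN to suit your server.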
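If one part of the forum genuinely needs a higher limit, one option is to raise it only for that route instead of server-wide. This is a minimal sketch, assuming uploads are posted to `/api/post/upload` (an assumption; check the upload route for your NodeBB version), a hypothetical 20m limit, and the same backend port as above:

```nginx
# Hypothetical: allow larger request bodies on the upload route only,
# leaving the server-wide client_max_body_size at 8m.
# Exact-match location, so no other location is affected.
location = /api/post/upload {
    client_max_body_size 20m;
    # proxy_set_header directives from the server block are inherited,
    # because none are defined at this level
    proxy_pass http://127.0.0.1:5000;
}
```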


-

phenomlab marked this topic as a regular topic on 3 Feb 2023, 13:46

-


Hi @phenomlab, is there any reason you don't use port 4567?

Also, do you scale your forum across three ports?

-


@crazycells Hi, there's no security reason or anything specific in this case. However, the `nginx.conf` I posted was from my dev environment, which uses this port so that it doesn't interfere with production. And yes, I use clustering on this site with three instances.
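For anyone wanting to try a similar setup, clustering is typically done by running several NodeBB instances on different ports and load-balancing across them in NGINX. This is a minimal sketch, assuming three instances on ports 4567, 4568 and 4569 and a hypothetical upstream name `nodebb_cluster`:

```nginx
# Hypothetical upstream pool for three NodeBB instances.
# This goes in the http context, outside the server block.
upstream nodebb_cluster {
    # Pin each client IP to one instance so Socket.IO connections stay sticky
    ip_hash;
    server 127.0.0.1:4567;
    server 127.0.0.1:4568;
    server 127.0.0.1:4569;
}

# Then, inside the server block, point both proxy locations at the pool:
#   location / { proxy_pass http://nodebb_cluster; }
#   location @nodebb { proxy_pass http://nodebb_cluster; }
```

On the NodeBB side, this corresponds to listing the ports as an array in `config.json`, for example `"port": ["4567", "4568", "4569"]`.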
