Surface Web, Deep Web, And Dark Web Explained


When you think about the internet, what’s the first thing that comes to mind? Online shopping? Gaming? Gambling sites? Social media? Each of these certainly depends on internet access, and it would be almost impossible to imagine life without the web as we know it today. However, how well do we really know the internet and its underlying components?

Let’s first understand the origins of the Internet

The “internet” as we know it today in fact began life as a project called ARPANET. The first workable version came in the late 1960s and used the acronym above rather than the less friendly “Advanced Research Projects Agency Network”. The project was initially funded by the U.S. Department of Defense, and used early forms of packet switching to allow multiple computers at geographically separate sites to communicate over a single network - an early example of what we’d now call a WAN (Wide Area Network).

The internet itself isn’t one machine or server. It’s an enormous collection of networking components such as switches, routers, servers and much more, located all over the world, which communicate using common “protocols” (agreed rules for how data is packaged and delivered between connected devices) such as TCP (Transmission Control Protocol) and UDP (User Datagram Protocol). Both TCP and UDP use numbered “ports” to direct traffic to the right service on a device, and each connected device requires an internet address (known as an IP address) so that it can be identified amongst millions of other interconnected devices.
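To make the idea of IP addresses and ports a little more concrete, here is a minimal Python sketch that opens a TCP connection between two sockets on the same machine. It is only an illustration: the function name `loopback_echo` is made up for this example, and the loopback address `127.0.0.1` stands in for a real remote device.

```python
import socket


def loopback_echo(message: bytes):
    """Send `message` over a TCP connection on this machine (hypothetical helper).

    Returns the bytes received plus the (IP, port) pairs of both ends.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        # Port 0 asks the operating system to pick any free port.
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        server_addr = server.getsockname()

        # The client connects to the server's IP address and port.
        with socket.create_connection(server_addr) as client:
            conn, client_addr = server.accept()
            with conn:
                client.sendall(message)
                received = conn.recv(1024)

    return received, server_addr, client_addr


if __name__ == "__main__":
    data, server_addr, client_addr = loopback_echo(b"hello")
    print("server listened on", server_addr)
    print("client connected from", client_addr)
    print("server received", data)
```

Both ends share the same IP address here, so it is the port numbers that tell the two sockets apart, which is roughly the role ports play between real devices too.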

In 1983, ARPANET adopted the newly available TCP/IP protocol suite, which enabled scientists and engineers to assemble a “network of networks” and lay the foundation for the internet as we know it today. The icing on the cake came in 1990 when Tim Berners-Lee created the World Wide Web (www as we affectionately know it), which runs on top of the internet and allows websites and hyperlinks to work together to form the web we know and use daily.

However, over time, the model changed as various sites chose to remain outside the reach of search engines such as Google, Bing, Yahoo, and the like. Restricting access in this way also gave content owners a mechanism to charge users for access to content - referred to today as a “paywall”. Out of this new model came, effectively, three layers of the internet.

Three “Internets”?

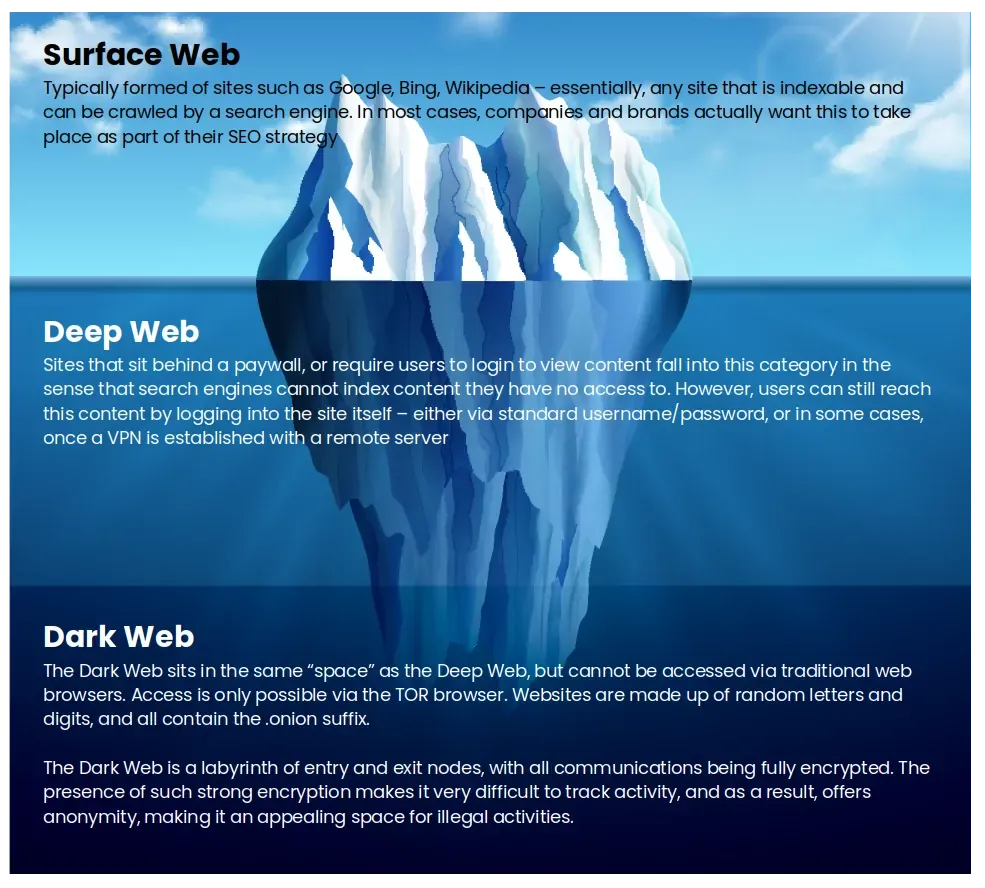

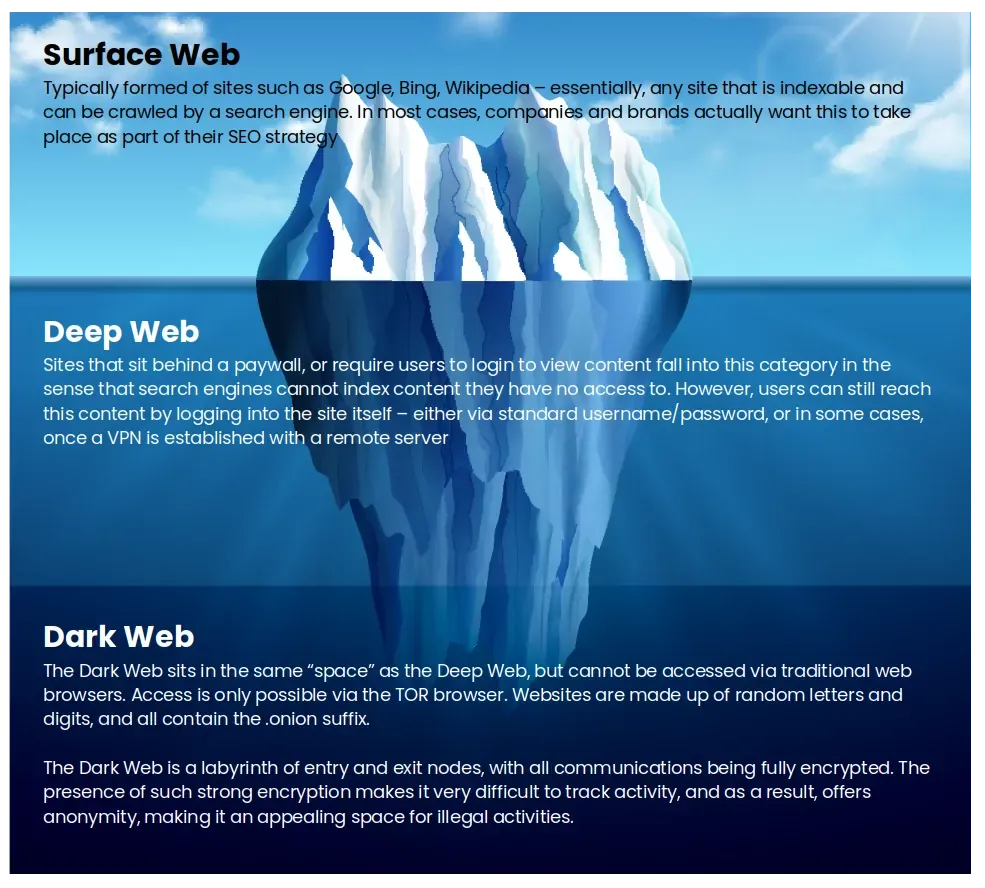

To make this easier to understand (hopefully), I’ve put together the below diagram

The “Surface Web”

OK - with the history lesson out of the way, we’ll get back to the underlying purpose of this article, which is to reveal the three “layers” of the internet. The easiest way to picture them is the “Iceberg Model”: a small visible tip above the waterline, with far more hidden beneath it.

The Surface Web is the “internet” that forms part of our everyday lives: sites that search engines can index and that anyone can reach, such as Google, Bing, Yahoo (to a lesser extent) and Wikipedia (as common examples - there are thousands more).

The “Deep Web”

The next layer is known as the “Deep Web”, which typically consists of content that is not exposed to search engines, meaning it cannot be “crawled” and will not feature in Google searches (in the sense that you cannot follow a direct link without first having to log in). Sites in this category include Netflix, your Amazon or eBay account, PayPal, Google Drive and LinkedIn (essentially, anything that requires a login for you to gain access).
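As a rough illustration of what “not exposed to search engines” can mean in practice, the sketch below uses Python’s standard `urllib.robotparser` module to check URLs against a `robots.txt` file, one common way for a site to ask crawlers to stay out of certain areas. The article doesn’t name this mechanism, so treat it as an assumption; the rules, the `shop.example` URLs, the `ExampleBot` user agent and the `crawlable` helper are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an imaginary shop: account and checkout
# pages are marked as off limits to every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Disallow: /checkout/
"""


def crawlable(url: str, user_agent: str = "ExampleBot") -> bool:
    """Return True if the rules above allow `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://shop.example/products/widget",
        "https://shop.example/account/orders",
    ):
        print(url, "->", "crawlable" if crawlable(url) else "kept out of the index")
```

Bear in mind that `robots.txt` is only a polite request that well-behaved crawlers honour. It’s the login itself that actually keeps Deep Web content private.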

The “Dark Web”

The third layer down is known as the “Dark Web” - and it’s “Dark” for a reason. These are sites that truly live underground and out of reach of most standard internet users. Typically, access is gained via a Tor (The Onion Router - a bit more about that later) enabled browser, with website addresses made up of long, random-looking strings of characters (often changing to avoid detection) and ending in the suffix .onion. If I were asked to describe the Dark Web, I’d describe it as an underground online marketplace where literally anything goes - and I literally mean “anything”.

Examples include:

- Ransomware

- Botnets

- Bitcoin trading

- Hacker services and forums

- Financial fraud

- Illegal pornography

- Terrorism

- Anonymous journalism

- Drug cartels (including online marketplaces for sale and distribution - good examples of this are Silk Road and its successor, Silk Road 2.0)

- Whistleblowing sites

- Information leakage sites (a bit like WikiLeaks, but often containing information that even that site cannot obtain and make freely available)

- Murder for hire (hitmen etc.)
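Going back to the .onion addresses mentioned earlier, they look random because current (version 3) addresses are derived from the service’s public key rather than chosen by hand. The sketch below follows the address format described in the Tor rendezvous specification as I understand it: 32 bytes of public key, a 2-byte checksum and a version byte, base32 encoded into 56 characters. The helper names are made up for this example, and the “key” used in the demo is just placeholder bytes, not a real service.

```python
import base64
import hashlib

ONION_VERSION = b"\x03"  # version byte used by v3 onion addresses


def _checksum(pubkey: bytes) -> bytes:
    """First two bytes of SHA3-256 over a fixed prefix, the key and the version."""
    digest = hashlib.sha3_256(b".onion checksum" + pubkey + ONION_VERSION).digest()
    return digest[:2]


def encode_onion_v3(pubkey: bytes) -> str:
    """Build the .onion hostname for a 32-byte public key (hypothetical helper)."""
    if len(pubkey) != 32:
        raise ValueError("a v3 onion public key is 32 bytes long")
    raw = pubkey + _checksum(pubkey) + ONION_VERSION
    return base64.b32encode(raw).decode("ascii").lower() + ".onion"


def is_valid_onion_v3(hostname: str) -> bool:
    """Check the length, version byte and checksum of a v3 .onion hostname."""
    hostname = hostname.lower()
    if not hostname.endswith(".onion"):
        return False
    label = hostname[: -len(".onion")]
    if len(label) != 56:
        return False
    try:
        raw = base64.b32decode(label.upper())
    except ValueError:  # raised for characters outside the base32 alphabet
        return False
    pubkey, checksum, version = raw[:32], raw[32:34], raw[34:]
    return version == ONION_VERSION and checksum == _checksum(pubkey)


if __name__ == "__main__":
    demo = encode_onion_v3(bytes(range(32)))  # placeholder key, not a real site
    print(demo, is_valid_onion_v3(demo))
```

This only checks that an address is well formed. It says nothing about whether a service actually exists at that address, or what it hosts.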

Takeaway

The Surface, Deep, and Dark Web are in fact interconnected. The purpose of these classifications is to describe where certain activities that take place on the internet fit. While internet activity on the Surface Web is, for the most part, secure, activity in the Deep Web is hidden from view, but not necessarily harmful by nature. It’s very different in the case of the Dark Web. Thanks to its (virtually) anonymous nature, little is known about the true content. Various attempts have been made to “map” the Dark Web, but given that URLs change frequently and there is generally no trail of breadcrumbs leading to the surface, it’s almost impossible to do so.

In summary, the Surface Web is where search engine crawlers go to fetch and index publicly available information. By direct contrast, the Dark Web plays host to an entire range of nefarious activity, and is best avoided for security reasons alone.

@phenomlab some months ago I took a look at the dark web... and I do not want to see it anymore.

It’s really... dark. The content that I’ve seen scared me a lot.

@justoverclock yes, completely understand that. It’s a haven for criminal gangs and literally everything is on the table. Drugs, weapons, money laundering, cyber attacks for rent, and even murder for hire.

Nothing, it seems, is off limits. The dark web is truly a place where the only limitation is the amount you are prepared to spend.
