Mongodb or Redis

-

So I tried several times to get MongoDB running on Arch, but to no avail. I was able to install Redis, set up a NodeBB instance, and even proxy it through Nginx. As expected, NodeBB is just super fast. I like that you don't have to make sure the proper PHP extensions are installed, that the path is entered, and all that jazz. It's nice to install it and have it just work.

I'll keep trying to install MongoDB on Arch. Then I can use both of them with NodeBB.

-

@Madchatthew did you get any specific error message when trying to compile?

Perhaps try this

https://ivansaul.gitbook.io/blog/how-to-install-mongodb-on-arch-linux

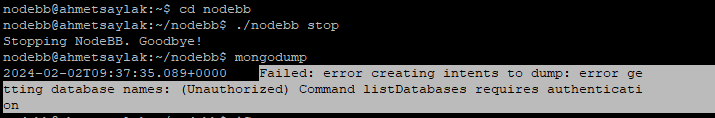

@phenomlab I'm going to install it again here and will post the error I received.

-

I used these instructions to install mongodb

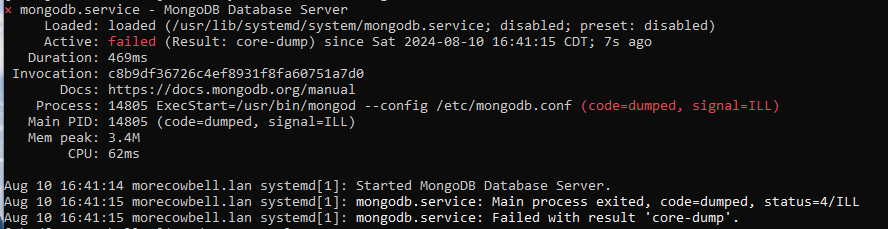

https://www.geeksforgeeks.org/how-to-install-mongodb-on-arch-based-linux-distributionsmanjaro/This is what I see when I use ‘sudo systemctl status mongodb’ after starting the mongodb service

I rebooted the server and got the same result afterward.

Here is the status as text to make it easier to search:

```
× mongodb.service - MongoDB Database Server
     Loaded: loaded (/usr/lib/systemd/system/mongodb.service; disabled; preset: disabled)
     Active: failed (Result: core-dump) since Sat 2024-08-10 16:45:00 CDT; 1s ago
   Duration: 945ms
 Invocation: a849587148c548f6a89a72bc53aacb48
       Docs: https://docs.mongodb.org/manual
    Process: 704 ExecStart=/usr/bin/mongod --config /etc/mongodb.conf (code=dumped, signal=ILL)
   Main PID: 704 (code=dumped, signal=ILL)
   Mem peak: 56.5M
        CPU: 148ms

Aug 10 16:44:59 morecowbell.lan systemd[1]: Started MongoDB Database Server.
Aug 10 16:45:00 morecowbell.lan systemd[1]: mongodb.service: Main process exited, code=dumped, status=4/ILL
Aug 10 16:45:00 morecowbell.lan systemd[1]: mongodb.service: Failed with result 'core-dump'.
```

-

Process: 704 ExecStart=/usr/bin/mongod --config /etc/mongodb.conf (code=dumped, %(#e31616)[signal=ILL])

After doing some searching, I think the `signal=ILL` in the highlighted text means that it isn't compatible with the OS.

@Madchatthew not sure about the incompatibility, but did find this

-

@phenomlab Yeah, I found this one too, and it says the same thing. When I run the command to check for AVX, it doesn't show anything. Also, my computer is 10 years old.
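For context: MongoDB 5.0 and later require the AVX instruction set on x86_64. When the CPU (or a VM that hides AVX from its guest) lacks it, `mongod` hits an instruction it cannot execute and is killed with SIGILL, which is what `signal=ILL` / `status=4/ILL` in the status output reports. The check for AVX can be sketched in Python as below, assuming a Linux x86 machine whose `/proc/cpuinfo` has a `flags` line; the function names are illustrative, not part of any tool mentioned in this thread.

```python
from pathlib import Path

CPUINFO = Path("/proc/cpuinfo")  # Linux-only; ARM systems label this line "Features" instead of "flags"


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def has_avx(cpuinfo_text: str) -> bool:
    """True if the CPU, as the OS or VM sees it, advertises AVX."""
    return "avx" in cpu_flags(cpuinfo_text)


if __name__ == "__main__" and CPUINFO.exists():
    if has_avx(CPUINFO.read_text()):
        print("AVX is available")
    else:
        print("AVX not reported: MongoDB 5.0+ is likely to crash with SIGILL")
```

Matching the exact token `avx` (rather than a substring search) avoids being fooled by related flags such as `avx512f` on a CPU that somehow reports only those.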

-

I was able to figure it out with the help of the Stack Overflow post linked below. After following its instructions, MongoDB started and AVX showed up in the CPU info. I have this set up in VirtualBox, so hopefully this wouldn't be an issue on a hosted server, but if anyone else runs across this, I hope it helps.

To summarize the instructions:

1. Open a Command Prompt on the Windows host as Administrator: find the Command Prompt icon, right-click it, and choose Run As Administrator.
2. Disable the hypervisor launch: `bcdedit /set hypervisorlaunchtype off`
3. Disable Microsoft Hyper-V: `DISM /Online /Disable-Feature:Microsoft-Hyper-V`
4. Do a quick shutdown: `shutdown -s -t 2`
5. Wait a few seconds before turning the machine back on.

-

Now the next task will be to migrate the data from Redis to MongoDB and set up the multi-process scaling that @phenomlab has set up for this site.

-

@phenomlab how do you have this site set up to start automatically when the server reboots? I tried following NodeBB's instructions, but I can't get the systemd nodebb.service unit to work. I've also tried a few others I found on Google, and it just doesn't seem to want to run as a service.

-

@Madchatthew can you post the configuration you have for the service?

-

This is the Nginx conf file:

```nginx
upstream io_nodes {
    ip_hash;
    server 127.0.0.1:4567;
    server 127.0.0.1:4568;
    server 127.0.0.1:4569;
}

server {
    listen 80;

    server_name nodebb.lan;
    root /home/nodebb/public_html;
    index index.php index.html index.htm;

    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
    proxy_set_header Host $http_host;
    proxy_set_header X-NginX-Proxy true;
    proxy_redirect off;

    # Socket.IO Support
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "upgrade";

    location @nodebb {
        proxy_pass http://io_nodes;
    }

    location ~ ^/assets/(.*) {
        root /home/nodebb/public_html/;
        try_files /build/public/$1 /public/$1 @nodebb;
    }

    location / {
        proxy_pass http://io_nodes;
    }
}
```

This is the current nodebb.service file:

```ini
[Unit]
Description=NodeBB forum for Node.js.
Documentation=http://nodebb.readthedocs.io/en/latest/
After=system.slice multi-user.target

[Service]
Type=simple
User=nodebb

StandardOutput=syslog
StandardError=syslog
SyslogIdentifier=nodebb

Environment=NODE_ENV=production
WorkingDirectory=/home/nodebb/public_html/
# Call with exec to be able to redirect output: http://stackoverflow.com/a/33036589/1827734
ExecStart=/usr/bin/node /home/nodebb/public_html/loader.js
Restart=on-failure

[Install]
Alias=forum
WantedBy=multi-user.target
```

The unit above is from a Google search. I started with the one below, and I also tried it with the correct path for node.

```ini
[Unit]
Description=NodeBB
Documentation=https://docs.nodebb.org
After=system.slice multi-user.target mongod.service

[Service]
Type=forking
User=nodebb

WorkingDirectory=/path/to/nodebb
PIDFile=/path/to/nodebb/pidfile
ExecStart=/usr/bin/env node loader.js --no-silent
Restart=always

[Install]
WantedBy=multi-user.target
```

The `/usr/bin/env node` part is where a command showed me node is located.

I also get a 502 Bad Gateway when I start it with either `./nodebb start` or `node loader.js`. When I run `sudo systemctl status nodebb` after `sudo systemctl start nodebb`, I get the following:

```
○ nodebb.service - NodeBB forum for Node.js.
     Loaded: loaded (/etc/systemd/system/nodebb.service; disabled; preset: disabled)
     Active: inactive (dead)
       Docs: http://nodebb.readthedocs.io/en/latest/

Aug 11 20:53:16 morecowbell.lan systemd[1]: /etc/systemd/system/nodebb.service:10: Standard output type syslog is obsolete, automatically updating to journal. Please update your unit file, and consider removing the setting altogether.
Aug 11 20:53:16 morecowbell.lan systemd[1]: /etc/systemd/system/nodebb.service:11: Standard output type syslog is obsolete, automatically updating to journal. Please update your unit file, and consider removing the setting altogether.
Aug 11 20:53:16 morecowbell.lan systemd[1]: Started NodeBB forum for Node.js..
Aug 11 20:53:16 morecowbell.lan nodebb[2605]: Process "1298" from pidfile already running, exiting
Aug 11 20:53:16 morecowbell.lan systemd[1]: nodebb.service: Deactivated successfully.
Aug 11 20:53:24 morecowbell.lan systemd[1]: /etc/systemd/system/nodebb.service:10: Standard output type syslog is obsolete, automatically updating to journal. Please update your unit file, and consider removing the setting altogether.
Aug 11 20:53:24 morecowbell.lan systemd[1]: /etc/systemd/system/nodebb.service:11: Standard output type syslog is obsolete, automatically updating to journal. Please update your unit file, and consider removing the setting altogether.
```

Sorry this post is so long, and thank you for your help.
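The telling line in that status output is `Process "1298" from pidfile already running, exiting`: loader.js appears to have found a pidfile whose PID was still alive (most likely an instance started earlier by hand), so the copy that systemd launched exited immediately and the unit went inactive. The same check can be sketched in Python, assuming a pidfile that holds a single numeric PID; `pidfile_process_alive` is a hypothetical helper, not part of NodeBB.

```python
import os
from pathlib import Path
from typing import Optional


def pidfile_process_alive(pidfile: Path) -> Optional[bool]:
    """None if there is no pidfile, True if its PID is a live process, False if it is stale."""
    try:
        pid = int(pidfile.read_text().strip())
    except FileNotFoundError:
        return None
    except ValueError:
        return False  # garbage in the pidfile: treat it as stale
    if pid <= 0:
        return False  # 0 and negative values address process groups, not a single PID
    try:
        os.kill(pid, 0)  # signal 0 sends nothing; it only checks that the PID exists
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True
```

Called with a hypothetical path such as `Path("/home/nodebb/public_html/pidfile")`, a `True` result suggests stopping the old instance (for example with `./nodebb stop`) before starting the service.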

Update: I just checked the Nginx status and got the output below. 192.168.1.10 is my Windows PC, and the connection was refused. Would I need to add the port to ufw for this to work, or is this a different issue?

```
Aug 11 20:49:54 morecowbell.lan nginx[576]: 2024/08/11 20:49:54 [error] 576#576: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:4568/", host: "nodebb.lan"
Aug 11 20:49:54 morecowbell.lan nginx[576]: 2024/08/11 20:49:54 [error] 576#576: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:4569/", host: "nodebb.lan"
Aug 11 20:49:54 morecowbell.lan nginx[576]: 2024/08/11 20:49:54 [error] 576#576: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:4567/", host: "nodebb.lan"
Aug 11 20:49:55 morecowbell.lan nginx[576]: 2024/08/11 20:49:55 [error] 576#576: *1 no live upstreams while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET /favicon.ico HTTP/1.1", upstream: "http://io_nodes/favicon.ico", host: "nodebb.lan", referrer: "http://nodebb.lan/"
Aug 11 20:51:57 morecowbell.lan nginx[577]: 2024/08/11 20:51:57 [error] 577#577: *6 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:4568/", host: "nodebb.lan"
Aug 11 20:51:57 morecowbell.lan nginx[577]: 2024/08/11 20:51:57 [error] 577#577: *6 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:4569/", host: "nodebb.lan"
Aug 11 20:51:57 morecowbell.lan nginx[577]: 2024/08/11 20:51:57 [error] 577#577: *6 connect() failed (111: Connection refused) while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET / HTTP/1.1", upstream: "http://127.0.0.1:4567/", host: "nodebb.lan"
Aug 11 20:51:57 morecowbell.lan nginx[577]: 2024/08/11 20:51:57 [error] 577#577: *6 no live upstreams while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET /favicon.ico HTTP/1.1", upstream: "http://io_nodes/favicon.ico", host: "nodebb.lan", referrer: "http://nodebb.lan/"
Aug 11 20:51:59 morecowbell.lan nginx[577]: 2024/08/11 20:51:59 [error] 577#577: *6 no live upstreams while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET / HTTP/1.1", upstream: "http://io_nodes/", host: "nodebb.lan"
Aug 11 20:51:59 morecowbell.lan nginx[577]: 2024/08/11 20:51:59 [error] 577#577: *6 no live upstreams while connecting to upstream, client: 192.168.1.10, server: nodebb.lan, request: "GET /favicon.ico HTTP/1.1", upstream: "http://io_nodes/favicon.ico", host: "nodebb.lan", referrer: "http://nodebb.lan/"
```

Update: adding those ports to ufw did not fix it.
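Each `connect() failed (111: Connection refused)` line names the upstream nginx tried to reach; once every server in `io_nodes` has failed, nginx treats the group as unavailable for a short while and logs `no live upstreams` instead. A small Python sketch that tallies the refused upstreams from log text like the above (the regex is an assumption based on these lines, not an official nginx log format specification):

```python
import re
from collections import Counter

# Captures the upstream URL from nginx "connect() failed (111: Connection refused)" error lines.
REFUSED = re.compile(r'connect\(\) failed \(111: Connection refused\).*?upstream: "([^"]+)"')


def refused_upstreams(log_text: str) -> Counter:
    """Count how many times each upstream URL refused a connection."""
    return Counter(REFUSED.findall(log_text))
```

For example, you could feed it the text of `journalctl -u nginx --no-pager`; if all three NodeBB ports show up, no NodeBB process was listening at the time, which points away from the firewall.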

-

@Madchatthew connection refused typically means that port is being used elsewhere.
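Since nginx reaches NodeBB over 127.0.0.1, firewall rules for outside clients shouldn't normally affect these upstream connections; what matters is whether a NodeBB process is accepting connections on each port. A minimal Python sketch, assuming it runs on the NodeBB server itself, probes the three upstream ports; errno 111 (`Connection refused`) means nothing was listening on that port at that moment. `port_accepts` is a hypothetical helper name.

```python
import socket


def port_accepts(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something accepts a TCP connection on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers ConnectionRefusedError (errno 111) and timeouts
        return False


if __name__ == "__main__":
    # Ports copied from the io_nodes upstream block in the nginx config in this thread.
    for port in (4567, 4568, 4569):
        state = "listening" if port_accepts("127.0.0.1", port) else "refused/unreachable"
        print(f"127.0.0.1:{port} -> {state}")
```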

What's the output of `./nodebb log`?

-

```
[nodebb@morecowbell public_html]$ ./nodebb log
Hit Ctrl-C to exit
tail: cannot open './logs/output.log' for reading: No such file or directory
```

-

@Madchatthew hmm. That doesn't look like it's properly installed, or you have a permissions issue. What user are you launching NodeBB as?

Can you try `./nodebb dev`?

-

I will give this a try this evening. At work now.

-

@Madchatthew thanks. Let me know.

-

@phenomlab here are those results. It's a little long, but I wanted to make sure you get the whole picture.

```
[nodebb@morecowbell public_html]$ ./nodebb dev

NodeBB v3.8.4 Copyright (C) 2013-2024 NodeBB Inc.
This program comes with ABSOLUTELY NO WARRANTY.
This is free software, and you are welcome to redistribute it under certain conditions.
For the full license, please visit: http://www.gnu.org/copyleft/gpl.html

Clustering enabled: Spinning up 3 process(es).

2024-08-13T01:03:42.527Z [4568/7624] - verbose: * using configuration stored in: /home/nodebb/public_html/config.json
2024-08-13T01:03:42.539Z [4567/7623] - verbose: * using configuration stored in: /home/nodebb/public_html/config.json
2024-08-13T01:03:42.547Z [4567/7623] - info: Initializing NodeBB v3.8.4 http://localhost:4567
2024-08-13T01:03:42.548Z [4567/7623] - verbose: * using mongo store at 127.0.0.1:27017
2024-08-13T01:03:42.548Z [4567/7623] - verbose: * using themes stored in: /home/nodebb/public_html/node_modules
2024-08-13T01:03:42.556Z [4569/7625] - verbose: * using configuration stored in: /home/nodebb/public_html/config.json
2024-08-13T01:03:42.961Z [4568/7624] - error: ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
2024-08-13T01:03:42.966Z [4568/7624] - error: uncaughtException: NOAUTH Authentication required.
ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
{"date":"Mon Aug 12 2024 20:03:42 GMT-0500 (Central Daylight Time)","error":{"command":{"args":[],"name":"info"}},"exception":true,"os":{"loadavg":[0.12,0.1,0.08],"uptime":84039.1},"process":{"argv":["/usr/bin/node","/home/nodebb/public_html/app.js"],"cwd":"/home/nodebb/public_html","execPath":"/usr/bin/node","gid":1006,"memoryUsage":{"arrayBuffers":18535346,"external":20792656,"heapTotal":55267328,"heapUsed":25627808,"rss":141299712},"pid":7624,"uid":1006,"version":"v22.6.0"},"stack":"ReplyError: NOAUTH Authentication required.\n at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)\n at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)","trace":[{"column":12,"file":"/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js","function":"parseError","line":179,"method":null,"native":false},{"column":14,"file":"/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js","function":"parseType","line":302,"method":null,"native":false}]}
2024-08-13T01:03:42.967Z [4568/7624] - error: ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
2024-08-13T01:03:42.970Z [4567/7623] - error: ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
2024-08-13T01:03:42.973Z [4569/7625] - error: ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
2024-08-13T01:03:42.974Z [4567/7623] - error: uncaughtException: NOAUTH Authentication required.
ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
{"date":"Mon Aug 12 2024 20:03:42 GMT-0500 (Central Daylight Time)","error":{"command":{"args":[],"name":"info"}},"exception":true,"os":{"loadavg":[0.12,0.1,0.08],"uptime":84039.11},"process":{"argv":["/usr/bin/node","/home/nodebb/public_html/app.js"],"cwd":"/home/nodebb/public_html","execPath":"/usr/bin/node","gid":1006,"memoryUsage":{"arrayBuffers":18535345,"external":20792655,"heapTotal":55267328,"heapUsed":25973952,"rss":142553088},"pid":7623,"uid":1006,"version":"v22.6.0"},"stack":"ReplyError: NOAUTH Authentication required.\n at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)\n at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)","trace":[{"column":12,"file":"/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js","function":"parseError","line":179,"method":null,"native":false},{"column":14,"file":"/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js","function":"parseType","line":302,"method":null,"native":false}]}
2024-08-13T01:03:42.975Z [4567/7623] - error: ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
2024-08-13T01:03:42.978Z [4569/7625] - error: uncaughtException: NOAUTH Authentication required.
ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
{"date":"Mon Aug 12 2024 20:03:42 GMT-0500 (Central Daylight Time)","error":{"command":{"args":[],"name":"info"}},"exception":true,"os":{"loadavg":[0.12,0.1,0.08],"uptime":84039.12},"process":{"argv":["/usr/bin/node","/home/nodebb/public_html/app.js"],"cwd":"/home/nodebb/public_html","execPath":"/usr/bin/node","gid":1006,"memoryUsage":{"arrayBuffers":18535345,"external":20792655,"heapTotal":55005184,"heapUsed":25626160,"rss":141709312},"pid":7625,"uid":1006,"version":"v22.6.0"},"stack":"ReplyError: NOAUTH Authentication required.\n at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)\n at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)","trace":[{"column":12,"file":"/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js","function":"parseError","line":179,"method":null,"native":false},{"column":14,"file":"/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js","function":"parseType","line":302,"method":null,"native":false}]}
2024-08-13T01:03:42.980Z [4569/7625] - error: ReplyError: NOAUTH Authentication required.
    at parseError (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:179:12)
    at parseType (/home/nodebb/public_html/node_modules/redis-parser/lib/parser.js:302:14)
2024-08-13T01:03:44.687Z [4567/7623] - verbose: [minifier] utilizing a maximum of 2 additional threads
2024-08-13T01:03:44.707Z [4569/7625] - verbose: [minifier] utilizing a maximum of 2 additional threads
2024-08-13T01:03:44.712Z [4567/7623] - info: [app] Shutdown (SIGTERM/SIGINT/SIGQUIT) Initialised.
2024-08-13T01:03:44.720Z [4569/7625] - info: [app] Shutdown (SIGTERM/SIGINT/SIGQUIT) Initialised.
2024-08-13T01:03:44.767Z [4568/7624] - verbose: [minifier] utilizing a maximum of 2 additional threads
2024-08-13T01:03:44.780Z [4568/7624] - info: [app] Shutdown (SIGTERM/SIGINT/SIGQUIT) Initialised.
(node:7625) [DEP0040] DeprecationWarning: The `punycode` module is deprecated. Please use a userland alternative instead.
(Use `node --trace-deprecation ...` to show where the warning was created)
2024-08-13T01:03:45.068Z [4569/7625] - error: Error [ERR_SERVER_NOT_RUNNING]: Server is not running.
    at Server.close (node:net:2278:12)
    at Object.onceWrapper (node:events:634:28)
    at Server.emit (node:events:520:28)
    at emitCloseNT (node:net:2338:8)
    at process.processTicksAndRejections (node:internal/process/task_queues:81:21)
(node:7623) [DEP0040] DeprecationWarning: The `punycode` module is deprecated. Please use a userland alternative instead.
(Use `node --trace-deprecation ...` to show where the warning was created)
2024-08-13T01:03:45.073Z [4567/7623] - error: Error [ERR_SERVER_NOT_RUNNING]: Server is not running.
    at Server.close (node:net:2278:12)
    at Object.onceWrapper (node:events:634:28)
    at Server.emit (node:events:520:28)
    at emitCloseNT (node:net:2338:8)
    at process.processTicksAndRejections (node:internal/process/task_queues:81:21)
[cluster] Child Process (7625) has exited (code: 1, signal: null)
[cluster] Spinning up another process...
[cluster] Child Process (7623) has exited (code: 1, signal: null)
[cluster] Spinning up another process...
(node:7624) [DEP0040] DeprecationWarning: The `punycode` module is deprecated. Please use a userland alternative instead.
(Use `node --trace-deprecation ...` to show where the warning was created)
2024-08-13T01:03:45.129Z [4568/7624] - error: Error [ERR_SERVER_NOT_RUNNING]: Server is not running.
    at Server.close (node:net:2278:12)
    at Object.onceWrapper (node:events:634:28)
    at Server.emit (node:events:520:28)
    at emitCloseNT (node:net:2338:8)
    at process.processTicksAndRejections (node:internal/process/task_queues:81:21)
[cluster] Child Process (7624) has exited (code: 1, signal: null)
[cluster] Spinning up another process...
```

-

Woot, I figured it out, man!!! First off, I forgot to put the Redis password in config.json. Then I had trouble connecting to MongoDB. I was googling and changing the config, and finally ran `sudo systemctl status mongodb` only to find out it wasn't even running. So I enabled it, started it, and boom: `./nodebb dev` started right up.
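The repeated `ReplyError: NOAUTH Authentication required.` in the log is what a Redis server with a password configured returns to a client that hasn't authenticated, which fits a `redis` section in config.json with no password. A quick sanity check can be sketched in Python, assuming NodeBB-style `redis` and `mongo` sections that carry a `password` key; `missing_passwords` and the example values are hypothetical.

```python
import json


def missing_passwords(config: dict) -> list[str]:
    """Return the database sections ("redis", "mongo") present in config but lacking a password."""
    problems = []
    for store in ("redis", "mongo"):
        section = config.get(store)
        if isinstance(section, dict) and not section.get("password"):
            problems.append(store)
    return problems


if __name__ == "__main__":
    # Hypothetical config.json contents; the real file here is /home/nodebb/public_html/config.json.
    example = json.loads("""
    {
      "url": "http://nodebb.lan",
      "database": "mongo",
      "port": ["4567", "4568", "4569"],
      "mongo": {"host": "127.0.0.1", "port": "27017", "username": "nodebb",
                "password": "change-me", "database": "nodebb"},
      "redis": {"host": "127.0.0.1", "port": "6379", "database": "0"}
    }
    """)
    print(missing_passwords(example))  # ['redis']
```

An empty list only means a password is present, not that it is correct, so the log is still the final word.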

Then I went back and changed my nodebb.service file to match what NodeBB has on their site, and BOOOM, it's working. With the scaling setup, NodeBB runs even faster and I don't get the 503 error when refreshing.

I have learned a lot by going through all of this, and it is pretty awesome! Now that this is done, I have some more testing to do, but I am many steps closer to using Arch as my production server.

-

@Madchatthew Excellent! I looked at the error message above, and it does seem that your MongoDB configuration has been set to require authentication (which is correct), but it seems the password was missing.

-

@phenomlab yeah, I looked through the error log more carefully and found the Redis auth error first; once that was cleared up, I found the MongoDB auth error. I probably should have figured that one out first, but oh well hahah
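With a log as noisy as the `./nodebb dev` output above, it can help to list each distinct error message once, in the order it first appeared. A rough Python sketch, assuming the `<timestamp>Z [<port>/<pid>] - <level>: <message>` line shape seen in that output (not a documented NodeBB format); `distinct_errors` is a hypothetical helper.

```python
import re

# NodeBB console lines in this thread look like: "<ISO timestamp>Z [<port>/<pid>] - <level>: <message>"
LOG_LINE = re.compile(r"^\S+Z \[(\d+)/(\d+)\] - (\w+): (.*)$")


def distinct_errors(log_text: str) -> list[str]:
    """Return each distinct error message once, in order of first appearance."""
    seen: dict[str, None] = {}  # dicts keep insertion order, so this doubles as an ordered set
    for line in log_text.splitlines():
        match = LOG_LINE.match(line.strip())
        if match and match.group(3) == "error":
            seen.setdefault(match.group(4), None)
    return list(seen)
```

Capturing the output first (for example `./nodebb dev 2>&1 | tee dev.log`) and passing the file's text in would surface the Redis `NOAUTH` error ahead of the follow-on `ERR_SERVER_NOT_RUNNING` errors.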
