Google slow to crawl website (Search Console)

-

There are times when Google can be remarkably slow in crawling your site - particularly if you previously had issues and need to tell Google you've fixed them.

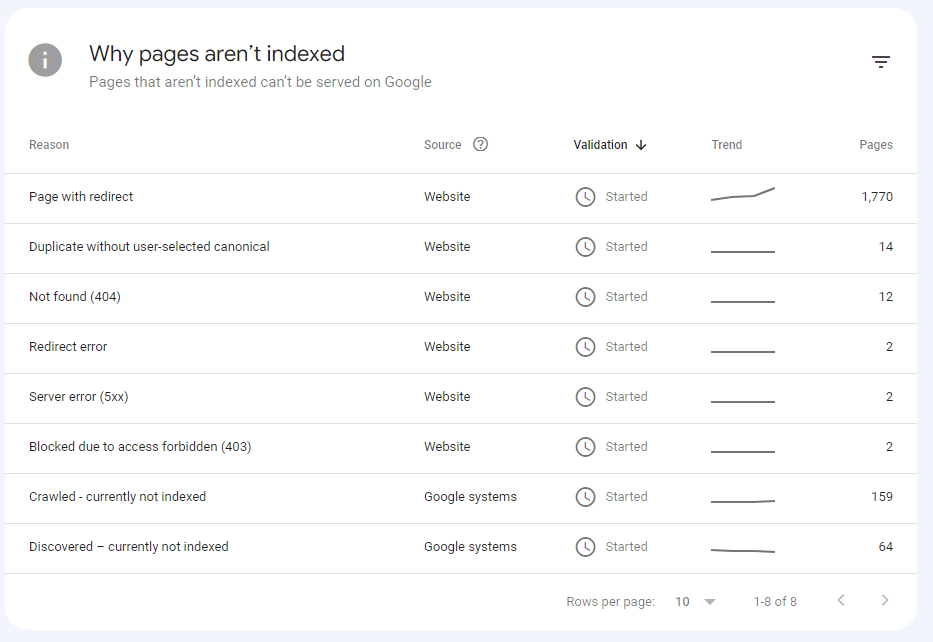

You might see something like this when requesting validation.

These have all been fixed, but to “speed things up”, you can:

- Remove and re-submit the sitemap.xml file

- Send a ping to Google to ask them to crawl again (see below)

http://www.google.com/webmasters/sitemaps/ping?sitemap=http://example.com/sitemap.xml

Some more useful tips can be found here

Obviously, you’d substitute the `sitemap=` part to match your own site.
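If you'd rather build that ping URL in a script than by hand, here is a minimal Python sketch. The function name `build_ping_url` is just illustrative, and the sitemap address is URL-encoded, which is the safer form when it is passed as a query parameter. As far as I'm aware, Google has since announced the deprecation of this ping endpoint, so treat this as an illustration of the URL format rather than a guaranteed way to trigger a crawl.

```python
from urllib.parse import urlencode

# Endpoint taken from the ping URL quoted in the post above.
PING_ENDPOINT = "http://www.google.com/webmasters/sitemaps/ping"


def build_ping_url(sitemap_url: str) -> str:
    """Return the ping URL for a sitemap (hypothetical helper name).

    urlencode() percent-encodes the sitemap address so that characters
    such as ':' and '/' are safe inside the query string.
    """
    return f"{PING_ENDPOINT}?{urlencode({'sitemap': sitemap_url})}"


print(build_ping_url("http://example.com/sitemap.xml"))
# http://www.google.com/webmasters/sitemaps/ping?sitemap=http%3A%2F%2Fexample.com%2Fsitemap.xml
```

You could then open the resulting URL in a browser or fetch it with any HTTP client.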

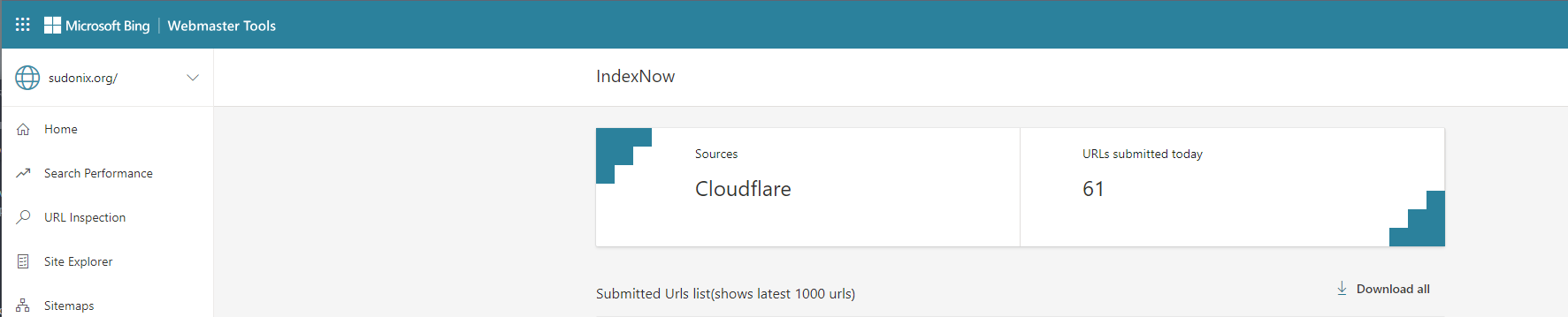

It just goes to show that despite being the behemoth that Google is, its crawl rate is ridiculously slow. Bing and Yandex have adopted “IndexNow” - see the article below, which is well worth a read in itself.

DuckDuckGo are reportedly still considering adopting IndexNow, but given they pull from over 400 other sources (Bing and Yandex among them), their content also updates much more quickly (provided you are using Bing and Yandex Webmaster Tools, which I’d recommend) - see the example below.

And so, Google, my point is this. You’re the largest player on this planet, yet also the slowest at crawling sites? Come on!

Evidently, Google have been testing IndexNow since 2021, but are yet to adopt it.
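For anyone wondering what an IndexNow submission looks like, here is a rough Python sketch that builds the single-URL notification. It assumes the shared `api.indexnow.org` endpoint and its `url` and `key` query parameters; the helper name `build_indexnow_url`, the page address and the key value are placeholders of mine, and you would need to host your own key file on the site for the submission to be accepted.

```python
from urllib.parse import urlencode

# Shared IndexNow endpoint (assumption: participating engines also
# expose their own host-specific endpoints).
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_url(page_url: str, key: str) -> str:
    """Return the GET URL that reports one changed page.

    page_url: the page that was added, updated or removed.
    key:      your IndexNow key (placeholder value in the example below).
    """
    query = urlencode({"url": page_url, "key": key})
    return f"{INDEXNOW_ENDPOINT}?{query}"


# Placeholder page and key, purely for illustration.
print(build_indexnow_url("https://example.com/new-post", "abc123"))
```

Requesting that URL is all a single-page submission amounts to, which is a large part of why the engines that support it pick up changes so quickly.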

-

It seems that in order for Google to crawl your site, you must include a forward slash at the end of the domain name when you add it to Search Console (if you are adding it as the site itself, i.e. a URL-prefix property, and not at domain level) - who knew? That isn’t documented anywhere!
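If you add sites from a script or a checklist, a tiny helper like the one below makes sure the bare domain always carries that trailing slash. This is only a sketch of the normalisation described above; `with_trailing_slash` is a made-up name, and it deliberately leaves URLs that already have a path untouched.

```python
from urllib.parse import urlsplit, urlunsplit


def with_trailing_slash(site_url: str) -> str:
    """Append '/' to a bare domain URL; leave any existing path alone."""
    parts = urlsplit(site_url)
    # An empty path means the URL ends at the domain name.
    path = parts.path or "/"
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))


print(with_trailing_slash("https://example.com"))   # https://example.com/
print(with_trailing_slash("https://example.com/"))  # https://example.com/
```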

-

I don’t know

-

@DownPW It’s a completely new one on me also! You don’t have to do this with Bing or Yandex

-

Seems like Google is finally crawling this site. And, “crawling” in the sense that it’s still extremely slow …
