MongoDB backup script

-

For a while now, I’ve been backing up MongoDB using a basic (and extremely simple) shell script. However, this did not allow for multiple backup sets, retention, purging of unwanted data etc., so I needed something a little more robust.

Deciding not to reinvent the wheel, I searched around for an existing script, and found this

i’ve set this up on another production server I use at work, and it’s brilliant. It does exactly what it says on the tin

Would probably be very useful for others in the same boat.

Would probably be very useful for others in the same boat.EDIT - seems someone else forked this and made several changes / revisions

https://raw.githubusercontent.com/micahwedemeyer/automongobackup/master/src/automongobackup.sh -

undefined phenomlab marked this topic as a regular topic on 24 Nov 2022, 10:03

-

Seems there are others too. I’ll list these here.

-

Seems there are others too. I’ll list these here.

-

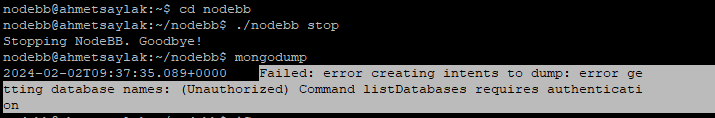

After playing with the original code in the first post, I’ve modified it a little to suit my needs - posting it here in it’s full glory in case it’s of use for anyone else. Obviously, you’ll need to change the important parts

#!/bin/bash #set -eo pipefail # # MongoDB Backup Script # VER. 0.20 # More Info: http://github.com/micahwedemeyer/automongobackup # Note, this is a lobotomized port of AutoMySQLBackup # (http://sourceforge.net/projects/automysqlbackup/) for use with # MongoDB. # # This program is free software; you can redistribute it and/or modify # it under the terms of the GNU General Public License as published by # the Free Software Foundation; either version 2 of the License, or # (at your option) any later version. # # This program is distributed in the hope that it will be useful, # but WITHOUT ANY WARRANTY; without even the implied warranty of # MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the # GNU General Public License for more details. # # You should have received a copy of the GNU General Public License # along with this program; if not, write to the Free Software # Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA # #===================================================================== #===================================================================== # Set the following variables to your system needs # (Detailed instructions below variables) #===================================================================== # Database name to specify a specific database only e.g. myawesomeapp # Unnecessary if backup all databases # DBNAME="" # Collections name list to include e.g. system.profile users # DBNAME is required # Unecessary if backup all collections # COLLECTIONS="" # Collections to exclude e.g. system.profile users # DBNAME is required # Unecessary if backup all collections # EXCLUDE_COLLECTIONS="" # Username to access the mongo server e.g. dbuser # Unnecessary if authentication is off DBUSERNAME="" # Password to access the mongo server e.g. password # Unnecessary if authentication is off DBPASSWORD="" # Database for authentication to the mongo server e.g. admin # Unnecessary if authentication is off DBAUTHDB="admin" # Host name (or IP address) of mongo server e.g localhost DBHOST="127.0.0.1" # Port that mongo is listening on DBPORT="27017" # Backup directory location e.g /backups BACKUPDIR="" # Mail setup # What would you like to be mailed to you? # - log : send only log file # - files : send log file and sql files as attachments (see docs) # - stdout : will simply output the log to the screen if run manually. # - quiet : Only send logs if an error occurs to the MAILADDR. MAILCONTENT="log" # Set the maximum allowed email size in k. (4000 = approx 5MB email [see docs]) export MAXATTSIZE="4000" # Email Address to send mail to? (user@domain.com) MAILADDR="" # ============================================================================ # === SCHEDULING AND RETENTION OPTIONS ( Read the doc's below for details )=== #============================================================================= # Do you want to do hourly backups? How long do you want to keep them? DOHOURLY="no" HOURLYRETENTION=24 # Do you want to do daily backups? How long do you want to keep them? DODAILY="yes" DAILYRETENTION=7 # Which day do you want weekly backups? (1 to 7 where 1 is Monday) DOWEEKLY="no" WEEKLYDAY=6 WEEKLYRETENTION=4 # Do you want monthly backups? How long do you want to keep them? DOMONTHLY="no" MONTHLYRETENTION=4 # ============================================================ # === ADVANCED OPTIONS ( Read the doc's below for details )=== #============================================================= # Choose Compression type. (gzip or bzip2) COMP="gzip" # Choose if the uncompressed folder should be deleted after compression has completed CLEANUP="yes" # Additionally keep a copy of the most recent backup in a seperate directory. LATEST="yes" # Make Hardlink not a copy LATESTLINK="yes" # Use oplog for point-in-time snapshotting. OPLOG="no" # Choose other Server if is Replica-Set Master REPLICAONSLAVE="no" # Allow DBUSERNAME without DBAUTHDB REQUIREDBAUTHDB="yes" # Maximum files of a single backup used by split - leave empty if no split required # MAXFILESIZE="" # Command to run before backups (uncomment to use) # PREBACKUP="" # Command run after backups (uncomment to use) # POSTBACKUP="" #===================================================================== # Options documentation #===================================================================== # Set DBUSERNAME and DBPASSWORD of a user that has at least SELECT permission # to ALL databases. # # Set the DBHOST option to the server you wish to backup, leave the # default to backup "this server".(to backup multiple servers make # copies of this file and set the options for that server) # # You can change the backup storage location from /backups to anything # you like by using the BACKUPDIR setting.. # # The MAILCONTENT and MAILADDR options and pretty self explanatory, use # these to have the backup log mailed to you at any email address or multiple # email addresses in a space seperated list. # # (If you set mail content to "log" you will require access to the "mail" program # on your server. If you set this to "files" you will have to have mutt installed # on your server. If you set it to "stdout" it will log to the screen if run from # the console or to the cron job owner if run through cron. If you set it to "quiet" # logs will only be mailed if there are errors reported. ) # # # Finally copy automongobackup.sh to anywhere on your server and make sure # to set executable permission. You can also copy the script to # /etc/cron.daily to have it execute automatically every night or simply # place a symlink in /etc/cron.daily to the file if you wish to keep it # somwhere else. # # NOTE: On Debian copy the file with no extention for it to be run # by cron e.g just name the file "automongobackup" # # Thats it.. # # # === Advanced options === # # To set the day of the week that you would like the weekly backup to happen # set the WEEKLYDAY setting, this can be a value from 1 to 7 where 1 is Monday, # The default is 6 which means that weekly backups are done on a Saturday. # # Use PREBACKUP and POSTBACKUP to specify Pre and Post backup commands # or scripts to perform tasks either before or after the backup process. # # #===================================================================== # Backup Rotation.. #===================================================================== # # Hourly backups are executed if DOHOURLY is set to "yes". # The number of hours backup copies to keep for each day (i.e. 'Monday', 'Tuesday', etc.) is set with DHOURLYRETENTION. # DHOURLYRETENTION=0 rotates hourly backups every day (i.e. only the most recent hourly copy is kept). -1 disables rotation. # # Daily backups are executed if DODAILY is set to "yes". # The number of daily backup copies to keep for each day (i.e. 'Monday', 'Tuesday', etc.) is set with DAILYRETENTION. # DAILYRETENTION=0 rotates daily backups every week (i.e. only the most recent daily copy is kept). -1 disables rotation. # # Weekly backups are executed if DOWEEKLY is set to "yes". # WEEKLYDAY [1-7] sets which day a weekly backup occurs when cron.daily scripts are run. # Rotate weekly copies after the number of weeks set by WEEKLYRETENTION. # WEEKLYRETENTION=0 rotates weekly backups every week. -1 disables rotation. # # Monthly backups are executed if DOMONTHLY is set to "yes". # Monthy backups occur on the first day of each month when cron.daily scripts are run. # Rotate monthly backups after the number of months set by MONTHLYRETENTION. # MONTHLYRETENTION=0 rotates monthly backups upon each execution. -1 disables rotation. # #===================================================================== # Please Note!! #===================================================================== # # I take no resposibility for any data loss or corruption when using # this script. # # This script will not help in the event of a hard drive crash. You # should copy your backups offline or to another PC for best protection. # # Happy backing up! # #===================================================================== # Restoring #===================================================================== # ??? # #===================================================================== # Change Log #===================================================================== # VER 0.11 - (2016-05-04) (author: Claudio Prato) # - Fixed bugs in select_secondary_member() with authdb enabled # - Fixed bugs in Compression function by removing the * symbol # - Added incremental backup feature # - Added option to select the collections to backup # # VER 0.10 - (2015-06-22) (author: Markus Graf) # - Added option to backup only one specific database # # VER 0.9 - (2011-10-28) (author: Joshua Keroes) # - Fixed bugs and improved logic in select_secondary_member() # - Fixed minor grammar issues and formatting in docs # # VER 0.8 - (2011-10-02) (author: Krzysztof Wilczynski) # - Added better support for selecting Secondary member in the # Replica Sets that can be used to take backups without bothering # busy Primary member too much. # # VER 0.7 - (2011-09-23) (author: Krzysztof Wilczynski) # - Added support for --journal dring taking backup # to enable journaling. # # VER 0.6 - (2011-09-15) (author: Krzysztof Wilczynski) # - Added support for --oplog during taking backup for # point-in-time snapshotting. # - Added filter for "mongodump" writing "connected to:" # on the standard error, which is not desirable. # # VER 0.5 - (2011-02-04) (author: Jan Doberstein) # - Added replicaset support (don't Backup on Master) # - Added Hard Support for 'latest' Copy # # VER 0.4 - (2010-10-26) # - Cleaned up warning message to make it clear that it can # usually be safely ignored # # VER 0.3 - (2010-06-11) # - Added the DBPORT parameter # - Changed USERNAME and PASSWORD to DBUSERNAME and DBPASSWORD # - Fixed some bugs with compression # # VER 0.2 - (2010-05-27) (author: Gregory Barchard) # - Added back the compression option for automatically creating # tgz or bz2 archives # - Added a cleanup option to optionally remove the database dump # after creating the archives # - Removed unnecessary path additions # # VER 0.1 - (2010-05-11) # - Initial Release # # VER 0.2 - (2015-09-10) # - Added configurable backup rentention options, even for # monthly backups. # #===================================================================== #===================================================================== #===================================================================== # # Should not need to be modified from here down!! # #===================================================================== #===================================================================== #===================================================================== shellout () { if [ -n "$1" ]; then echo "$1" exit 1 fi exit 0 } # External config - override default values set above for x in default sysconfig; do if [ -f "/etc/$x/automongobackup" ]; then # shellcheck source=/dev/null source /etc/$x/automongobackup fi done # Include extra config file if specified on commandline, e.g. for backuping several remote dbs from central server # shellcheck source=/dev/null [ ! -z "$1" ] && [ -f "$1" ] && source ${1} #===================================================================== PATH=/usr/local/bin:/usr/bin:/bin timestamp=$(date +%F-%T) DATE=$(date +%F-%H%M%S) # Datestamp e.g 2002-09-21 HOD=$(date +%s) # Current timestamp for PITR backup DOW=$(date +%A) # Day of the week e.g. Monday DNOW=$(date +%u) # Day number of the week 1 to 7 where 1 represents Monday DOM=$(date +%d) # Date of the Month e.g. 27 M=$(date +%B) # Month e.g January W=$(date +%V) # Week Number e.g 37 VER=0.11 # Version Number LOGFILE=$BACKUPDIR/$DBHOST-$(date +%F-%H%M%S).log # Logfile Name LOGERR=$BACKUPDIR/ERRORS_$DBHOST-$(date +%F-%H%M%S).log # Logfile Name OPT="" # OPT string for use with mongodump OPTSEC="" # OPT string for use with mongodump in select_secondary_member function QUERY="" # QUERY string for use with mongodump HOURLYQUERY="" # HOURLYQUERY string for use with mongodump # Do we need to use a username/password? if [ "$DBUSERNAME" ]; then OPT="$OPT --username=$DBUSERNAME --password=$DBPASSWORD" if [ "$REQUIREDBAUTHDB" = "yes" ]; then OPT="$OPT --authenticationDatabase=$DBAUTHDB" fi fi # Do we need to use a username/password for ReplicaSet Secondary Members Selection? if [ "$DBUSERNAME" ]; then OPTSEC="$OPTSEC --username=$DBUSERNAME --password=$DBPASSWORD" if [ "$REQUIREDBAUTHDB" = "yes" ]; then OPTSEC="$OPTSEC --authenticationDatabase=$DBAUTHDB" fi fi # Do we use oplog for point-in-time snapshotting? if [ "$OPLOG" = "yes" ] && [ -z "$DBNAME" ]; then OPT="$OPT --oplog" fi # Do we need to backup only a specific database? if [ "$DBNAME" ]; then OPT="$OPT -d $DBNAME" fi # Do we need to backup only a specific collections? if [ "$COLLECTIONS" ]; then for x in $COLLECTIONS; do OPT="$OPT --collection $x" done fi # Do we need to exclude collections? if [ "$EXCLUDE_COLLECTIONS" ]; then for x in $EXCLUDE_COLLECTIONS; do OPT="$OPT --excludeCollection $x" done fi # Do we use a filter for hourly point-in-time snapshotting? if [ "$DOHOURLY" == "yes" ]; then # getting PITR START timestamp # shellcheck disable=SC2012 [ "$COMP" = "gzip" ] && HOURLYQUERY=$(ls -t $BACKUPDIR/hourly | head -n 1 | cut -d '.' -f3) # setting the start timestamp to NOW for the first execution if [ -z "$HOURLYQUERY" ]; then QUERY="" else # limit the documents included in the output of mongodump # shellcheck disable=SC2016 QUERY='{ "ts" : { $gt : Timestamp('$HOURLYQUERY', 1) } }' fi fi # Create required directories mkdir -p $BACKUPDIR/{hourly,daily,weekly,monthly} || shellout 'failed to create directories' if [ "$LATEST" = "yes" ]; then rm -rf "$BACKUPDIR/latest" mkdir -p "$BACKUPDIR/latest" || shellout 'failed to create directory' fi # Do we use a filter for hourly point-in-time snapshotting? if [ "$DOHOURLY" == "yes" ]; then # getting PITR START timestamp # shellcheck disable=SC2012 [ "$COMP" = "gzip" ] && HOURLYQUERY=$(ls -t $BACKUPDIR/hourly | head -n 1 | cut -d '.' -f3) # setting the start timestamp to NOW for the first execution if [ -z "$HOURLYQUERY" ]; then QUERY="" else # limit the documents included in the output of mongodump # shellcheck disable=SC2016 QUERY='{ "ts" : { $gt : Timestamp('$HOURLYQUERY', 1) } }' fi fi # Check for correct sed usage if [ "$(uname -s)" = 'Darwin' ] || [ "$(uname -s)" = 'FreeBSD' ]; then SED="sed -i ''" else SED="sed -i" fi # IO redirection for logging. touch "$LOGFILE" exec 6>&1 # Link file descriptor #6 with stdout. # Saves stdout. exec > "$LOGFILE" # stdout replaced with file $LOGFILE. touch "$LOGERR" exec 7>&2 # Link file descriptor #7 with stderr. # Saves stderr. exec 2> "$LOGERR" # stderr replaced with file $LOGERR. # When a desire is to receive log via e-mail then we close stdout and stderr. [ "x$MAILCONTENT" == "xlog" ] && exec 6>&- 7>&- # Functions # Database dump function dbdump () { if [ -n "$QUERY" ]; then # filter for point-in-time snapshotting and if DOHOURLY=yes # shellcheck disable=SC2086 mongodump --quiet --host=$DBHOST:$DBPORT --out="$1" $OPT -q "$QUERY" MDUMPSTATUS=$? else # all others backups type # shellcheck disable=SC2086 mongodump --quiet --host=$DBHOST:$DBPORT --out="$1" $OPT MDUMPSTATUS=$? fi if [ $MDUMPSTATUS -ne 0 ]; then echo "$timestamp - ERROR: mongodump failed: $1" >&2 return 1 fi [ -e "$1" ] && return 0 echo "$timestamp - ERROR: mongodump failed to create dumpfile: $1" >&2 return 1 } # # Select first available Secondary member in the Replica Sets and show its # host name and port. # function select_secondary_member { # We will use indirect-reference hack to return variable from this function. local __return=$1 # Return list of with all replica set members # shellcheck disable=SC2086 members=( $(mongo --quiet --host $DBHOST:$DBPORT --eval 'rs.conf().members.forEach(function(x){ print(x.host) })' $OPTSEC ) ) # Check each replset member to see if it's a secondary and return it. if [ ${#members[@]} -gt 1 ]; then for member in "${members[@]}"; do is_secondary=$(mongo --quiet --host "$member" --eval 'rs.isMaster().secondary' $OPTSEC ) case "$is_secondary" in 'true') # First secondary wins ... secondary=$member break ;; 'false') # Skip particular member if it is a Primary. continue ;; *) # Skip irrelevant entries. Should not be any anyway ... continue ;; esac done fi if [ -n "$secondary" ]; then # Ugly hack to return value from a Bash function ... # shellcheck disable=SC2086 eval $__return="'$secondary'" fi } if [ -n "$MAXFILESIZE" ]; then write_file() { split --bytes "$MAXFILESIZE" --numeric-suffixes - "${1}-" } else write_file() { cat > "$1" } fi # Compression function plus latest copy compression () { SUFFIX="" dir=$(dirname "$1") file=$(basename "$1") if [ -n "$COMP" ]; then [ "$COMP" = "gzip" ] && SUFFIX=".tgz" [ "$COMP" = "bzip2" ] && SUFFIX=".tar.bz2" echo $timestamp - Tar and $COMP to "$file$SUFFIX" cd "$dir" || return 1 tar -cf - "$file" | $COMP --stdout | write_file "${file}${SUFFIX}" cd - >/dev/null || return 1 else echo "$timestamp - No compression option set, check advanced settings" fi if [ "$LATEST" = "yes" ]; then if [ "$LATESTLINK" = "yes" ];then COPY="ln" else COPY="cp" fi $COPY "$1$SUFFIX" "$BACKUPDIR/latest/" fi if [ "$CLEANUP" = "yes" ]; then echo $timestamp - Cleaning up folder at "$1" rm -rf "$1" fi return 0 } # Run command before we begin if [ "$PREBACKUP" ]; then echo "$timestamp - Prebackup command output." eval "$PREBACKUP" echo fi # Hostname for LOG information if [ "$DBHOST" = "localhost" ] || [ "$DBHOST" = "127.0.0.1" ]; then HOST=$(hostname) if [ "$SOCKET" ]; then OPT="$OPT --socket=$SOCKET" fi else HOST=$DBHOST fi # Try to select an available secondary for the backup or fallback to DBHOST. if [ "x${REPLICAONSLAVE}" == "xyes" ]; then # Return value via indirect-reference hack ... select_secondary_member secondary if [ -n "$secondary" ]; then DBHOST=${secondary%%:*} DBPORT=${secondary##*:} else SECONDARY_WARNING="WARNING: No suitable Secondary found in the Replica Sets. Falling back to ${DBHOST}." fi fi if [ ! -z "$SECONDARY_WARNING" ]; then echo echo "$SECONDARY_WARNING" fi echo "$timestamp - Database Server - $HOST on $DBHOST" echo "$timestamp - Backup started $(date)" # Monthly Full Backup of all Databases if [[ $DOM = "01" ]] && [[ $DOMONTHLY = "yes" ]]; then echo "$timestamp - Monthly Full Backup" echo # Delete old monthly backups while respecting the set rentention policy. if [[ $MONTHLYRETENTION -ge 0 ]] ; then NUM_OLD_FILES=$(find $BACKUPDIR/monthly -depth -not -newermt "$MONTHLYRETENTION month ago" -type f | wc -l) if [[ $NUM_OLD_FILES -gt 0 ]] ; then echo $timestamp - Deleting "$NUM_OLD_FILES" global setting backup file\(s\) older than "$MONTHLYRETENTION" month\(s\) old. find $BACKUPDIR/monthly -not -newermt "$MONTHLYRETENTION month ago" -type f -delete fi fi FILE="$BACKUPDIR/monthly/$DATE.$M" # Weekly Backup elif [[ "$DNOW" = "$WEEKLYDAY" ]] && [[ "$DOWEEKLY" = "yes" ]] ; then echo Weekly Backup echo if [[ $WEEKLYRETENTION -ge 0 ]] ; then # Delete old weekly backups while respecting the set rentention policy. NUM_OLD_FILES=$(find $BACKUPDIR/weekly -depth -not -newermt "$WEEKLYRETENTION week ago" -type f | wc -l) if [[ $NUM_OLD_FILES -gt 0 ]] ; then echo $timestamp - Deleting "$NUM_OLD_FILES" global setting backup file\(s\) older than "$WEEKLYRETENTION" week\(s\) old. find $BACKUPDIR/weekly -not -newermt "$WEEKLYRETENTION week ago" -type f -delete fi fi FILE="$BACKUPDIR/weekly/week.$W.$DATE" # Daily Backup elif [[ $DODAILY = "yes" ]] ; then echo $timestamp - Daily Backup of Databases echo # Delete old daily backups while respecting the set rentention policy. if [[ $DAILYRETENTION -ge 0 ]] ; then NUM_OLD_FILES=$(find $BACKUPDIR/daily -depth -not -newermt "$DAILYRETENTION days ago" -type f | wc -l) if [[ $NUM_OLD_FILES -gt 0 ]] ; then echo Deleting "$NUM_OLD_FILES" global setting backup file\(s\) made in previous weeks. find "$BACKUPDIR/daily" -not -newermt "$DAILYRETENTION days ago" -type f -delete fi fi # Below changed to remove .$DOW as this is not required FILE="$BACKUPDIR/daily/$DATE" # Hourly Backup elif [[ $DOHOURLY = "yes" ]] ; then echo $timestamp - Hourly Backup of Databases echo # Delete old hourly backups while respecting the set rentention policy. if [[ $HOURLYRETENTION -ge 0 ]] ; then NUM_OLD_FILES=$(find $BACKUPDIR/hourly -depth -not -newermt "$HOURLYRETENTION hour ago" -type f | wc -l) if [[ $NUM_OLD_FILES -gt 0 ]] ; then echo "$timestamp - Deleting $NUM_OLD_FILES global setting backup file\(s\) made in previous weeks." find $BACKUPDIR/hourly -not -newermt "$HOURLYRETENTION hour ago" -type f -delete fi fi FILE="$BACKUPDIR/hourly/$DATE.$DOW.$HOD" # convert timestamp to date: echo $TIMESTAMP | gawk '{print strftime("%c", $0)}' fi # FILE will not be set if no frequency is selected. if [[ -z "$FILE" ]] ; then echo "$timestamp - ERROR: No backup frequency was chosen." echo "$timestamp - Please set one of DOHOURLY,DODAILY,DOWEEKLY,DOMONTHLY to \"yes\"" exit 1 fi dbdump "$FILE" && compression "$FILE" echo "$timestamp - Backup completed $(date)" echo echo "Total disk space used for backup storage" echo echo "Size - Location " du -hs "$BACKUPDIR" echo # Run command when we're done if [ "$POSTBACKUP" ]; then echo "$timestamp - Postbackup command output." echo eval "$POSTBACKUP" echo fi # Clean up IO redirection if we plan not to deliver log via e-mail. [ ! "x$MAILCONTENT" == "xlog" ] && exec 1>&6 2>&7 6>&- 7>&- if [ -s "$LOGERR" ]; then eval "$SED" "/^connected/d" "$LOGERR" fi if [ "$MAILCONTENT" = "log" ]; then mail -s "Mongo Backup Log for $HOST - $DATE" "$MAILADDR" < "$LOGFILE" if [ -s "$LOGERR" ]; then cat "$LOGERR" mail -s "ERRORS REPORTED: Mongo Backup error Log for $HOST - $DATE" "$MAILADDR" < "$LOGERR" fi else if [ -s "$LOGERR" ]; then cat "$LOGFILE" echo echo "$timestamp - ###### WARNING ######" echo "$timestamp - STDERR written to during mongodump execution." echo "$timestamp - The backup probably succeeded, as mongodump sometimes writes to STDERR, but you may wish to scan the error log below:" cat "$LOGERR" else cat "$LOGFILE" fi fi # TODO: Would be nice to know if there were any *actual* errors in the $LOGERR STATUS=0 if [ -s "$LOGERR" ]; then STATUS=1 fi # Clean up Logfile rm -f "$LOGFILE" "$LOGERR" exit $STATUS One other thing I forgot to mention here is that you’ll need

mailutilsinstalled on your server for the mail option to work

-

undefined phenomlab moved this topic from Configure on 26 Jun 2023, 17:47

-

undefined phenomlab referenced this topic on 2 Feb 2024, 12:53

Hello! It looks like you're interested in this conversation, but you don't have an account yet.

Getting fed up of having to scroll through the same posts each visit? When you register for an account, you'll always come back to exactly where you were before, and choose to be notified of new replies (ether email, or push notification). You'll also be able to save bookmarks, use reactions, and upvote to show your appreciation to other community members.

With your input, this post could be even better 💗

RegisterLog in