AI... A new dawn, or the demise of humanity?

-

An interesting take from Sky News: one of their own reporters asks whether AI could actually do their job.

-

-

@phenomlab wow, that is very sad… they should add something like this to the handlers, so the moment it touches human flesh, it stops immediately.

-

@crazycells Yes, you seriously have to wonder about the existence of health and safety controls. That specific machine shouldn’t have even been powered on!

-

@crazycells That is an incredible creation. 5000 RPM, yet it stops in 1/1000th of a second? I guess the only downside is that the saw blade and module are destroyed by the 10G impact, but given the $60 price tag, it’s effectively nothing.

And the fact that this guy is willing to use himself as a demo means he has every faith in his own creation, and there aren’t many people who can claim that.

-

@phenomlab I agree. Actually, it was the first time I have seen someone use their own finger; previously I had only seen similar videos with a hot dog sausage standing in for a finger.

$60 is definitely a no-brainer. According to the US Congress, a human life is worth $10 million; if financing a regulation costs less than $10 million per life it will save, the proposition can pass as a law.

-

-

-

@phenomlab I wonder about the specifics…

The article does not give much info except this paragraph…

Generative AI systems such as OpenAI’s ChatGPT have become increasingly ubiquitous in recent months - wowing users with their ability to create text, photos and songs but also causing concerns around jobs, privacy and copyright protection.

So, I guess it is mostly related to copyright protection and privacy etc.

-

@crazycells It does seem that way, yes. That said, Sky News frequently update their articles, so it is worth checking back periodically.

-

@crazycells I think the most concerning part of that article is this:

Officials provided few details on what will make it into the eventual law, which will not take effect until 2025 at the earliest.

If that truly is the case, then how do we gain any comfort that what does make it into law is fit for purpose?

-

@phenomlab I think this is meant to be a framework and a base for the future rather than comprehensive instructions. I wonder if it will change anything at all…

By the way, aren’t most of these AI repos open source? So anyone can use them the way they want? It is like saying “do not share copyrighted material” when they cannot prevent piracy… So, I guess it will be something similar with AI… I mean people will use copyrighted books to train their algorithms and will not acknowledge it…

-

@crazycells Yes, I think so too. But even frameworks require some form of basis, and the failure to provide even a synopsis of that makes me wonder if it even exists yet.

-

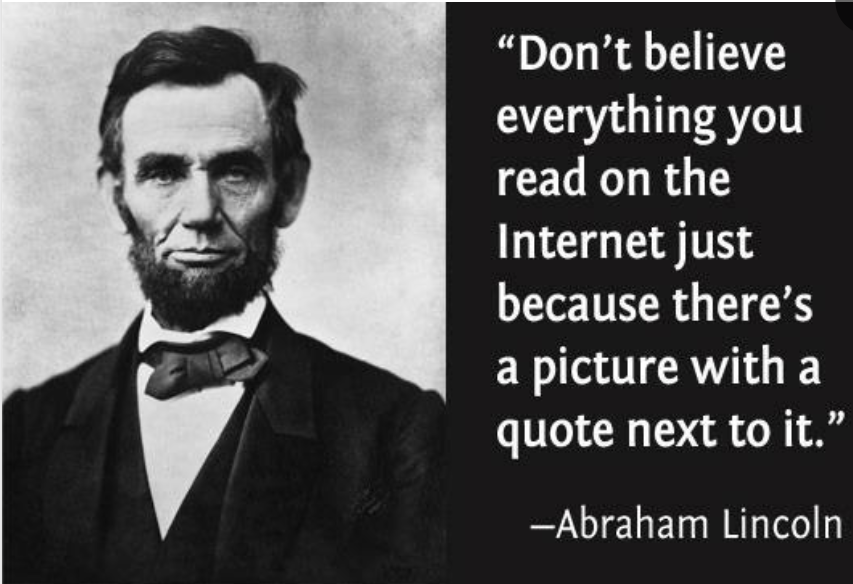

This is interesting. Who knew that deepfake images were also the victim of poor Photoshop skills?

On a serious note, these AI-generated images can be hugely damaging. Just look at the number of times these fake images have been shared across social media sites. They say a picture speaks a thousand words, yet in this case none of those words would have any true bearing or be factual.

-

@phenomlab I agree… as one of the greatest AI, internet and technology experts, Abraham, once said…

-

@phenomlab I guess there might be ways to solve this problem…

The most logical fix is for people to stop using social media as a news site… But we all know that this will never happen… So, the easiest solution could be tagging each shared screen cap or photo on the picture itself (not in the description) as “real”, “art”, “satire”, “ai-made”, etc…

-

@crazycells Exactly. A great analogy.
